Making great progress on the OVA importer. Here are a few blockers I'm still seeing:

1. Synthetic disks are ignored. A synthetic disk is one defined in the OVF that has no file associated with it. The import should create a blank disk of the defined size.

2. For VMs with more than 2 IDE disks, only 2 IDE disks are imported. VMware addresses IDE disks as bus:unit (IDE 0:0, 0:1, 1:0, 1:1), while PVE uses ide0, ide1, ide2, ide3. The importer isn't mapping them correctly and discards the additional disks. In 8.2 the import simply failed, so some progress has been made. If mapping IDE ports is too hard, leave the extra disks in a detached state for now.

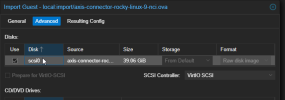

3. The SCSI controller always seems to come in as LSI 53C895A, regardless of the controller specified in the OVF.
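
A rough sketch of the mappings behind items 1-3, assuming Python and `xml.etree.ElementTree`. Synthetic disks are `Disk` entries in the `DiskSection` with no `ovf:fileRef`; their size is `ovf:capacity` scaled by `ovf:capacityAllocationUnits` (only the `byte` and `byte * 2^N` forms are handled). The IDE mapping assumes the bus comes from the IDE controller's `rasd:Address` and the unit from the disk's `rasd:AddressOnParent`, with PVE numbering ide0..ide3 as primary master, primary slave, secondary master, secondary slave. The `SCSI_MODEL_MAP` table (VMware `ResourceSubType` strings to PVE `scsihw` values) and all function names are hypothetical and would need checking against real exports.

```python
import re
import xml.etree.ElementTree as ET

# OVF 1.x envelope namespace (DMTF DSP0243)
OVF = "http://schemas.dmtf.org/ovf/envelope/1"

# Hypothetical mapping: VMware rasd:ResourceSubType -> PVE scsihw value (assumption)
SCSI_MODEL_MAP = {
    "lsilogic": "lsi",          # LSI 53C895A
    "lsilogicsas": "megasas",   # closest PVE SAS option
    "virtualscsi": "pvscsi",    # VMware paravirtual SCSI
}


def capacity_bytes(capacity, units=None):
    """Convert ovf:capacity plus an optional 'byte' / 'byte * 2^N' unit to bytes."""
    if not units:
        return int(capacity)  # OVF default unit is bytes
    m = re.fullmatch(r"\s*byte(?:\s*\*\s*2\^(\d+))?\s*", units)
    if not m:
        raise ValueError(f"unsupported capacity unit: {units!r}")
    return int(capacity) << int(m.group(1) or 0)


def synthetic_disks(envelope):
    """Return {diskId: size_in_bytes} for Disk entries that have no ovf:fileRef."""
    result = {}
    for disk in envelope.iter(f"{{{OVF}}}Disk"):
        if disk.get(f"{{{OVF}}}fileRef") is None:
            result[disk.get(f"{{{OVF}}}diskId")] = capacity_bytes(
                disk.get(f"{{{OVF}}}capacity"),
                disk.get(f"{{{OVF}}}capacityAllocationUnits"),
            )
    return result


def ide_slot(bus, unit):
    """Map an IDE bus:unit pair (0:0, 0:1, 1:0, 1:1) to a PVE ideN key."""
    if bus not in (0, 1) or unit not in (0, 1):
        raise ValueError(f"IDE {bus}:{unit} has no PVE equivalent")
    return f"ide{bus * 2 + unit}"


def scsi_model(subtype, default="lsi"):
    """Pick a PVE scsihw value from a ResourceSubType, falling back to default."""
    return SCSI_MODEL_MAP.get((subtype or "").strip().lower(), default)


# Usage: one file-backed disk and one synthetic 10 GiB disk
SAMPLE = """<Envelope xmlns="http://schemas.dmtf.org/ovf/envelope/1"
  xmlns:ovf="http://schemas.dmtf.org/ovf/envelope/1">
  <DiskSection>
    <Disk ovf:diskId="vmdisk1" ovf:fileRef="file1" ovf:capacity="16"
          ovf:capacityAllocationUnits="byte * 2^30"/>
    <Disk ovf:diskId="vmdisk2" ovf:capacity="10"
          ovf:capacityAllocationUnits="byte * 2^30"/>
  </DiskSection>
</Envelope>"""

env = ET.fromstring(SAMPLE)
print(synthetic_disks(env))
```

The IDE mapping is just `bus * 2 + unit`, so all four VMware positions have a PVE slot; anything outside that range could fall back to leaving the disk detached, as suggested above.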

Other than that, it's the hard things: missing OVF Environment variables preventing some complex OVAs from coming up, OVFs with multiple configuration options, and guests not recognizing the PVE variants of the LSI SAS or PVSCSI controllers.
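
For OVFs with multiple configuration options, a similarly hedged sketch: the `DeploymentOptionSection` lists `Configuration` elements, and a hardware `Item` carrying `ovf:configuration` (a space-separated list of configuration ids) applies only to those configurations, while Items without the attribute apply to all of them. The function names and the sample envelope are hypothetical.

```python
import xml.etree.ElementTree as ET

# OVF 1.x envelope namespace (DMTF DSP0243)
OVF = "http://schemas.dmtf.org/ovf/envelope/1"


def deployment_options(envelope):
    """List (id, is_default) for each Configuration in DeploymentOptionSection."""
    return [
        (cfg.get(f"{{{OVF}}}id"), cfg.get(f"{{{OVF}}}default") in ("true", "1"))
        for cfg in envelope.iter(f"{{{OVF}}}Configuration")
    ]


def items_for_config(envelope, config_id):
    """Return the hardware Items that apply to config_id.

    Items without ovf:configuration apply to every configuration; otherwise
    the attribute holds a space-separated list of configuration ids.
    """
    selected = []
    for item in envelope.iter(f"{{{OVF}}}Item"):
        configs = item.get(f"{{{OVF}}}configuration")
        if configs is None or config_id in configs.split():
            selected.append(item)
    return selected


# Usage: two configurations, one Item each, plus one shared Item
SAMPLE = """<Envelope xmlns="http://schemas.dmtf.org/ovf/envelope/1"
  xmlns:ovf="http://schemas.dmtf.org/ovf/envelope/1">
  <DeploymentOptionSection>
    <Configuration ovf:id="small" ovf:default="true"><Label>Small</Label></Configuration>
    <Configuration ovf:id="large"><Label>Large</Label></Configuration>
  </DeploymentOptionSection>
  <VirtualSystem ovf:id="vm">
    <VirtualHardwareSection>
      <Item ovf:configuration="small"/>
      <Item ovf:configuration="large"/>
      <Item/>
    </VirtualHardwareSection>
  </VirtualSystem>
</Envelope>"""

env = ET.fromstring(SAMPLE)
print(deployment_options(env))
print(len(items_for_config(env, "small")))
```

An importer could offer the ids from `deployment_options` as a choice (preselecting the flagged default) and then build the VM only from the Items returned for that choice.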