I'm using Terraform to deploy VMs on Proxmox by cloning a template. The deployment works in the sense that the VM gets created and starts successfully. However, the VM doesn't apply the Cloud-Init configurations I provided, including:

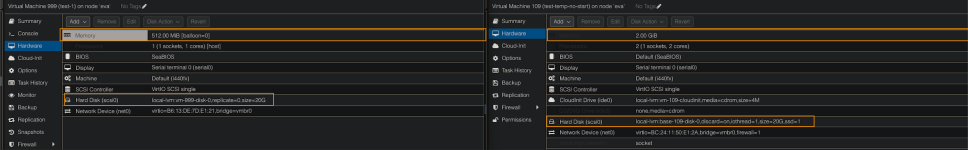

- The VM doesn't use the specified name.

- The Cloud-Init user and password settings are ignored.

Here’s a snippet of my Terraform configuration for the Proxmox VM resource:

Code:

resource "proxmox_vm_qemu" "test-case-15" {
  name        = "humus-3"
  desc        = "test blae"
  target_node = "eva"
  vmid        = 991
  agent       = 1
  clone       = "test-temp-no-start"
  full_clone  = true

  vga {
    type = "virtio"
  }

  os_type = "l26"
  cores   = 2
  sockets = 1
  scsihw  = "virtio-scsi-single"

  disks {
    scsi {
      scsi0 {
        disk {
          size    = 20
          storage = "local-lvm"
        }
      }
    }
  }

  network {
    bridge   = "vmbr0"
    firewall = false
    model    = "virtio"
  }

  ipconfig0 = "ip=dhcp"
}

Here are the Terraform provider details:

Code:

terraform {
  required_providers {
    proxmox = {
      source  = "telmate/proxmox"
      version = "3.0.1-rc4"
    }
  }
  required_version = ">= 1.7.1"
}

variable "proxmox_api_url" {
  type = string
}

variable "proxmox_api_token_id" {
  type      = string
  sensitive = true
}

variable "proxmox_api_token_secret" {
  type      = string
  sensitive = true
}

provider "proxmox" {
  pm_api_url          = var.proxmox_api_url
  pm_api_token_id     = var.proxmox_api_token_id
  pm_api_token_secret = var.proxmox_api_token_secret
  pm_tls_insecure     = true
  pm_debug            = true
  pm_log_levels = {
    _default    = "debug"
    _capturelog = ""
  }
}

My template config:

Code:

agent: 1
boot: order=ide2;scsi0;net0
cipassword: $5$XXUY1nUM$Fu5Oll4nxoO9LpQRBWbvQPrVRdBfWF4PvWh77cpoN37
ciuser: bote
cores: 2
ide0: local-lvm:vm-109-cloudinit,media=cdrom,size=4M
ide2: none,media=cdrom
ipconfig0: ip=dhcp
memory: 2048
meta: creation-qemu=9.0.2,ctime=1732140151
name: test-temp-no-start
net0: virtio=BC:24:11:50:E1:2A,bridge=vmbr0,firewall=1
numa: 0
ostype: l26
scsi0: local-lvm:base-109-disk-0,discard=on,iothread=1,size=20G,ssd=1
scsihw: virtio-scsi-single
serial0: socket
smbios1: uuid=711d1814-4c72-4b08-80ac-7617c0a2de24
sockets: 1
template: 1
vga: serial0
vmgenid: ad659086-8c71-4e25-80de-2315c48fb00d

Things I’ve tried so far:

- Verified that the template has the cloud-init drive attached.

- Ensured that the template is configured with a clean base image using cloud-init.

- Rechecked that the Terraform provider has the necessary permissions on Proxmox.
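
The first check above could be repeated against the clone as well as the template. This is only a minimal sketch: the helper name `has_cloudinit_drive` is hypothetical, the template VMID 109 is inferred from the disk names in the template config, and 991 is the `vmid` from the Terraform resource.

```shell
#!/bin/sh
# Hypothetical helper: reads a Proxmox VM config from stdin and succeeds
# only if some ide/sata/scsi/virtio slot holds a cloud-init drive.
has_cloudinit_drive() {
    grep -Eq '^(ide|sata|scsi|virtio)[0-9]+: .*cloudinit'
}

# Intended use on the Proxmox node (VMIDs are assumptions, see above):
#   qm config 109 | has_cloudinit_drive && echo "template has a cloud-init drive"
#   qm config 991 | has_cloudinit_drive && echo "clone has a cloud-init drive"
#   qm cloudinit dump 991 user   # show the user-data generated for the clone
```

If the template passes and the clone does not, that would suggest the drive is lost during cloning rather than missing from the template.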

Any tips on debugging this would be greatly appreciated!