Hi, all,

I'm new to Proxmox. Forgive me if the answer/solution here is obvious!

Since mid-afternoon yesterday, my Ubuntu Server 22.04.1 VM has randomly started shutting down. I find no evidence of errors in the logs of the Ubuntu machine itself, but I am seeing an OOM-Kill in Proxmox's logs.

I'm currently running version 7.3.3 of Proxmox. It's running on an i7-6700 with 16GB of DDR4 RAM and a 256GB SSD. I have 2x2TB HDDs and 2x2TB SSDs passed through to the Ubuntu VM, which has them set up as two mirror vdevs in a single zpool.

I have 14GB assigned to my Ubuntu VM, leaving what I thought was 2GB free for Proxmox. The VM seems to take up 13 of the 14 GB almost constantly after I do any writing, but I presume this is just the ZFS ARC.
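One way to check the ARC presumption from inside the VM is to read the `size` and `c_max` rows of `/proc/spl/kstat/zfs/arcstats`, which OpenZFS on Linux exposes. The following is only a minimal sketch: the helper name `parse_arcstats` is made up for illustration, and it assumes the usual three-column `name type data` layout of that file.

```python
import os

ARCSTATS_PATH = "/proc/spl/kstat/zfs/arcstats"  # provided by OpenZFS on Linux
BYTES_PER_GIB = 1024 ** 3


def parse_arcstats(text):
    """Return {name: value} for every 'name type data' row with integer data.

    Header rows are skipped because they do not have exactly three fields
    or their last field is not a number.
    """
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 3 and parts[2].isdigit():
            stats[parts[0]] = int(parts[2])
    return stats


if __name__ == "__main__":
    if os.path.exists(ARCSTATS_PATH):
        with open(ARCSTATS_PATH) as f:
            stats = parse_arcstats(f.read())
        # 'size' is the current ARC size, 'c_max' the configured ceiling (bytes).
        for key in ("size", "c_max"):
            if key in stats:
                print(f"{key}: {stats[key] / BYTES_PER_GIB:.2f} GiB")
    else:
        print("arcstats not found; is ZFS loaded on this machine?")
```

If `size` is close to the VM's used memory, the ARC is the likely consumer.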

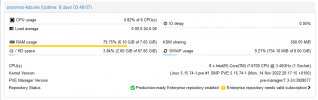

I'm noticing that the Proxmox host shows 8GB assigned to RAM and another 8GB assigned to swap. Is this the cause of my OOM issues? Does my VM think it can use up to 14GB when it actually only has access to 8GB, because the other 8GB is reserved for swap on the Proxmox host?

Here is the relevant error log:

Code:

Mar 1 00:52:28 proxmox-kitsune kernel: [659046.224786] oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=pveproxy.service,mems_allowed=0,global_oom,task_memcg=/qemu.slice/101.scope,task=kvm,pid=167>

Mar 1 00:52:28 proxmox-kitsune kernel: [659046.224838] Out of memory: Killed process 1675037 (kvm) total-vm:17593624kB, anon-rss:6575196kB, file-rss:196kB, shmem-rss:0kB, UID:0 pgtables:30100kB oom_score_ad>

Mar 1 00:52:28 proxmox-kitsune systemd[1]: 101.scope: A process of this unit has been killed by the OOM killer.

Mar 1 00:52:28 proxmox-kitsune kernel: [659047.547013] fwbr101i0: port 2(tap101i0) entered disabled state

Any thoughts on what I could do to fix this?
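To make the sizes in the kill line from the log above easier to compare with the 14GB assigned to the VM, here is a minimal Python sketch that pulls out each `name:<n>kB` field and converts it to GiB. The function names are my own, the truncated tail of the log line is left out, and it assumes the kernel's `kB` means 1024-byte units.

```python
import re

# OOM-kill line copied from the log above (timestamp prefix and the
# truncated tail after pgtables omitted).
OOM_LINE = (
    "Out of memory: Killed process 1675037 (kvm) total-vm:17593624kB, "
    "anon-rss:6575196kB, file-rss:196kB, shmem-rss:0kB, UID:0 pgtables:30100kB"
)

KIB_PER_GIB = 1024 * 1024


def parse_oom_sizes(line):
    """Return {field: size_in_kB} for every 'name:<digits>kB' pair in the line."""
    return {name: int(kb) for name, kb in re.findall(r"([\w-]+):(\d+)kB", line)}


def to_gib(kb):
    """Convert a size in kB (1024-byte units) to GiB."""
    return kb / KIB_PER_GIB


sizes = parse_oom_sizes(OOM_LINE)
for name, kb in sizes.items():
    print(f"{name:>10}: {to_gib(kb):6.2f} GiB")
```

Here `total-vm` is the virtual address space of the killed `kvm` process and `anon-rss` is the anonymous memory it had resident in RAM when it was killed.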
