Proxmox now provides its own 6.11 kernel. There is no longer a need for the old Ubuntu kernel workaround.

Proxmox Kernel 6.8.12-2 Freezes (again)

- Thread starter Decco1337


Had to go back to 6.11.0-9, with 6.11.0-12 got hangs again.

6.11.0-13 needs to be tested also, and pve-6.11.0-1.

How did you go back to 6.11.0-9?

I have 3 nearly identical hardware hosts in one cluster - but one is crashing with kernel 6.8; updated to kernel 6.11 for proxmox and rebooted, but still crashing - I have

Code:

6.11.0-2-pve

Code:

proxmox-kernel-6.8/stable 6.8.12-5 all [upgradable from: 6.8.12-4]

I'm running on Supermicro hardware with AMD EPYC 7513 CPUs. Really annoying.

The only HW difference might be the network cards:

Code:

root@crashing-server:~# lspci | grep -E -i --color 'network|ethernet'

01:00.0 Ethernet controller: Intel Corporation Ethernet Controller X550 (rev 01)

01:00.1 Ethernet controller: Intel Corporation Ethernet Controller X550 (rev 01)

81:00.0 Ethernet controller: Intel Corporation Ethernet Controller 10-Gigabit X540-AT2 (rev 01)

81:00.1 Ethernet controller: Intel Corporation Ethernet Controller 10-Gigabit X540-AT2 (rev 01)

root@working-server:~# lspci | grep -E -i --color 'network|ethernet'

44:00.0 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GBASE-T (rev 02)

44:00.1 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GBASE-T (rev 02)

81:00.0 Ethernet controller: Intel Corporation Ethernet Controller 10-Gigabit X540-AT2 (rev 01)

81:00.1 Ethernet controller: Intel Corporation Ethernet Controller 10-Gigabit X540-AT2 (rev 01)

//edit 07.01.2025

I replaced the network card from crashing-server today* - before the replacement I also had random (no specific time) crashes - even with no VMs running on the host. Now time will tell if this worked - if yes I'd be more than happy. If I don't update this post for any reason this solved my issues.

Code:

root@crashing-server:~# lspci | grep -E -i --color 'network|ethernet'

44:00.0 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GBASE-T (rev 02)

44:00.1 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GBASE-T (rev 02)

81:00.0 Ethernet controller: Intel Corporation Ethernet Controller 10-Gigabit X540-AT2 (rev 01)

81:00.1 Ethernet controller: Intel Corporation Ethernet Controller 10-Gigabit X540-AT2 (rev 01)

* Intel X550 PCIE (AOC-STGS-i2T on a regular PCIE riser AOC-2UR668G4) was replaced by an Intel X710, special Add-On Card for my Supermicro Mainboard AOC-2UR68G4-i2XT


Sadly I can't update my previous post anymore. I had no freezes since January - but since 12 days ago they are back. 12.7.25 was the first freeze - today the second (12 days later). Still documenting my steps here, in case they might help others (or help me find the issue while writing it down).

- Updated SUPERMICRO BIOS_FW 07/29/2024 Ver 3.0 to 03/28/2025 Rev 3.3

- Pinned kernel 6.8.12-4-pve again instead of manually installed 6.11.0-2-pve

- Rebooting after the BIOS update resulted in a boot loop - seems like something with UEFI / LEGACY / DUAL boot got messed up, but it was an easy fix in the BIOS settings (went to DUAL and selected the right UEFI disk with the OS).

- Reinstalled IPMI firmware 03.10.43 (strange that it worked if it was the same version)

- Kernel 6.8.12-4-pve unpinned

- Performed full-upgrade of proxmox from version 8.3.0 to 8.4.5 - now running with kernel 6.11.11-2-pve and pinned that kernel

- Now back to observing if it freezes again.

- The other two servers that have identical hardware did not freeze once since their deployment (but they were purchased together and later in comparison to the crashing server)... who knows.

You didn't specify the Supermicro Motherboard you are using at all.

- The other two servers that have identical hardware did not freeze once since their deployment (but they were purchased together and later in comparison to the crashing server)... who knows.

I have zero Experience with AMD EPYC Systems, but if you say that they have Identical Hardware, did you also check the Hardware Revision of the Motherboard (e.g. 1.01 vs 1.20 or whatever) and/or the other Hardware (e.g. NIC) to see if they are any different ?

Possibly also different FW on the NIC and other Components (HBA etc).

The freezing issues reported here seem sporadic/inconsistent, which shouts hardware instability. One person even confirmed it was a RAM problem.

The fixes in the first post, one of them disables command queuing for SATA devices which is a pretty big deal.

No issues for me on my 3 proxmox devices running 8.x and 6.8.x kernel.

My suggestion, if not done already update bios.

Disable PCIe power saving features in bios.

Make sure RAM is configured in a safe configuration, correct voltage, not overclocked etc.

Stress test RAM.

Disable SATA power management in bios.

Disable unused hardware in bios.

Make sure everything seated properly on board, and no kinks in cables.

Disable special performance related features in bios, especially vendor specific ones, these are often unsupported performance hacks.

If still unstable try in bios if available.

Disable package c-states.

Disable core c-states higher than C6.

As always backup/make a note of anything changed, in case need to revert.


To be fair, I feel like the only way to troubleshoot these kind of Issues would be to connect a Serial Null-Modem Cable (DB9) and then log via e.g. `minicom` what's going on. Obviously making sure that the BIOS configured the Serial Port correctly first and do a test on the "Client" System. You should see e.g. the Boot Process & initial Kernel/Initrd Output via Serial.

Netconsole would be great but I never managed to get it working. Fair enough it was over netcat and there were some Posts stating that in those cases it's not working even though it's supposed to, but anyways, I feel like Serial over a Null-Modem Cable (DB9) is the best bet, all other Things being equal. That way you should get a Stack Trace which should point in the right Direction of what's causing the Issue. Logs in /var/log/syslog and /var/log/kern.log and such I believe are of no use, since the Kernel is already frozen by that Time, so it cannot write to Disk what caused the Issue in the first Place (obviously).

Of course you might want to do some kind of automatic Log Rotation or abort & restart every Day or so such that your Log doesn't get too big though.

You could e.g. schedule a Systemd Timer every Day, kill the Script, then restart the Script which in turns does:

Code:

TIMESTAMP=$(date +"%Y%m%d-%Hh%Mm%Ss"); minicom --capturefile="serial-log-${TIMESTAMP}.log" --device=/dev/ttyS0 --baudrate 115200

Obviously check Baud Rate and Device as it might be different.
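A rough sketch of the daily-restart idea, in case it helps. It assumes `minicom` and the coreutils `timeout` command are installed; the function names, the 24-hour limit and the defaults (/dev/ttyS0, 115200) are only illustrative. Instead of a systemd timer that kills and restarts the script, this variant lets `timeout` end each capture after a day and a loop start the next one under a fresh file name. I have not verified how well minicom behaves without an interactive terminal, so test it in normal operation first.

```shell
#!/bin/sh
# Hypothetical helper names; only minicom, timeout and date are real tools.

# Print a timestamped capture-file name, same pattern as the one-liner above.
serial_log_name() {
    printf 'serial-log-%s.log\n' "$(date +"%Y%m%d-%Hh%Mm%Ss")"
}

# Capture the serial console, starting a new log file every 24 hours.
# $1 = serial device (assumed /dev/ttyS0), $2 = baud rate (assumed 115200)
capture_serial_daily() {
    device="${1:-/dev/ttyS0}"
    baud="${2:-115200}"
    while :; do
        status=0
        timeout 86400 minicom --capturefile="$(serial_log_name)" \
            --device="$device" --baudrate "$baud" || status=$?
        # timeout exits with 124 when it had to stop minicom after 24 h;
        # any other status means minicom ended on its own, so stop looping.
        [ "$status" -eq 124 ] || break
    done
}
```

Calling `capture_serial_daily /dev/ttyS0 115200` on the logging machine starts the loop; old capture files still have to be cleaned up separately.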

Not 100% sure that it is related, but I had a crontab entry for setting the CPU governor to `performance` after every reboot. I removed the entry yesterday and set the governor to `powersave` instead. So far it has been running without interruptions.

I used a tteck helper script for setting up the crontab. This one: https://github.com/tteck/Proxmox/blob/main/misc/scaling-governor.sh.

Edit: I also have the microcode for Intel installed.

This worked for me!

Starting point symptom:

Proxmox kept on crashing randomly (uptime before crashing ranges from few hours to mostly under 24 hours), journalctl gave no information about what went wrong. Symptom of the crash is - screen blank, no response, more than half the time (but not all the time) I find the CPU fan to be on high spinning speed.

It is a brand new system (MSI Cubi 1M Intel Core 3-100U Barebone Mini PC), Installed Win11, very stable. Installed Ubuntu, very stable. Proxmox was the only thing that was crashing randomly.

Tried multiple different kernel versions, at least these were tested - no difference

6.14.8-2-bpo12-pve

6.8.12-13-pve

Tried all the boot cmdline/boot arguments in various combinations of these - no difference:

pcie_port_pm=off libata.force=noncq intel_iommu=off nox2apic

Wanted to see if certain external trigger may be the cause - unplugged network cable - still crashed

Installed latest microcode right at the start, and cleared/reset the BIOS settings after upgrading to the latest BIOS (then re-checked the BIOS settings I can change) - never made any difference at all

But changing the scaling governor worked (even after I have rolled back all the cmdline/boot arguments) - 10 days uptime so far

I used the script from https://community-scripts.github.io/ProxmoxVE/scripts?id=scaling-governor and made sure the powersave mode is activated at every boot.
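For anyone who wants to check or switch the governor by hand before installing a helper script, here is a minimal sketch. It assumes the standard cpufreq sysfs layout under /sys/devices/system/cpu; the function names are made up for illustration and this is not what the linked script does internally. Writing to the real sysfs files needs root.

```shell
#!/bin/sh
# set_governor GOVERNOR [CPU_ROOT]
# Writes GOVERNOR to every cpuN/cpufreq/scaling_governor file below CPU_ROOT
# (default: /sys/devices/system/cpu). CPU_ROOT is only there for dry runs.
set_governor() {
    gov="$1"
    root="${2:-/sys/devices/system/cpu}"
    for f in "$root"/cpu*/cpufreq/scaling_governor; do
        [ -e "$f" ] || continue   # glob did not match: no cpufreq support
        echo "$gov" > "$f"
    done
}

# show_governors [CPU_ROOT]
# Prints each distinct active governor together with the number of CPUs using it.
show_governors() {
    root="${1:-/sys/devices/system/cpu}"
    cat "$root"/cpu*/cpufreq/scaling_governor 2>/dev/null | sort | uniq -c
}
```

`set_governor powersave` followed by `show_governors` shows whether the change took effect. The setting does not survive a reboot, which is why the posts above rely on a crontab entry.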



Thanks for your reply. I had another freeze this saturday, so checking again...

Mainboard

It's an H12DSU-iN mainboard in all servers, same version 1.01. The BIOS on the failing server is newer (it was the same as on the working servers when the issues started; updating didn't help).

BIOS failing server:

Code:

Version: 3.3
Release Date: 03/28/2025

BIOS working servers:

Code:

Version: 2.5
Release Date: 09/14/2022

Network

Network addon-cards are 10-Gigabit X540-AT2 (rev 01) and X710 for 10GBASE-T (rev 02) for all servers.

Firmware Version is identical (0x80000628, 1.1681.0 for the X540, 8.50 0x8000be1e 1.3082.0 for the X710)

HBA

HBA is Serial Attached SCSI controller: Broadcom / LSI Fusion-MPT 12GSAS/PCIe Secure SAS38xx, Subsystem: Super Micro Computer Inc AOC-S3816L-L16iT (NI22) Storage Adapter, Kernel driver in use: mpt3sas, Kernel modules: mpt3sas

(lspci -nn | grep -i 'raid\|sas\|sata')

The failing server has a newer firmware:

Code:

[ 3.046149] mpt3sas_cm0: FW Package Ver(20.00.00.03)
[ 3.046826] mpt3sas_cm0: SAS3816: FWVersion(20.00.01.00), ChipRevision(0x00)

Compared to the working servers:

Code:

[ 4.452318] mpt3sas_cm0: FW Package Ver(16.00.08.01)
[ 4.471775] mpt3sas_cm0: SAS3816: FWVersion(16.00.08.00), ChipRevision(0x00)

(sudo dmesg | grep mpt3sas)

So I might check what is the latest firmware here and try to update it.
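To compare the HBA firmware across all three hosts without reading dmesg by eye, something like the following could work. It is only a sketch that parses the two mpt3sas log lines shown above; the function name is made up and the output format is my own choice.

```shell
#!/bin/sh
# mpt3sas_fw_versions: read dmesg-style lines on stdin and print
# "package=<ver> firmware=<ver>" taken from the mpt3sas driver messages.
mpt3sas_fw_versions() {
    awk '
        /mpt3sas/ && /FW Package Ver\(/ {
            s = $0; sub(/.*FW Package Ver\(/, "", s); sub(/\).*/, "", s); pkg = s
        }
        /mpt3sas/ && /FWVersion\(/ {
            s = $0; sub(/.*FWVersion\(/, "", s); sub(/\).*/, "", s); fw = s
        }
        END { printf "package=%s firmware=%s\n", pkg, fw }
    '
}
```

Run `dmesg | mpt3sas_fw_versions` on each host (as root) and compare the resulting lines.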

RAM / memory

I found that the failing server does have memory from Micron Technology (36ASF4G72PZ-3G2R1), the others are Samsung (M393A4K40EB3-CWE) - but both have 3200MT/s speed and look like they have the same specs.

For the other components it's also possible that there are minor version changes, but not really sure what else to check.

CPU / microcode

I found that the amd64-microcode package was not present on any of my 3 servers, I installed the package on the failing one and rebooted.

https://pve.proxmox.com/pve-docs/pve-admin-guide.html#sysadmin_debian_firmware_repo

https://pve.proxmox.com/pve-docs/pve-admin-guide.html#sysadmin_firmware_cpu


disables command queuing for SATA devices

done using

Code:

sed -i '$ s/$/ pcie_port_pm=off/' /etc/kernel/cmdline
sed -i '$ s/$/ libata.force=noncq/' /etc/kernel/cmdline
update-initramfs -u -k all && proxmox-boot-tool refresh

according to the thomas-krenn site / recommendations

BIOS settings:

- already checked for PCIE power management - ASPM is already disabled.

- found the time / clock was exactly 2 hours off - changed that

- did not find any other power related settings e.g. for SATA

- did not find anything about package c-states
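One caveat about the sed commands for the kernel command line further up: running them a second time appends the same parameter again. A minimal sketch of an append that skips parameters already present (the function name is hypothetical; /etc/kernel/cmdline is the file used above, which as far as I know is only read on hosts that boot via systemd-boot, while GRUB hosts use /etc/default/grub):

```shell
#!/bin/sh
# add_cmdline_param PARAM [FILE]
# Appends PARAM to the end of FILE (default /etc/kernel/cmdline)
# unless it is already present as a whole word.
add_cmdline_param() {
    param="$1"
    file="${2:-/etc/kernel/cmdline}"
    # -F: literal match, -w: whole word, so the "." in a name is not a wildcard
    if grep -qwF -- "$param" "$file"; then
        return 0
    fi
    # Command substitution drops the trailing newline, so the parameter
    # lands at the end of the last line, like the sed '$ s/$/ .../' form.
    current=$(cat "$file")
    printf '%s %s\n' "$current" "$param" > "$file"
}
```

After `add_cmdline_param pcie_port_pm=off` and `add_cmdline_param libata.force=noncq`, the `update-initramfs -u -k all && proxmox-boot-tool refresh` step from above is still needed, followed by a reboot.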

Other changes:

- pinned kernel "6.8.12-13-pve" instead of "6.8.12-12-pve" with proxmox-boot-tool and rebooted

- made sure all VMs on the failing server do not have CPU configured as [host] but default - had 3 with [host] (not really sure if this will affect anything)

Will now see if the issues happen again.

My next tasks if this freeze is happening again is to:

- run memtest

- update firmware of HBA

- do some research on / try the scaling-governor in powersave mode (like @MagicHarri did)

- check if hardware is in place / properly mounted - I feel that it was much more stable when I opened the server and replaced the NIC card a year ago (probably also checking all connections). After the hardware change it was good for a longer period. Maybe I'll reseat all the memory bars and maybe also the CPUs (had another Supermicro Server freeze completely, suddenly wouldn't even POST, reseating everything fixed that - completely other datacenter and usage)

So probably - I'll be back in 2-3 weeks when it's freeze time again

HBA

HBA is Serial Attached SCSI controller: Broadcom / LSI Fusion-MPT 12GSAS/PCIe Secure SAS38xx, Subsystem: Super Micro Computer Inc AOC-S3816L-L16iT (NI22) Storage Adapter, Kernel driver in use: mpt3sas, Kernel modules: mpt3sas

(lspci -nn | grep -i 'raid\|sas\|sata')

The failing server has a newer firmware:

Code:

[ 3.046149] mpt3sas_cm0: FW Package Ver(20.00.00.03)
[ 3.046826] mpt3sas_cm0: SAS3816: FWVersion(20.00.01.00), ChipRevision(0x00)

Compared to the working servers:

Code:

[ 4.452318] mpt3sas_cm0: FW Package Ver(16.00.08.01)
[ 4.471775] mpt3sas_cm0: SAS3816: FWVersion(16.00.08.00), ChipRevision(0x00)

(sudo dmesg | grep mpt3sas)

So I might check what is the latest firmware here and try to update it.

It could indeed be a stubborn Firmware Quirk. I got no direct Experience with this specific one, only LSI 2118/3008 Series Chipsets so far.

RAM / memory

I found that the failing server does have memory from Micron Technology (36ASF4G72PZ-3G2R1), the others are Samsung (M393A4K40EB3-CWE) - but both have 3200MT/s speed and look like they have the same specs.

For the other components it's also possible that there are minor version changes, but not really sure what else to check.

I doubt that is an Issue *per se*, unless it would prevent you from booting at all, which clearly is NOT the case here.

Memory could be faulty though, which is why I suggested a Memtest86.

However, if you have lots of Memory it could very well take several DAYS to complete such Test.

Did you look in IPMI / BMC System Event Log (SEL) to see if there are any Memory or BIOS related Issues ?

CPU / microcode

I found that the amd64-microcode package was not present on any of my 3 servers, I installed the package on the failing one and rebooted.

https://pve.proxmox.com/pve-docs/pve-admin-guide.html#sysadmin_debian_firmware_repo

https://pve.proxmox.com/pve-docs/pve-admin-guide.html#sysadmin_firmware_cpu

Did you run `update-initramfs -k all -u` afterwards ?

disables command queuing for SATA devices

done using

Code:

sed -i '$ s/$/ pcie_port_pm=off/' /etc/kernel/cmdline
sed -i '$ s/$/ libata.force=noncq/' /etc/kernel/cmdline
update-initramfs -u -k all && proxmox-boot-tool refresh

according to the thomas-krenn site / recommendations

No Experience with those.

BIOS settings:

- already checked for PCIE power management - ASPM is already disabled.

- found the time / clock was exactly 2 hours off - changed that

- did not find any other power related settings e.g. for SATA

- did not find anything about package c-states

About the System Time, easiest is probably to `apt-get install chrony` & `systemctl enable --now chrony`.

Very weird that there is no C-State related Setting.

I believe you could, if you really wanted to check the Configuration 100% and see if there are any notable Differences that you might have overlooked, get a Configuration "dump" of the BIOS Settings via Supermicro SUM to XML File

See for Instance: https://www.supermicro.com/support/faqs/faq.cfm?faq=28095 and Supermicro Update Manager (SUM) at https://www.supermicro.com/en/solutions/management-software/supermicro-update-manager.

Other changes:

- pinned kernel "6.8.12-13-pve" instead of "6.8.12-12-pve" with proxmox-boot-tool and rebooted

- made sure all VMs on the failing server do not have CPU configured as [host] but default - had 3 with [host] (not really sure if this will affect anything)

Will now see if the issues happen again.

I'd be surprised if that was the Case. I ALWAYS use `host` CPU Type in all of my Guests.

Any notable Correlation with Crashing with regards to the Load / Temperature ? Or high Network Activity ? Are you 100% it's a Kernel Panic and not "just" the NIC shutting down for whatever Reason ?

My next tasks if this freeze is happening again is to:

- run memtest

- update firmware of HBA

- do some research on / try the scaling-governor in powersave mode (like @MagicHarri did)

- check if hardware is in place / properly mounted - I feel that it was much more stable when I opened the server and replaced the NIC card a year ago (probably also checking all connections). After the hardware change it was good for a longer period. Maybe I'll reseat all the memory bars and maybe also the CPUs (had another Supermicro Server freeze completely, suddenly wouldn't even POST, reseating everything fixed that - completely other datacenter and usage)

So probably - I'll be back in 2-3 weeks when it's freeze time again

Could it be caused by high Temperature of CPU / NIC / HBA ? That might be a Reason why `powersave` helps in some Cases.

It's also possible that you had a bad Silicon Lottery and your CPU needs slightly higher Voltage, but I'm surprised that the Defaults from OEM like Supermicro fail to provide that. I had some Issues when I was undervolting a CPU (crashes every 2-3 Weeks), but since you cannot do that with a Supermicro Motherboard ... unless of course you are using an undervolting Tool (you can find some on GitHub for both Intel and AMD) and the MSR "Trick" (or equivalent) works correctly for you.

It could indeed be a stubborn Firmware Quirk. I got no direct Experience with this specific one, only LSI 2118/3008 Series Chipsets so far.

Still thanks for checking

I doubt that is an Issue *per se*, unless it would prevent you from booting at all, which clearly is NOT the case here.

Memory could be faulty though, which is why I suggested a Memtest86.

However, if you have lots of Memory it could very well take several DAYS to complete such Test.

Did you look in IPMI / BMC System Event Log (SEL) to see if there are any Memory or BIOS related Issues ?

Everything clean in IPMI / Event Log - no issues at all. Also compared the RAM again, their performance specs are 1:1 the same.

Did you run `update-initramfs -k all -u` afterwards ?

Yep, I did.

No Experience with those.

Me too. ^^

About the System Time, easiest is probably to `apt-get install chrony` & `systemctl enable --now chrony`.

Chrony is already installed and configured - it was the BIOS time that was off, not the Debian / Proxmox system time. But will check again if chrony is working as it should.

Very weird that there is no C-State related Setting.

Maybe I'm just too stupid to find them. Will look that up again.

I believe you could, if you really wanted to check the Configuration 100% and see if there are any notable Differences that you might have overlooked, get a Configuration "dump" of the BIOS Settings via Supermicro SUM to XML File

See for Instance: https://www.supermicro.com/support/faqs/faq.cfm?faq=28095 and Supermicro Update Manager (SUM) at https://www.supermicro.com/en/solutions/management-software/supermicro-update-manager.

Will definitely do that. Thanks for the hint!

I'd be surprised if that was the Case. I ALWAYS use `host` CPU Type in all of my Guests.

Same here…

Any notable Correlation with Crashing with regards to the Load / Temperature ? Or high Network Activity ? Are you 100% it's a Kernel Panic and not "just" the NIC shutting down for whatever Reason ?

Could it be caused by high Temperature of CPU / NIC / HBA ? That might be a Reason why powersave helps in some Cases.

The crashing times were different for every crash - also thought maybe it's during backup or something like that, but no. I kept the load very minimal after the last crash, there are just 5 VMs running on the server (Debian 11 - Debian 12) which barely cause any load.

The servers are in an A/C controlled environment, also the temperatures in our monitoring system look good / no special peaks for the server or the CPUs in general.

I'm not monitoring any other addon cards / PCIE temperature - so it could technically be that one of the NICs is suddenly too hot (but as the system is stable if I cause severe load on the network I doubt that's the issue)

And no - not really sure it's a kernel panic. The system just freezes with no (sys)logs that could give a hint. (Also nothing on the virtual HTML5 console - just the Proxmox login)

It's also possible that you had a bad Silicon Lottery and your CPU needs slightly higher Voltage, but I'm surprised that the Defaults from OEM like Supermicro fail to provide that. I had some Issues when I was undervolting a CPU (crashes every 2-3 Weeks), but since you cannot do that with a Supermicro Motherboard ... unless of course you are using an undervolting Tool (you can find some on GitHub for both Intel and AMD) and the MSR "Trick" (or equivalent) works correctly for you.

Hm, could be - but would this really be the case if the server works for a whole year, and then "suddenly" breaks? Something must have happened... Never had a faulty CPU and I had over 50 Supermicro Servers within the last 10 years. Would be my first.

Thanks a lot for your reply!! Really appreciate it.

Everything clean in IPMI / Event Log - no issues at all. Also compared the RAM again, their performance specs are 1:1 the same.

Alright ...

Maybe I'm just too stupid to find them. Will look that up again.

Sometimes the OEM / BIOS Vendor either puts them in Places that are impossible to find, or they are altogether hidden.

You may need to patch BIOS (using a NEW Version of `uefitool` to extract the BIOS, ifrextractor to dump that to Text File, edit using e.g. HxD Editor, rebuilding a BIOS with a LEGACY version of `uefitool` and finally either flashing via IPMI OR you may need to use a CH341).

But before we go that Road I think it's better to explore other Possibilities

Kernel Version and ZFS Version if applicable ?

I'm not monitoring any other addon cards / PCIE temperature - so could technically be that one if the NICs is suddenly too hot (but as the system is stable if I cause severe load on the network I doubt thats the issue)

And no - not really sure it's kernel panic. The system just freezes with no (sys)logs that could give a hint. (Also nothing on the virtual HTML5 console - just the Proxmox login)

But you cannot type or do anything with the Keyboard, right ? That in itself is NOT conclusive of a Hardware Freeze though. On my Supermicro Motherboards sometimes I get a KVM Freeze instead, thus I need to reboot the KVM and sometimes even the IPMI/BMC altogether (cold Reset).

That can happen e.g. when I have Network Issues and a flood of Warnings/Errors gets printed to the TTY (and seen via KVM).

Rebooting the KVM & IPMI/BMC can sometimes Help.

Do you have monitoring via e.g. Uptime Kuma, Zabbix, Gatus, Monit maybe, ..., of your LAN Connection from another Host ? Just to check that it's not a NIC Issue only. Did you try pinging/monitoring the Host on another NIC as well, to see if it's not "only" just the NIC that failed ? To Mind comes a case with a Mellanox NIC shutting down due to Overtemperature (and there was something in `dmesg` about that). I'm NOT saying it's your Case, but if you only have that NIC monitored via Ping etc, that could be ONE Possibility.

I'd just like that you are 100% sure that it IS a Hardware Freeze, because in that case:

- You could setup a Watchdog to automatically reboot the System

- There should definitively be some Indication somewhere (if not you'll need to setup & test Serial Debugging using Null Modem Cable and/or Netconsole, but make sure you validate that they are working in "normal Operation" before "counting" on them to log the Error)

Hm, could be - but would this really be the case if the server works for a whole year, and then "suddenly" breaks? Something must have happened... Never had a faulty CPU and I had over 50 Supermicro Servers within the last 10 years. Would be my first

But thats also why I have reseating everything, including the CPUs, on my list.

I agree.

But blindly speculating about what might/could/can/will replicate your Issue can lead to an infinite list of Hypothesis.

We'll never run out of Ideas, we'll run out of Money and Patience before then though

It could also be a Core that after a while turned "Bad". IIRC Ryzen had Issues where some Motherboard Manufacturers were putting out a WAY too HIGH CPU Voltage, thus frying the CPU. You might have gotten a similar but milder Case of that, so NOT total failure, but maybe one Core got damaged anyway. Or the Original Silicon used to build your CPU had a tiny little defect, who knows, everything is possible.

Or it could "simply" be an incompatibility with the HBA/NIC or possibly ZFS. Kernel 6.XX "Officially Supported" on ZFS does NOT necessarily mean that it works well, just that "most" Stuff works in "most" Circumstances.

Out of Curiosity, did you try Kernel 6.5.x and see if it crashes/freezes with that ? I agree it's NOT ideal but when you are out of Ideas.

Personally when the Proxmox Kernel failed to boot for whatever Reason, I installed the Debian Stock Backports Kernel (probably NOT needed with PVE 9 / Debian Trixie, I am talking about Bookworm) although of course that will make a bit of a Mess in terms of ZFS and you'll need Debian's `zfs-dkms` Package & the entire Build Toolchain for building a Kernel Module (plus the Kernel Headers). I'd try Kernel 6.5.x first if I were you.

Thanks alot for your reply!! Really appreciate it.

No Problem. I'm about as lost as you are. I also had a few Quirks on other Systems and unfortunately there is no easy Way around it.

It doesn't matter how unlikely you think an Issue is, once you went through everything that is "common", whatever is left must be your Issue.

But "common" Issues can be a very very very long list, from bent CPU/Motherboard Pins, to glitchy/faulty PSU, to Transient Phenomenon, to "broken" CPU Cores that trigger in some Circumstances but not all the Time, to quirky Firmware of the Motherboard, NIC, HBA, Hardware Faults in one Component that due to a Firmware Bug in another System instead of failing "gracefully" trigger a complete Breakdown, etc

Kernel Version and ZFS Version if applicable ?

As you can see, I've now downgraded to 6.5 again - as I just had another freeze.

Code:

root@failing-server:~# uname -r

6.5.13-6-pve

root@failing-server:~# modinfo zfs | grep ^version:

version: 2.2.3-pve1

root@failing-server:~# zfs version

zfs-2.2.8-pve1

zfs-kmod-2.2.3-pve1

But you cannot type or do anything with the Keyboard, right ? That in itself is NOT conclusive of a Hardware Freeze though. On my Supermicro Motherboards sometimes I get a KVM Freeze instead, thus I need to reboot the KVM and sometimes even the IPMI/BMC altogether (cold Reset).

That can happen e.g. when I have Network Issues and a flood of Warnings/Errors gets printed to the TTY (and seen via KVM).

Rebooting the KVM & IPMI/BMC can sometimes Help.

Well, it just shows the IP, port and login in the KVM console when it's frozen, nothing else. I once had some issues with the NICs regarding VLANs - so I had to add "offload-rx-vlan-filter off" to the network config - that's how I know what overloading / spamming the KVM console looks like ^^ (I also had a lot of VLANs in the config - just in case I'd need them)

Code:

auto lo

iface lo inet loopback

auto enp68s0f0

iface enp68s0f0 inet manual

#10G PCIE - UPLINK BOND

auto enp68s0f1

iface enp68s0f1 inet manual

#10G PCIE - UPLINK BOND

auto enp129s0f0

iface enp129s0f0 inet static

mtu 9000

#10G PCIE - CEPH - local routing

auto enp129s0f1

iface enp129s0f1 inet static

mtu 9000

#10G PCIE - CEPH - local routing

auto bond0

iface bond0 inet manual

bond-slaves enp68s0f0 enp68s0f1

bond-miimon 100

bond-mode 802.3ad

bond-xmit-hash-policy layer2+3

offload-rx-vlan-filter off

auto vmbr0

iface vmbr0 inet manual

bridge-ports bond0

bridge-stp off

bridge-fd 0

bridge-vlan-aware yes

bridge-vids 100 200 300

offload-rx-vlan-filter off

#VM BRIDGE

auto vmbr0.100

iface vmbr0.100 inet static

address 130.83.167.138/24

gateway 130.83.167.254

offload-rx-vlan-filter off

#ACCESS VLAN 100

#for local routing ceph

post-up /usr/bin/systemctl restart frr.service

(and yes, I know, I'm currently not using VLANs 200 and 300 - at least not in this config)

Do you have monitoring via e.g. Uptime Kuma, Zabbix, Gatus, Monit maybe, ..., of your LAN Connection from another Host ? Just to check that it's not a NIC Issue only. Did you try pinging/monitoring the Host on another NIC as well, to see if it's not "only" just the NIC that failed ? To Mind comes a case with a Mellanox NIC shutting down due to Overtemperature (and there was something in `dmesg` about that). I'm NOT saying it's your Case, but if you only have that NIC monitored via Ping etc, that could be ONE Possibility.

I don't really understand you here - but if it was "just" one NIC (or both NICs on the same network card), then one connection, either CEPH or my UPLINK, would terminate, but not both. So if the UPLINK NIC(s) were gone, I would still have a working CEPH network and could ping through it, but this is not working. Conversely, if the CEPH NIC(s) were gone, the UPLINK would still be working - which is also not the case.

So I assume - it's either both network cards that fail - I can't believe that - or it's something more general.
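The reasoning above (one network down points at a NIC, both down points at the whole host) can be checked from another machine. Below is a minimal sketch, not a finished monitoring setup: the two addresses are placeholders that have to be replaced with the host's real uplink and Ceph IPs, the names `TARGETS`, `is_reachable` and `classify` are made up for this example, and it assumes the Linux iputils `ping` binary is available on the monitoring machine.

```python
import subprocess

# Placeholder addresses (documentation ranges): replace with the real
# uplink and Ceph IPs of the host that keeps freezing.
TARGETS = {
    "uplink": "192.0.2.10",
    "ceph": "198.51.100.10",
}


def is_reachable(address: str, timeout_s: int = 2) -> bool:
    """Send one ICMP echo request using the Linux iputils `ping` binary."""
    result = subprocess.run(
        ["ping", "-c", "1", "-W", str(timeout_s), address],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


def classify(status: dict[str, bool]) -> str:
    """Turn per-network reachability into a rough diagnosis."""
    down = sorted(name for name, ok in status.items() if not ok)
    if not down:
        return "host reachable on all networks"
    if len(down) == len(status):
        return "all networks down: whole host is likely frozen or powered off"
    return "only " + ", ".join(down) + " down: suspect that NIC, cable or switch port"


if __name__ == "__main__":
    status = {name: is_reachable(addr) for name, addr in TARGETS.items()}
    print(status, "->", classify(status))
```

Run from cron or a loop on a second machine, this gives a timestamped hint of whether both networks disappear at the same moment, which is the case described here.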

I'd just like you to be 100% sure that it IS a Hardware Freeze, because in that case:

- You could set up a Watchdog to automatically reboot the System

- There should definitely be some Indication somewhere (if not you'll need to set up & test Serial Debugging using Null Modem Cable and/or Netconsole, but make sure you validate that they are working in "normal Operation" before "counting" on them to log the Error)
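For the Netconsole suggestion, the receiving side can be as simple as a UDP listener on a second machine. The following is only a sketch under assumptions: port 6666 and the file name `netconsole.log` are choices made for this example and must match whatever the sending host's netconsole is configured with, and `format_line` and `serve` are hypothetical helper names. It does not configure netconsole on the Proxmox host itself.

```python
import socket
from datetime import datetime, timezone

LISTEN_PORT = 6666            # assumption: must match the sender's target port
LOG_PATH = "netconsole.log"   # hypothetical output file


def format_line(sender: str, payload: bytes, now: datetime) -> str:
    """Prefix one received datagram with a timestamp and the sender IP."""
    text = payload.decode("utf-8", errors="replace").rstrip("\n")
    return f"{now.isoformat(timespec='seconds')} {sender} {text}"


def serve(port: int = LISTEN_PORT, log_path: str = LOG_PATH) -> None:
    """Receive UDP datagrams forever and append them to the log file."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        with open(log_path, "a", encoding="utf-8") as log:
            while True:
                payload, (sender, _port) = sock.recvfrom(65535)
                now = datetime.now(timezone.utc)
                log.write(format_line(sender, payload, now) + "\n")
                log.flush()  # keep the file current at all times


if __name__ == "__main__":
    serve()
```

To validate it in "normal Operation" as suggested, start the listener first, then generate some kernel message on the sending host and confirm that it shows up in the log file before relying on it to capture the freeze.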

Well, rebooting the server would not be my favorite, as all the VMs on this server would reboot, too... Don't really like that. As

Out of Curiosity, did you try Kernel 6.5.x and see if it crashes/freezes with that ? I agree it's NOT ideal but when you are out of Ideas.

-> now running. will see.

Personally when the Proxmox Kernel failed to boot for whatever Reason, I installed the Debian Stock Backports Kernel (probably NOT needed with PVE 9 / Debian Trixie, I am talking about Bookworm) although of course that will make a bit of a Mess in terms of ZFS and you'll need Debian's zfs-dkms Package & the entire Build Toolchain for building a Kernel Module (plus the Kernel Headers). I'd try Kernel 6.5.x first if I were you.

Already thinking about upgrading to debian 13 / pve 9 - (or going with a complete fresh install of this failing server) - maybe also with some fresh boot SSDs, just to make sure...

No Problem. I'm about as lost as you are. I also had a few Quirks on other Systems and unfortunately there is no easy Way around it.

It doesn't matter how unlikely you think an Issue is, once you went through everything that is "common", whatever is left must be your Issue.

But "common" Issues can be a very very very long list, from bent CPU/Motherboard Pins, to glitchy/faulty PSU, to Transient Phenomena, to "broken" CPU Cores that trigger in some Circumstances but not all the Time, to quirky Firmware of the Motherboard, NIC, HBA, to Hardware Faults in one Component that, due to a Firmware Bug in another System, trigger a complete Breakdown instead of failing "gracefully", etc.

The only complete breakdown currently is myself - lol - so I'm now back to observing what happens with the old kernel.

My next tasks, if this freeze happens again, are to:

- run memtest

- update firmware of HBA

- do some research on / try the scaling-governor in powersave mode (like @MagicHarri did)

- check if hardware is in place / properly mounted

- reinstall server with fresh boot SSDs

- upgrading / reinstall with Debian 13 / PVE 9

- logging via null-modem cable

- BIOS dump and compare with working servers
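For the scaling-governor item, a first step could be to check which governor each host is actually using, so the failing server can be compared with the working ones. This is a minimal sketch that only reads the standard Linux cpufreq sysfs files; `read_governors` and `summarize` are names invented for this example, and it does not change any setting.

```python
from collections import Counter
from pathlib import Path

# Standard Linux sysfs location; can be overridden, e.g. for testing.
CPU_SYSFS = Path("/sys/devices/system/cpu")


def read_governors(base: Path = CPU_SYSFS) -> dict[str, str]:
    """Map each CPU (e.g. 'cpu0') to its current cpufreq governor."""
    governors = {}
    for path in sorted(base.glob("cpu[0-9]*/cpufreq/scaling_governor")):
        # path is <base>/cpuN/cpufreq/scaling_governor, so go two levels up
        governors[path.parent.parent.name] = path.read_text().strip()
    return governors


def summarize(governors: dict[str, str]) -> str:
    """Condense the per-CPU values into one line per host."""
    counts = Counter(governors.values())
    if not counts:
        return "no cpufreq information found"
    return ", ".join(f"{gov} x{n}" for gov, n in sorted(counts.items()))


if __name__ == "__main__":
    print(summarize(read_governors()))
```

Running it on all three hosts and comparing the one-line summaries shows quickly whether the crashing server differs from the others in this respect.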

... still froze today even while I was running kernel 6.5 - so I believe it's not a kernel issue, but rather some hardware problem.

I found that on kernel 6.5 Ceph reported some slow OSDs from time to time on the failing server, but I could not find any correlation between the slow OSDs and any particular system usage. It also seems that it's not always the same OSDs that are slow. I do not see any issues with slow OSDs on the other servers; the SSDs in all 3 servers are the same brand / model and not worn out (SMART says all good). Maybe something with the HBA is wrong?

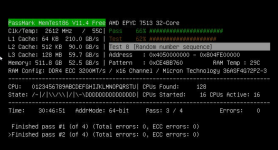

... currently running memtest86 to check the RAM, will sure take some time.

I might still update this thread even if this is not relevant anymore to the kernel issue - but may help other people finding this to make sure it's not the kernel that causes all this.

the next steps will be:

- run memtest (ongoing) / check memtest output

- (physically) check if hardware is in place / properly mounted

- HBA firmware update

- (maybe) logging via null-modem cable

- (maybe) BIOS dump and compare with working servers

steps that will be skipped for now as it's more likely a hardware issue:

- reinstall server with fresh boot SSDs

- upgrading / reinstall with Debian 13 / PVE 9


To close this issue for me - it seems to be the HBA (or something connected to it) that made my system freeze, although I still can't really be 100% sure. After connecting the boot OS drives directly to the mainboard, the freezing of the system seems to have disappeared. I also replaced both boot disks.

Since this change I've had no more freezes (~3.5 weeks without issues?) and only once a slow OSD in Ceph. I will continue to monitor whether only the OSDs connected to the HBA are (sometimes) slow (due to Ceph) or whether it's about the disks.
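Checking whether only the HBA-attached OSDs turn up slow can be done by tallying OSD IDs from saved log lines. The sketch below is an illustration under assumptions: the OSD IDs in `HBA_OSDS` are invented, the sample lines are made up and real Ceph log wording may differ, and the matching is deliberately loose (any line containing "slow" that names `osd.N`).

```python
import re
from collections import Counter

# Hypothetical: IDs of the OSDs whose disks are attached to the HBA.
HBA_OSDS = {3, 4, 5}

OSD_RE = re.compile(r"\bosd\.(\d+)\b")


def count_slow_osds(lines):
    """Count how often each OSD id appears in lines that mention 'slow'."""
    counts = Counter()
    for line in lines:
        if "slow" in line.lower():
            # A set, so a line naming the same OSD twice counts only once.
            for osd_id in {int(m) for m in OSD_RE.findall(line)}:
                counts[osd_id] += 1
    return counts


def split_by_hba(counts, hba_osds=HBA_OSDS):
    """Sum the slow reports for HBA-attached OSDs versus all others."""
    on_hba = sum(n for osd, n in counts.items() if osd in hba_osds)
    return {"hba": on_hba, "other": sum(counts.values()) - on_hba}


if __name__ == "__main__":
    # Made-up sample lines for illustration only.
    sample = [
        "2025-02-01T10:00:00 [WRN] osd.3 has slow ops",
        "2025-02-01T10:05:00 [WRN] osd.4 has slow ops",
        "2025-02-01T11:00:00 [INF] osd.7 marked itself up",
        "2025-02-02T09:30:00 [WRN] osd.7 has slow ops",
    ]
    counts = count_slow_osds(sample)
    print(dict(counts), split_by_hba(counts))
```

If nearly all reports land in the "hba" bucket over a few weeks, that supports the HBA theory; an even spread would point more towards the disks or Ceph itself.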

So, I'm out of here - thanks a lot for your suggestions and support.
