Hello everyone,

After years of service, my Proxmox host gave up on me at exactly the wrong moment: I was about to replace it with a new host (not brand new, but with new storage) and was still building the RAID on the new server.

The failing host is a DL380 G8 (dual Xeon E5-2430L, 64 GB of RAM) with a P822 RAID card and 2 LUNs configured:

20x 900 GB SAS 10K rpm Seagate disks (2 configured as hot spares) in RAID 10 (root LUN, containing the LVM local and local-thin storage, where VMs, ISO images and backups are stored by default)

24x 2 TB SAS 7.2K rpm Seagate disks (2 configured as hot spares) in RAID 6, for pure data storage

The host runs Proxmox 7.4, updated recently (about a week ago).

I was benchmarking the new system to tune its RAID 10 of SSDs for best performance (controller cache, physical drive cache and so on), and I ran the same 4 benchmarks on my old host for comparison, just to see how the new system would perform.

I ran these 4 commands, a read and a write test for each of the 4K and 1M block sizes:

https://pve.proxmox.com/wiki/Benchmarking_Storage

fio --ioengine=libaio --direct=1 --sync=1 --rw=read --bs=4K --numjobs=1 --iodepth=1 --runtime=60 --time_based --name seq_read --filename=/dev/sda

fio --ioengine=libaio --direct=1 --sync=1 --rw=write --bs=4K --numjobs=1 --iodepth=1 --runtime=60 --time_based --name seq_read --filename=/dev/sda

fio --ioengine=libaio --direct=1 --sync=1 --rw=read --bs=1M --numjobs=1 --iodepth=1 --runtime=60 --time_based --name seq_read --filename=/dev/sda

fio --ioengine=libaio --direct=1 --sync=1 --rw=write --bs=1M --numjobs=1 --iodepth=1 --runtime=60 --time_based --name seq_read --filename=/dev/sda
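The four runs above differ only in `--rw` and `--bs`, so they can be expressed as one loop. The sketch below is a dry run: it only prints the command lines and executes nothing. It also flags the write runs, because fio write tests pointed at a raw block device overwrite whatever is stored there, starting from the beginning of the device. The function name `print_fio_matrix`, the job names and the marker text are made up for illustration, and `/dev/sdX` is a placeholder, not a device from this setup.

```shell
#!/bin/sh
# print_fio_matrix TARGET
# Dry run: prints the four fio command lines (read/write x 4K/1M) in the same
# order as above, without executing any of them.
# Write runs are flagged because they overwrite the contents of TARGET.
print_fio_matrix() {
    target=$1
    for bs in 4K 1M; do
        for rw in read write; do
            note=""
            if [ "$rw" = "write" ]; then
                note="   # DESTRUCTIVE: overwrites $target"
            fi
            printf 'fio --ioengine=libaio --direct=1 --sync=1 --rw=%s --bs=%s --numjobs=1 --iodepth=1 --runtime=60 --time_based --name=seq_%s_%s --filename=%s%s\n' \
                "$rw" "$bs" "$rw" "$bs" "$target" "$note"
        done
    done
}

# Placeholder device name; nothing is run, the commands are only printed.
print_fio_matrix /dev/sdX
```

Printing first and running second gives a chance to check which target each line touches. Pointing the write runs at a scratch file or an unused disk instead of a disk in use would be one way to keep them harmless.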

Then I left the old host as it was. Once I had found the best settings on the new host, I decided to move forward with the final installation and move my VMs there.

I wanted to reboot the new host, but selected the wrong tab in my terminal and restarted my old server instead. Not a big deal, you would think... After waiting 10 minutes I went to check, because it was still not available. The server would no longer boot; POST says no boot drive detected.

I went into the hardware RAID controller to check: both volumes are fine, no RAID issues of any kind were detected, and all my disks are still online. I tried setting the logical boot volume to the first LUN again, without success.

Controller Status: OK

Serial Number: PDVTF0BRH8Y1RD

Model: Smart Array P822 Controller

Firmware Version: 8.32

Controller Type: HPE Smart Array

Cache Module Status: OK

Cache Module Serial Number: PBKUD0BRH8V4FT

Cache Module Memory

Logical Drive 01

Status: OK

Capacity: 5029 GiB

Fault Tolerance: RAID 1/RAID 1+0

Logical Drive Type: Data LUN

Encryption Status: Not Encrypted

Logical Drive 02

Status: OK

Capacity: 37259 GiB

Fault Tolerance: RAID 6

Logical Drive Type: Data LUN

Encryption Status: Not Encrypted

If I boot the Proxmox USB installer, the boot LVM is missing, not detected at all:

The only volume detected is the second one, the data storage.

As the volume is not even detected, I don't know where to start.

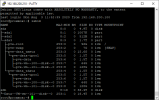

I tried running testdisk, but I'm not sure how relevant this tool is for getting a partition back in this situation. What I can say is that it does see LVM2 volumes on my /dev/sda.
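One read-only way to cross-check what testdisk reports is to look for the LVM2 physical volume label directly. An LVM2 PV carries the ASCII signature `LABELONE` in one of its first four 512-byte sectors (normally the second one). The sketch below reads the start of a device or image file, never writes to it, and prints the byte offset of every signature it finds. The function name `find_lvm_labels` and the default scan size of 16 MiB are assumptions chosen for illustration, and it relies on GNU grep for `-b -o`. Whether a label has survived on this particular disk is unknown.

```shell
#!/bin/sh
# find_lvm_labels TARGET [MIB]
# Reads (never writes) the first MIB mebibytes of TARGET and prints the byte
# offset of each "LABELONE" signature, the magic string of an LVM2 PV label.
find_lvm_labels() {
    target=$1
    mib=${2:-16}
    # dd only reads from TARGET; grep -a treats the binary stream as text,
    # -b prints byte offsets and -o reports each match on its own line.
    dd if="$target" bs=1M count="$mib" 2>/dev/null |
        LC_ALL=C grep -a -b -o 'LABELONE' |
        cut -d: -f1
}
```

Run against the whole disk, for example `find_lvm_labels /dev/sda 2048` as root from a live system, an offset of N would suggest a PV starting at N minus 512 bytes, which can then be compared with the partition start that testdisk proposes.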

Before I do anything further with testdisk, I would appreciate any advice. The backups of all my VMs were on the same host. I know that is pretty stupid, but that is exactly why the new host was coming: to recycle the old one as a Proxmox Backup Server. I run this at home, so I don't have the budget to buy this kind of hardware every day, and I was already using my old host for pretty much everything. Thanks for the help.
