> I can install the just-released stable and go from there instead of the beta

Just double-checked with the just-released PBS stable. Datastore creation works here without issue in both cases: when the datastore contents are deleted during datastore destruction, and when the previous datastore contents are reused with the "reuse datastore" and "overwrite in-use marker" options checked.

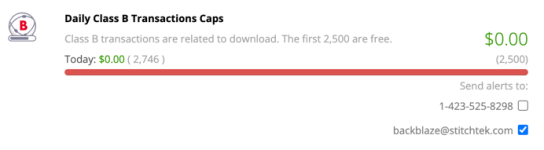

The settings for my Backblaze bucket look the same as yours: keep all versions, no object locking.

What version are you running at the moment? Please post the output of proxmox-backup-manager versions --verbose

Code:

Linux pbs 6.14.8-2-pve #1 SMP PREEMPT_DYNAMIC PMX 6.14.8-2 (2025-07-22T10:04Z) x86_64

The programs included with the Debian GNU/Linux system are free software;
the exact distribution terms for each program are described in the
individual files in /usr/share/doc/*/copyright.

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent
permitted by applicable law.
root@pbs:~# proxmox-backup-manager versions --verbose
proxmox-backup 4.0.0 running kernel: 6.14.8-2-pve
proxmox-backup-server 4.0.11-2 running version: 4.0.11
proxmox-kernel-helper 9.0.3
proxmox-kernel-6.14.8-2-pve-signed 6.14.8-2
proxmox-kernel-6.14 6.14.8-2
ifupdown2 3.3.0-1+pmx9
libjs-extjs 7.0.0-5
proxmox-backup-docs 4.0.11-2
proxmox-backup-client 4.0.11-1
proxmox-mail-forward 1.0.2
proxmox-mini-journalreader 1.6
proxmox-offline-mirror-helper 0.7.0
proxmox-widget-toolkit 5.0.5
pve-xtermjs 5.5.0-2
smartmontools 7.4-pve1
zfsutils-linux 2.3.3-pve1
