Hi,

When using Ceph, removing an RBD volume can take a moment. That's natural, as Ceph has to remove the data.

The problem is: when you run multiple actions at once (whether creating or removing RBD volumes), as happens when you create or destroy a VM, the different API processes each wait to acquire a lock before doing their work.

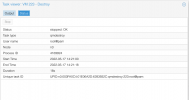

Unfortunately, it happens quite easily (and reproducibly) that a process waits to acquire a cfs lock, receives a timeout for the lock request after a while, and gives up.

So far, the story is still fine (if not nice). But the problem is that the status of this API task actually becomes OK, even though the task did not succeed.

So the API reports the action as successful when it actually was not. That's a problem, because you cannot rely on an OK from the API.
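
To illustrate the behaviour I would expect, here is a minimal sketch in Python. It is not the actual Proxmox code; `LockTimeout`, `run_locked` and `run_task` are names I made up for illustration, and a plain `threading.Lock` stands in for the cfs lock. The point is only that a lock timeout should end up in the task status instead of being swallowed.

```python
import threading


class LockTimeout(Exception):
    """Hypothetical error: the lock was not acquired within the timeout."""


def run_locked(lock, timeout, action):
    # Lock.acquire() returns False when the timeout expires without the lock.
    if not lock.acquire(timeout=timeout):
        raise LockTimeout(f"could not acquire lock within {timeout}s")
    try:
        return action()
    finally:
        lock.release()


def run_task(lock, timeout, action):
    """Return a status string; 'OK' only if the action really ran."""
    try:
        run_locked(lock, timeout, action)
    except Exception as err:
        # A lock timeout (or any other failure) must be visible in the status.
        return f"ERROR: {err}"
    return "OK"
```

With the lock held by someone else, `run_task(lock, 0.05, some_action)` should come back with a status starting with `ERROR:`, and only with `OK` once the lock is free and the action has run.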

It would be very nice if this could be improved.

Thank you & Greetings

Oliver
