Hi,

I have a cluster whose nodes run on different server hardware. I recently added a server installed with Proxmox 9 and upgraded the existing nodes.

The new server has a 25 Gb Intel E810-XXV network card, while the old servers have a 10 Gb Intel X710.

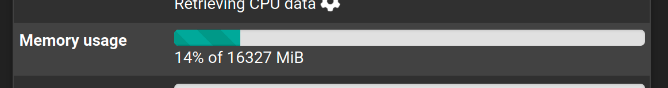

My pfSense 2.8.1 VM runs correctly on the old nodes, with a memory usage of 1.2 GB (30%) reported for the VM and 320 MB (8%) reported inside pfSense.

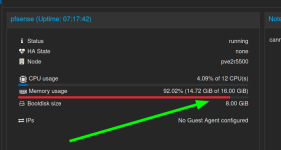

When I migrate the VM to the new node, memory usage climbs to 95 to 99%, and the host crashes after 5 to 6 days. At that point the server no longer responds, so I have to hard-reboot it. The KVM process sits at 100% CPU.

I tried adjusting the VM's hardware settings, but nothing solved the problem. Setting queues=1 on the network cards may have helped slightly (memory at 75%), but usage still increases gradually, and even more so if I simply reboot the VM. Enabling or disabling ballooning makes no difference.

All devices use VirtIO drivers. I focused my tests on the network side because that is the biggest difference from the other nodes, but the cause could be something else.

Other information: before the migration to Proxmox 9, the VM used the E1000 network driver, but I had to switch to VirtIO because otherwise the VM could no longer communicate. I also checked the pfSense prerequisites: https://docs.netgate.com/pfsense/en/latest/recipes/virtualize-proxmox-ve.html

Does anyone have any ideas on how to solve this problem?
