I have some other issues with the 6.2 kernel and hardware. I see people downgraded to the 5.15 kernel. How does one get that on a Proxmox 8 system? This was a clean install, not an upgrade from 7.x.
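One possible route, offered as an assumption rather than something confirmed in this thread: install a 5.15 kernel package (on PVE 8 this is, as far as I know, `pve-kernel-5.15`; check with `apt search pve-kernel-5.15` first), then pin it as the default boot entry with `proxmox-boot-tool kernel pin <version>`. `proxmox-boot-tool kernel unpin` reverts the pin. The hypothetical helper `pin_cmd_for_515` below only works out the exact version string from the vmlinuz-* files in /boot and prints the pin command for review. It does not run that command.

```bash
#!/usr/bin/env bash
# pin_cmd_for_515: print (not run) a proxmox-boot-tool command that pins the
# newest installed 5.15 kernel. Hypothetical helper; assumes kernel images
# are named vmlinuz-<version> in the boot directory (default /boot).
pin_cmd_for_515() {
  local boot_dir="${1:-/boot}" f newest
  newest=$(
    for f in "$boot_dir"/vmlinuz-5.15.*-pve; do
      [ -e "$f" ] && echo "${f##*/vmlinuz-}"   # strip directory and prefix
    done | sort -V | tail -n 1                 # version-aware sort, keep newest
  )
  if [ -z "$newest" ]; then
    echo "no 5.15 kernel found in $boot_dir" >&2
    return 1
  fi
  echo "proxmox-boot-tool kernel pin $newest"
}
```

After installing the package, run `pin_cmd_for_515`, execute the printed command, and reboot. `uname -r` should then report the 5.15 version.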

[SOLVED] Proxmox 8.0 / Kernel 6.2.x 100%CPU issue with Windows Server 2019 VMs

- Thread starter: jens-maus

- Tags: issue, proxmox8, windows2019


Yes, this matches our experience as well. We also don't see issues with VMs that have less memory, fewer vCPUs or fewer CPU sockets assigned. But because we usually run a single W2019 RDS server VM per Proxmox host, using the host's full CPU and RAM capacity (>200GB RAM), the issue is quite prominent. As said earlier, things improved with the mitigations=off approach you suggested (thx!), but today, as users logged in, we could clearly see the issue again. It is less prominent and occurs less often, but a constant ICMP ping against a VM shows that from time to time ping times rise to 10-20 seconds. The noVNC Proxmox console then freezes for a while, and the Proxmox host itself also shows 100% CPU spikes. After some seconds everything returns to normal, but the spikes come back after some time. As said, with mitigations disabled it works better now, but not as smoothly as with kernel 5.15 booted, so there must be something else. Can you please share the VM config of one of your W2019 VMs so that we can compare?
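To quantify the spikes described above instead of watching ping output scroll by, the output can be filtered for slow replies. A minimal sketch, assuming the iputils `ping` shipped with Debian/Proxmox, whose reply lines contain `time=<ms> ms`. The function name `flag_ping_spikes` and its 1000 ms default threshold are hypothetical choices.

```bash
#!/usr/bin/env bash
# flag_ping_spikes: read `ping` output on stdin and print every reply whose
# round-trip time exceeds a threshold in milliseconds (default 1000 ms).
# Hypothetical helper; assumes iputils-style lines such as
#   64 bytes from 10.0.0.5: icmp_seq=12 ttl=128 time=10234 ms
flag_ping_spikes() {
  local threshold_ms="${1:-1000}"
  awk -v limit="$threshold_ms" '
    match($0, /time=[0-9.]+/) {
      rtt = substr($0, RSTART + 5, RLENGTH - 5) + 0   # drop the "time=" prefix
      if (rtt > limit) {
        n++
        printf "spike: %s\n", $0
      }
    }
    END { printf "%d spike(s) above %s ms\n", n + 0, limit }
  '
}
```

For example, `ping -D -c 600 <vm-ip> | flag_ping_spikes 1000` pings for about ten minutes. The `-D` option prefixes each reply with a Unix timestamp, so spikes can be matched against the host's CPU graph. Using `-c` matters because stopping ping with Ctrl-C also interrupts awk, and the summary line is then never printed.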

Here we go:

Code:

root@063-pve-04347:~# cat /etc/pve/qemu-server/6303.conf

agent: 1

bios: ovmf

boot: order=ide2;scsi0

sockets: 2

cores: 24

cpu: host

numa: 1

ide2: none,media=cdrom

machine: pc-i440fx-8.0

memory: 147456

meta: creation-qemu=8.0.2,ctime=1687984201

name: srv-term-mng.domain

net0: virtio=00:50:56:AD:3E:A5,bridge=vmbr0,firewall=1,tag=630

ostype: win10

scsi0: rbd:vm-6303-disk-0,size=1T,ssd=1

scsihw: virtio-scsi-pci

smbios1: uuid=847d250b-c8a0-4a8f-9518-7993adf7e1ff

vmgenid: 32448db6-e370-48bf-bd13-d1b8122ac3ec
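The thread keeps comparing VM sizing, so the fields that matter here (sockets, cores, numa, memory) can be pulled out of a config like the one above with a few lines of awk. A sketch, assuming the `key: value` layout of /etc/pve/qemu-server/<vmid>.conf and the Proxmox defaults of 1 socket, 1 core and numa 0 when a key is absent. Snapshot sections (everything from the first `[name]` header on) are ignored. The name `vm_sizing` is hypothetical.

```bash
#!/usr/bin/env bash
# vm_sizing: summarize CPU/memory sizing of a Proxmox VM config file.
# Hypothetical helper. Reads only the main section (stops at the first
# "[snapshot]" header). memory is stored in MiB and prints 0 if unset.
vm_sizing() {
  awk -F': ' '
    /^\[/ { exit }                      # snapshot sections start with [name]
    $1 == "sockets" { sockets = $2 }
    $1 == "cores"   { cores = $2 }
    $1 == "numa"    { numa = $2 }
    $1 == "memory"  { mem = $2 }
    END {
      if (sockets == "") sockets = 1
      if (cores == "") cores = 1
      if (numa == "") numa = 0
      printf "vCPUs=%d (sockets=%d x cores=%d) numa=%d memory=%.0fGiB\n",
             sockets * cores, sockets, cores, numa, mem / 1024
    }
  ' "$1"
}
```

Run against the config above (`vm_sizing /etc/pve/qemu-server/6303.conf`), it should print `vCPUs=48 (sockets=2 x cores=24) numa=1 memory=144GiB`, i.e. 48 of the host's 56 logical CPUs.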

Code:

root@063-pve-04347:~# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 46 bits physical, 48 bits virtual

Byte Order: Little Endian

CPU(s): 56

On-line CPU(s) list: 0-55

Vendor ID: GenuineIntel

BIOS Vendor ID: Intel

Model name: Intel(R) Xeon(R) CPU E5-2690 v4 @ 2.60GHz

BIOS Model name: Intel(R) Xeon(R) CPU E5-2690 v4 @ 2.60GHz CPU @ 2.6GHz

BIOS CPU family: 179

CPU family: 6

Model: 79

Thread(s) per core: 2

Core(s) per socket: 14

Socket(s): 2

Stepping: 1

CPU(s) scaling MHz: 87%

CPU max MHz: 3500.0000

CPU min MHz: 1200.0000

BogoMIPS: 5200.00

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb cat_l3 cdp_l3 invpcid_single ssbd ibrs ibpb stibp tpr_shadow vnmi flexpriority ept vpid ept_ad fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm cqm rdt_a rdseed adx smap intel_pt xsaveopt cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm ida arat pln pts md_clear flush_l1d

Virtualization features:

Virtualization: VT-x

Caches (sum of all):

L1d: 896 KiB (28 instances)

L1i: 896 KiB (28 instances)

L2: 7 MiB (28 instances)

L3: 70 MiB (2 instances)

NUMA:

NUMA node(s): 2

NUMA node0 CPU(s): 0,2,4,6,8,10,12,14,16,18,20,22,24,26,28,30,32,34,36,38,40,42,44,46,48,50,52,54

NUMA node1 CPU(s): 1,3,5,7,9,11,13,15,17,19,21,23,25,27,29,31,33,35,37,39,41,43,45,47,49,51,53,55

Vulnerabilities:

Itlb multihit: KVM: Vulnerable

L1tf: Mitigation; PTE Inversion; VMX vulnerable

Mds: Vulnerable; SMT vulnerable

Meltdown: Vulnerable

Mmio stale data: Vulnerable

Retbleed: Not affected

Spec store bypass: Vulnerable

Spectre v1: Vulnerable: __user pointer sanitization and usercopy barriers only; no swapgs barriers

Spectre v2: Vulnerable, IBPB: disabled, STIBP: disabled, PBRSB-eIBRS: Not affected

Srbds: Not affected

Tsx async abort: Vulnerable
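The mostly "Vulnerable" list above is what one would expect with mitigations=off in effect. The sketch below checks that the parameter actually reached the running kernel without reading the whole lscpu output. It reads /proc/cmdline and the sysfs directory /sys/devices/system/cpu/vulnerabilities (one file per issue). The paths are parameters only so the function can be tested, and `mitigation_report` is a hypothetical name. Entries containing a capital-V "Vulnerable" (including "KVM: Vulnerable") are counted. Mitigated entries that merely carry an "SMT vulnerable" suffix are not.

```bash
#!/usr/bin/env bash
# mitigation_report: report whether mitigations=off is active on the running
# kernel and how many CPU vulnerability entries are unmitigated.
# Hypothetical helper; arguments default to the standard kernel locations.
mitigation_report() {
  local cmdline_file="${1:-/proc/cmdline}"
  local vuln_dir="${2:-/sys/devices/system/cpu/vulnerabilities}"
  local f total=0 vulnerable=0

  # -w: match mitigations=off as a whole word, not inside another option.
  if grep -qw 'mitigations=off' "$cmdline_file"; then
    echo "cmdline: mitigations=off"
  else
    echo "cmdline: mitigations=off not set"
  fi

  for f in "$vuln_dir"/*; do
    [ -f "$f" ] || continue
    total=$((total + 1))
    # Capital-V "Vulnerable" marks an unmitigated entry ("Vulnerable",
    # "KVM: Vulnerable"); lowercase "SMT vulnerable" suffixes do not match.
    if grep -q 'Vulnerable' "$f"; then
      vulnerable=$((vulnerable + 1))
    fi
  done
  echo "vulnerable: $vulnerable of $total"
}
```

Where the parameter goes depends on the bootloader. On GRUB-booted hosts, add it to GRUB_CMDLINE_LINUX_DEFAULT in /etc/default/grub and run `update-grub`. On hosts booted via systemd-boot, add it to /etc/kernel/cmdline and run `proxmox-boot-tool refresh`. Either way, a reboot is needed.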


On my nodes it is "Y". What does cat /sys/module/kvm_*/parameters/nested show on yours?

If it shows Y, switch it off and test.

Code:

root@063-pve-04347:~# cat /sys/module/kvm_*/parameters/nested

Y

OK, we just tested this, and nested was indeed enabled (it showed Y). However, after we disabled it with

options kvm_intel nested=0

things did not improve. We still see 100% CPU, ICMP ping spikes and temporary noVNC console stalls. The only reliable solution so far seems to be a downgrade to kernel 5.15 again. This definitely needs more investigation, ideally a kernel bisect to identify the exact kernel version after 5.15 where the problem started to appear. Hopefully the Proxmox staff/devs can assist here!
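For reference, a module option like `options kvm_intel nested=0` normally lives in a file under /etc/modprobe.d/. It only takes effect once kvm_intel is reloaded (with all VMs stopped) or the host is rebooted. Until then, /sys/module/kvm_intel/parameters/nested keeps showing the old value. A sketch of both steps, assuming a file name of kvm-intel.conf (any *.conf name should do). `set_kvm_intel_nested` and `current_nested` are hypothetical names, and the directory/file arguments exist only so they can be tested.

```bash
#!/usr/bin/env bash
# set_kvm_intel_nested: persist the kvm_intel "nested" module option.
# Hypothetical helper. $1 = 0 or 1, $2 = modprobe config dir (default
# /etc/modprobe.d). It only writes the file; the loaded module keeps its
# current value until kvm_intel is reloaded or the host reboots.
set_kvm_intel_nested() {
  local value="$1" dir="${2:-/etc/modprobe.d}"
  case "$value" in
    0|1) ;;
    *) echo "usage: set_kvm_intel_nested 0|1 [dir]" >&2; return 2 ;;
  esac
  printf 'options kvm_intel nested=%s\n' "$value" > "$dir/kvm-intel.conf"
}

# current_nested: print the value the loaded module is actually using (Y/N).
current_nested() {
  cat "${1:-/sys/module/kvm_intel/parameters/nested}"
}
```

On the host, run `set_kvm_intel_nested 0`. Then either reboot or, with every VM stopped, run `modprobe -r kvm_intel && modprobe kvm_intel`. Afterwards, `current_nested` should print N.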

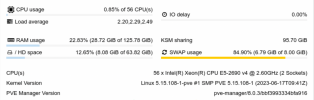

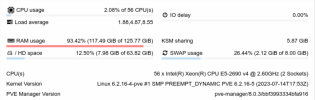

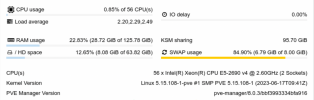

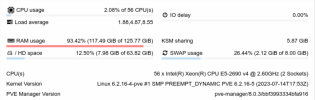

BTW: One further observation when comparing Proxmox 8 with kernel 6.2 vs. kernel 5.15 concerns KSM memory management. Please compare the following two Proxmox host statistics:

Please note that they come from two different (but hardware-wise identical) Proxmox hosts. Each host carries only one Windows 2019 VM, configured with 120GB of RAM and all 56 CPU cores (2 sockets), so both VM configs are essentially the same. The interesting part here, IMHO, is the difference in KSM sharing. The node with kernel 5.15 achieves quite a nice KSM sharing of 95GB of RAM and thus occupies only 29GB of host memory. The other node, with essentially the same VM, collects only 6GB of KSM sharing and thus occupies more than 90% of the host memory. That is the host running kernel 6.2, where the 100% CPU/ICMP ping spikes still happen (because we could not yet reboot it into kernel 5.15 like the other one). So to me it looks like memory management again plays a role in the CPU consumption problem seen since kernel > 5.15. Also note that the node now running kernel 5.15 with high KSM sharing was previously booted with kernel 6.2. At that time it showed essentially the same low KSM sharing as the other one...
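To compare the KSM figures of the two hosts from a shell rather than from the GUI, use the counters the kernel exposes under /sys/kernel/mm/ksm/. According to the kernel's KSM documentation, pages_sharing counts how many additional sites share already-merged pages, i.e. roughly how much memory is saved. The sketch below converts that into GiB, assuming 4 KiB pages unless told otherwise. That this approximates the "KSM sharing" value in the node summary is my assumption. `ksm_summary` is a hypothetical name.

```bash
#!/usr/bin/env bash
# ksm_summary: report KSM state and approximate memory saved.
# Hypothetical helper. $1 = KSM sysfs dir, $2 = page size in bytes.
# pages_sharing ~ number of extra page mappings served by merged pages.
ksm_summary() {
  local dir="${1:-/sys/kernel/mm/ksm}" page_size="${2:-4096}"
  local run sharing
  run=$(cat "$dir/run")                 # 0 = stopped, 1 = running, 2 = unmerged
  sharing=$(cat "$dir/pages_sharing")
  awk -v r="$run" -v p="$sharing" -v ps="$page_size" \
    'BEGIN { printf "run=%s saved=%.1fGiB\n", r, p * ps / 1024 / 1024 / 1024 }'
}
```

Run `ksm_summary /sys/kernel/mm/ksm "$(getconf PAGESIZE)"` on both nodes. If the GUI figure is based on the same counter, the 5.15 node should report something close to the 95GB mentioned above.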



Can you post the output of

grep -H '' /sys/module/kvm/parameters/*

grep -H '' /sys/module/kvm_intel/parameters/*

under kernel 6.2?

See here:

Code:

# grep -H '' /sys/module/kvm/parameters/*

grep -H '' /sys/module/kvm_intel/parameters/*

/sys/module/kvm/parameters/eager_page_split:Y

/sys/module/kvm/parameters/enable_pmu:Y

/sys/module/kvm/parameters/enable_vmware_backdoor:N

/sys/module/kvm/parameters/flush_on_reuse:N

/sys/module/kvm/parameters/force_emulation_prefix:0

/sys/module/kvm/parameters/halt_poll_ns:200000

/sys/module/kvm/parameters/halt_poll_ns_grow:0

/sys/module/kvm/parameters/halt_poll_ns_grow_start:10000

/sys/module/kvm/parameters/halt_poll_ns_shrink:0

/sys/module/kvm/parameters/ignore_msrs:N

/sys/module/kvm/parameters/kvmclock_periodic_sync:Y

/sys/module/kvm/parameters/lapic_timer_advance_ns:-1

/sys/module/kvm/parameters/min_timer_period_us:200

/sys/module/kvm/parameters/mitigate_smt_rsb:N

/sys/module/kvm/parameters/mmio_caching:Y

/sys/module/kvm/parameters/nx_huge_pages:N

/sys/module/kvm/parameters/nx_huge_pages_recovery_period_ms:0

/sys/module/kvm/parameters/nx_huge_pages_recovery_ratio:60

/sys/module/kvm/parameters/pi_inject_timer:0

/sys/module/kvm/parameters/report_ignored_msrs:Y

/sys/module/kvm/parameters/tdp_mmu:Y

/sys/module/kvm/parameters/tsc_tolerance_ppm:250

/sys/module/kvm/parameters/vector_hashing:Y

/sys/module/kvm_intel/parameters/allow_smaller_maxphyaddr:N

/sys/module/kvm_intel/parameters/dump_invalid_vmcs:N

/sys/module/kvm_intel/parameters/emulate_invalid_guest_state:Y

/sys/module/kvm_intel/parameters/enable_apicv:Y

/sys/module/kvm_intel/parameters/enable_ipiv:N

/sys/module/kvm_intel/parameters/enable_shadow_vmcs:Y

/sys/module/kvm_intel/parameters/enlightened_vmcs:N

/sys/module/kvm_intel/parameters/ept:Y

/sys/module/kvm_intel/parameters/eptad:Y

/sys/module/kvm_intel/parameters/error_on_inconsistent_vmcs_config:Y

/sys/module/kvm_intel/parameters/fasteoi:Y

/sys/module/kvm_intel/parameters/flexpriority:Y

/sys/module/kvm_intel/parameters/nested:Y

/sys/module/kvm_intel/parameters/nested_early_check:N

/sys/module/kvm_intel/parameters/ple_gap:128

/sys/module/kvm_intel/parameters/ple_window:4096

/sys/module/kvm_intel/parameters/ple_window_grow:2

/sys/module/kvm_intel/parameters/ple_window_max:4294967295

/sys/module/kvm_intel/parameters/ple_window_shrink:0

/sys/module/kvm_intel/parameters/pml:Y

/sys/module/kvm_intel/parameters/preemption_timer:Y

/sys/module/kvm_intel/parameters/pt_mode:0

/sys/module/kvm_intel/parameters/sgx:N

/sys/module/kvm_intel/parameters/unrestricted_guest:Y

/sys/module/kvm_intel/parameters/vmentry_l1d_flush:never

/sys/module/kvm_intel/parameters/vnmi:Y

/sys/module/kvm_intel/parameters/vpid:Y

That's exactly what we are seeing here as well when trying a fresh install. Can you try to downgrade to kernel 5.15 and see if this improves the situation? In addition, please state which host hardware you are using and what your VM config settings are, especially the number of assigned CPU sockets and cores. In recent tests, it looks like limiting the Windows 2019 VM to a single CPU socket improves VM performance under kernel 6.2 as well. That would explain why @Ramalana did not see any problem, since his host hardware has just a single CPU.
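For anyone who wants to try the single-socket variant mentioned above, `qm set <vmid> --sockets 1 --cores <n>` changes the topology. The change should be applied at the next full stop/start of the VM. The sketch below keeps the total vCPU count and only prints the command so it can be reviewed first. `single_socket_cmd` is a hypothetical name. Whether keeping the total (rather than, say, sizing the VM to one host socket) is the better choice is exactly what is still being tested in this thread.

```bash
#!/usr/bin/env bash
# single_socket_cmd: print (not run) a qm command that collapses a VM's CPU
# topology to one socket while keeping the same total vCPU count.
# Hypothetical helper; $1 = VMID, $2 = current sockets, $3 = current cores.
single_socket_cmd() {
  local vmid="$1" sockets="$2" cores="$3"
  if ! [[ "$vmid" =~ ^[0-9]+$ && "$sockets" =~ ^[0-9]+$ && "$cores" =~ ^[0-9]+$ ]]; then
    echo "usage: single_socket_cmd VMID SOCKETS CORES" >&2
    return 2
  fi
  echo "qm set $vmid --sockets 1 --cores $((sockets * cores))"
}
```

For the VM posted earlier (sockets 2, cores 24), `single_socket_cmd 6303 2 24` prints `qm set 6303 --sockets 1 --cores 48`.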

There is no difference between q35 and i440fx, with the DVD drive on either IDE or SATA.

I cannot use the older kernel; PVE 8 uses 6.2.16-X-pve.

I installed the Windows Server 2019 VM and there was no problem with it at all, but after the PVE 8 updates it has this "lag".

The update replaced some Debian base packages with Proxmox-specific ones (xxx-pmx1):

Code:

$> dpkg --list | grep -i pmx1

ii libnss-myhostname:amd64 252.12-pmx1 amd64 nss module providing fallback resolution for the current hostname

ii libnss-systemd:amd64 252.12-pmx1 amd64 nss module providing dynamic user and group name resolution

ii libpam-systemd:amd64 252.12-pmx1 amd64 system and service manager - PAM module

ii libsystemd-shared:amd64 252.12-pmx1 amd64 systemd shared private library

ii libsystemd0:amd64 252.12-pmx1 amd64 systemd utility library

ii libudev-dev:amd64 252.12-pmx1 amd64 libudev development files

ii libudev1:amd64 252.12-pmx1 amd64 libudev shared library

ii systemd 252.12-pmx1 amd64 system and service manager

ii systemd-sysv 252.12-pmx1 amd64 system and service manager - SysV compatibility symlinks

ii udev 252.12-pmx1 amd64 /dev/ and hotplug management daemon

Code:

HPE ML350p Gen8

CPU(s)

16 x Intel(R) Xeon(R) CPU E5-2667 v2 @ 3.30GHz (2 Sockets)

Kernel Version

Linux 6.2.16-4-pve #1 SMP PREEMPT_DYNAMIC PVE 6.2.16-5 (2023-07-14T17:53Z)

PVE Manager Version

pve-manager/8.0.3/bbf3993334bfa916

Code:

balloon: 0

boot: order=sata0;scsi1

sockets: 1

cores: 4

cpu: kvm64

machine: pc-q35-8.0

memory: 16384

meta: creation-qemu=8.0.2,ctime=1689529605

name: GAMES

net0: e1000=D2:96:4A:B2:F1:A6,bridge=vmbr0

numa: 0

ostype: other

sata0: local:iso/en_windows_server_2019_updated_jan_2021_x64_dvd_5ef22372.iso,media=cdrom,size=5406530K

scsi1: local:3000/vm-3000-disk-0.qcow2,aio=native,backup=0,size=150G

smbios1: uuid=44cacc68-1f15-49a2-a28d-1bc8254e8db6

vmgenid: 138ee4a9-1c44-40bd-b672-3911bb008ca3

vmstatestorage: local

Code:

root@proxmox2:~# uname -a

Linux proxmox2 6.2.16-4-pve #1 SMP PREEMPT_DYNAMIC PVE 6.2.16-5 (2023-07-14T17:53Z) x86_64 GNU/Linux

root@proxmox2:~# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 46 bits physical, 48 bits virtual

Byte Order: Little Endian

CPU(s): 16

On-line CPU(s) list: 0-15

Vendor ID: GenuineIntel

BIOS Vendor ID: Intel

Model name: Intel(R) Xeon(R) CPU E5-2667 v2 @ 3.30GHz

BIOS Model name: Intel(R) Xeon(R) CPU E5-2667 v2 @ 3.30GHz CPU @ 3.3GHz

BIOS CPU family: 179

CPU family: 6

Model: 62

Thread(s) per core: 1

Core(s) per socket: 8

Socket(s): 2

Stepping: 4

CPU(s) scaling MHz: 43%

CPU max MHz: 4000.0000

CPU min MHz: 1200.0000

BogoMIPS: 6584.03

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm cpuid_fault epb pti intel_ppin ssbd ibrs ibpb stibp tpr_shadow vnmi flexpriority ept vpid fsgsbase smep erms xsaveopt dtherm ida arat pln pts md_clear flush_l1d

Virtualization features:

Virtualization: VT-x

Caches (sum of all):

L1d: 512 KiB (16 instances)

L1i: 512 KiB (16 instances)

L2: 4 MiB (16 instances)

L3: 50 MiB (2 instances)

NUMA:

NUMA node(s): 2

NUMA node0 CPU(s): 0-7

NUMA node1 CPU(s): 8-15

Vulnerabilities:

Itlb multihit: KVM: Mitigation: Split huge pages

L1tf: Mitigation; PTE Inversion; VMX conditional cache flushes, SMT disabled

Mds: Mitigation; Clear CPU buffers; SMT disabled

Meltdown: Mitigation; PTI

Mmio stale data: Unknown: No mitigations

Retbleed: Not affected

Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Spectre v2: Mitigation; Retpolines, IBPB conditional, IBRS_FW, RSB filling, PBRSB-eIBRS Not affected

Srbds: Not affected

Tsx async abort: Not affected

root@proxmox2:~# grep -H '' /sys/module/kvm/parameters/*

/sys/module/kvm/parameters/eager_page_split:Y

/sys/module/kvm/parameters/enable_pmu:Y

/sys/module/kvm/parameters/enable_vmware_backdoor:N

/sys/module/kvm/parameters/flush_on_reuse:N

/sys/module/kvm/parameters/force_emulation_prefix:0

/sys/module/kvm/parameters/halt_poll_ns:200000

/sys/module/kvm/parameters/halt_poll_ns_grow:0

/sys/module/kvm/parameters/halt_poll_ns_grow_start:10000

/sys/module/kvm/parameters/halt_poll_ns_shrink:0

/sys/module/kvm/parameters/ignore_msrs:Y

/sys/module/kvm/parameters/kvmclock_periodic_sync:Y

/sys/module/kvm/parameters/lapic_timer_advance_ns:-1

/sys/module/kvm/parameters/min_timer_period_us:200

/sys/module/kvm/parameters/mitigate_smt_rsb:N

/sys/module/kvm/parameters/mmio_caching:Y

/sys/module/kvm/parameters/nx_huge_pages:Y

/sys/module/kvm/parameters/nx_huge_pages_recovery_period_ms:0

/sys/module/kvm/parameters/nx_huge_pages_recovery_ratio:60

/sys/module/kvm/parameters/pi_inject_timer:0

/sys/module/kvm/parameters/report_ignored_msrs:N

/sys/module/kvm/parameters/tdp_mmu:Y

/sys/module/kvm/parameters/tsc_tolerance_ppm:250

/sys/module/kvm/parameters/vector_hashing:Y

root@proxmox2:~# grep -H '' /sys/module/kvm_intel/parameters/*

/sys/module/kvm_intel/parameters/allow_smaller_maxphyaddr:N

/sys/module/kvm_intel/parameters/dump_invalid_vmcs:N

/sys/module/kvm_intel/parameters/emulate_invalid_guest_state:Y

/sys/module/kvm_intel/parameters/enable_apicv:Y

/sys/module/kvm_intel/parameters/enable_ipiv:N

/sys/module/kvm_intel/parameters/enable_shadow_vmcs:N

/sys/module/kvm_intel/parameters/enlightened_vmcs:N

/sys/module/kvm_intel/parameters/ept:Y

/sys/module/kvm_intel/parameters/eptad:N

/sys/module/kvm_intel/parameters/error_on_inconsistent_vmcs_config:Y

/sys/module/kvm_intel/parameters/fasteoi:Y

/sys/module/kvm_intel/parameters/flexpriority:Y

/sys/module/kvm_intel/parameters/nested:Y

/sys/module/kvm_intel/parameters/nested_early_check:N

/sys/module/kvm_intel/parameters/ple_gap:128

/sys/module/kvm_intel/parameters/ple_window:4096

/sys/module/kvm_intel/parameters/ple_window_grow:2

/sys/module/kvm_intel/parameters/ple_window_max:4294967295

/sys/module/kvm_intel/parameters/ple_window_shrink:0

/sys/module/kvm_intel/parameters/pml:N

/sys/module/kvm_intel/parameters/preemption_timer:Y

/sys/module/kvm_intel/parameters/pt_mode:0

/sys/module/kvm_intel/parameters/sgx:N

/sys/module/kvm_intel/parameters/unrestricted_guest:Y

/sys/module/kvm_intel/parameters/vmentry_l1d_flush:cond

/sys/module/kvm_intel/parameters/vnmi:Y

/sys/module/kvm_intel/parameters/vpid:Y
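Comparing the two parameter dumps in this thread by eye is error-prone. The sketch below takes two saved outputs of the grep commands (one file per host) and prints only the parameters whose values differ. `diff_kvm_params` and the file names are hypothetical. Applied to the two dumps here, it should list seven differences:

- kvm: ignore_msrs, nx_huge_pages and report_ignored_msrs
- kvm_intel: enable_shadow_vmcs, eptad, pml and vmentry_l1d_flush

As far as I can tell, nx_huge_pages=N and vmentry_l1d_flush=never follow from mitigations=off on the first host. eptad, pml and shadow VMCS, on the other hand, rely on hardware features the older E5-2667 v2 lacks; its flags line has no ept_ad.

```bash
#!/usr/bin/env bash
# diff_kvm_params: compare two saved outputs of
#   grep -H '' /sys/module/kvm/parameters/* /sys/module/kvm_intel/parameters/*
# and print "module.param: valueA -> valueB" for every parameter that differs.
# Hypothetical helper; lines not starting with /sys/module/ (e.g. shell
# prompts) are skipped, and one-sided parameters show as <missing>.
diff_kvm_params() {
  awk '
    /^\/sys\/module\// {
      i = index($0, ":")                       # split at the first colon only
      path = substr($0, 1, i - 1)
      value = substr($0, i + 1)
      sub(/^\/sys\/module\//, "", path)        # kvm/parameters/nx_huge_pages
      sub(/\/parameters\//, ".", path)         # kvm.nx_huge_pages
      if (FILENAME == ARGV[1]) a[path] = value
      else b[path] = value
      seen[path] = 1
    }
    END {
      for (p in seen) {
        va = (p in a) ? a[p] : "<missing>"
        vb = (p in b) ? b[p] : "<missing>"
        if (va != vb) print p ": " va " -> " vb
      }
    }
  ' "$1" "$2" | LC_ALL=C sort
}
```

For example, run `grep -H '' /sys/module/kvm/parameters/* /sys/module/kvm_intel/parameters/* > host-a.txt` on each host (with a different file name per host), copy the files to one machine, then run `diff_kvm_params host-a.txt host-b.txt`.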


Try with mitigations off and KSM disabled.

@jens-maus have you tried disabling KSM on top of "mitigations=off" before starting the VM?

In my setup there is no memory pressure within the VM, so KSM is in an inactive state.

Haven't tried to disable KSM yet. What is the exact procedure to do that?

When I set up one VM per host, I used to disable KSM on the node following this guide: https://pve.proxmox.com/wiki/Kernel_Samepage_Merging_(KSM)
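For the "exact procedure" question: as I understand the linked wiki page, it comes down to two steps. First, stop the ksmtuned service (`systemctl disable --now ksmtuned`) so it does not re-enable KSM. Second, write 2 to /sys/kernel/mm/ksm/run, which stops KSM and unmerges all shared pages. One caution: unmerging needs enough free host RAM to hold every page that was shared, which on a host like the 5.15 node above could be tens of GB. A sketch of the sysfs step, with the directory as a parameter only for testing. `ksm_disable` is a hypothetical name.

```bash
#!/usr/bin/env bash
# ksm_disable: stop KSM and unmerge all currently merged pages.
# Hypothetical helper. Writing 2 to "run" stops ksmd and unmerges every
# shared page (writing 0 would only stop scanning and keep merged pages).
# On a real Proxmox host, stop ksmtuned first so it does not restart KSM:
#   systemctl disable --now ksmtuned
ksm_disable() {
  local dir="${1:-/sys/kernel/mm/ksm}"
  # The write may take noticeable time to return when many pages are merged.
  echo 2 > "$dir/run" || return 1
  echo "pages_sharing now: $(cat "$dir/pages_sharing")"
}
```

On the host itself, run it as root with no argument. pages_sharing should read 0 once unmerging has finished.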

I don't think this solves the "lag" problem; the same settings work flawlessly with "Windows 2012R2".

E5-xxx v2 doesn't have as many "mitigation problems" as the v3/v4 series.

Links? References?

What links?

Everyone can do a simple "lscpu"...
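Since "a simple lscpu" is what settles this, one way to compare CPUs at a glance is to tally the Vulnerabilities section of lscpu output by status. The sketch below reads lscpu output on stdin. It handles both the current layout and the older one-line "Vulnerability Name: status" form seen in the E3-1245 V2 output further down. It assumes Vulnerabilities is the last section of the output, as in every lscpu dump in this thread, and classifies each entry by the start of its status, so "Mitigation; ...; SMT vulnerable" counts as mitigated. `vuln_tally` is a hypothetical name.

```bash
#!/usr/bin/env bash
# vuln_tally: count CPU vulnerability statuses in `lscpu` output (stdin).
# Hypothetical helper. Handles the "Vulnerabilities:" header layout and the
# older "Vulnerability Name: status" lines; assumes that section comes last.
vuln_tally() {
  awk '
    /^[[:space:]]*$/ { next }
    /^Vulnerabilities:/ { in_section = 1; next }
    /^Vulnerability / || in_section {
      status = $0
      sub(/^[^:]*:[[:space:]]*/, "", status)   # drop the "Name:" prefix
      sub(/^KVM:[[:space:]]*/, "", status)     # itlb_multihit is reported via KVM
      if (status ~ /^Not affected/)    na++
      else if (status ~ /^Mitigation/) mit++
      else if (status ~ /^Vulnerable/) vul++
      else                             other++
    }
    END { printf "not_affected=%d mitigated=%d vulnerable=%d other=%d\n", na, mit, vul, other }
  '
}
```

Running `lscpu | vuln_tally` on each machine gives one comparable line per CPU.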

Sorry for my words.

I mean: what are your "sources" or "experiences" for your assumption that "E5-xxx v2 doesn't have many mitigation problems, unlike the v3/v4 series"?

It's not about your words; I really meant lscpu...

Remember: Spectre v1/v2 is almost impossible to fix, because every CPU that has a cache is affected by it...

Everything else is fixable, I think; at least check my 13th gen. I mean, Intel probably did what they could there?

And for reference, a Ryzen Zen3...

I think I could even find an E5 v2 if I SSH into my company's network and check all the servers, but ugh :-(

- Intel(R) Xeon(R) CPU E3-1275 v5

Code:

Vulnerabilities:

Itlb multihit: KVM: Mitigation: Split huge pages

L1tf: Mitigation; PTE Inversion; VMX conditional cache flushes, SMT vulnerable

Mds: Mitigation; Clear CPU buffers; SMT vulnerable

Meltdown: Mitigation; PTI

Mmio stale data: Mitigation; Clear CPU buffers; SMT vulnerable

Retbleed: Mitigation; IBRS

Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Spectre v2: Mitigation; IBRS, IBPB conditional, STIBP conditional, RSB filling, PBRSB-eIBRS Not affected

Srbds: Mitigation; Microcode

Tsx async abort: Mitigation; TSX disabled

- 13th Gen Intel(R) Core(TM) i3-1315U

Code:

Vulnerabilities:

Itlb multihit: Not affected

L1tf: Not affected

Mds: Not affected

Meltdown: Not affected

Mmio stale data: Not affected

Retbleed: Not affected

Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Spectre v2: Mitigation; Enhanced IBRS, IBPB conditional, RSB filling, PBRSB-eIBRS SW sequence

Srbds: Not affected

Tsx async abort: Not affected

- AMD Ryzen 7 5800X 8-Core Processor

Code:

Vulnerabilities:

Itlb multihit: Not affected

L1tf: Not affected

Mds: Not affected

Meltdown: Not affected

Mmio stale data: Not affected

Retbleed: Not affected

Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Spectre v2: Mitigation; Retpolines, IBPB conditional, IBRS_FW, STIBP always-on, RSB filling, PBRSB-eIBRS Not affected

Srbds: Not affected

Tsx async abort: Not affected

@emunt6

Your E5 v2 lscpu output pls.

@_gabriel

I found a Xeon v2 in my company, but the Linux there is a bit older :-(

However, you are right, emunt6's comment is wrong!

It's affected in the same way as v3/v4/v5; only Retbleed seems unaffected, but I'm not sure, because the ancient lscpu version there doesn't show it.

Intel(R) Xeon(R) CPU E3-1245 V2


Code:

Vulnerability Itlb multihit: KVM: Mitigation: Split huge pages

Vulnerability L1tf: Mitigation; PTE Inversion; VMX conditional cache flushes, SMT vulnerable

Vulnerability Mds: Mitigation; Clear CPU buffers; SMT vulnerable

Vulnerability Meltdown: Mitigation; PTI

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl and seccomp

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Full generic retpoline, IBPB conditional, IBRS_FW, STIBP conditional, RSB filling

Vulnerability Srbds: Vulnerable: No microcode

Vulnerability Tsx async abort: Not affected