I set up a second node and temporarily added it to a cluster. I then removed it following the docs and am trying to log in to it as a standalone node. I can SSH in as root fine, but I can't log in as root under PAM via the console UI. I have tried the standard things, like resetting the password, but it won't take. Any ideas?

Proxmox 7.1 UI Password Fails

- Thread starter Seed


Can you please post the output of pvecm status? On a hunch, I'd think that the cluster isn't yet separated cleanly and thus the node has no quorum.

I ran this on the node that I can't log in to via the UI:

Cluster information

-------------------

Name: proxmox

Config Version: 2

Transport: knet

Secure auth: on

Quorum information

------------------

Date: Tue Dec 7 20:52:13 2021

Quorum provider: corosync_votequorum

Nodes: 1

Node ID: 0x00000002

Ring ID: 2.1d

Quorate: No

Votequorum information

----------------------

Expected votes: 2

Highest expected: 2

Total votes: 1

Quorum: 2 Activity blocked

Flags:

Membership information

----------------------

Nodeid Votes Name

0x00000002 1 192.168.7.241 (local)

My hunch was true: your node still has a cluster configuration and is missing the other node, so it is not quorate, which explains your login issue. The relevant lines from your output:

Highest expected: 2

Total votes: 1

Quorum: 2 Activity blocked

What's the status on the other node that was previously in that cluster?

Cluster information

-------------------

Name: proxmox

Config Version: 3

Transport: knet

Secure auth: on

Quorum information

------------------

Date: Tue Dec 7 22:32:26 2021

Quorum provider: corosync_votequorum

Nodes: 1

Node ID: 0x00000001

Ring ID: 1.d

Quorate: Yes

Votequorum information

----------------------

Expected votes: 1

Highest expected: 1

Total votes: 1

Quorum: 1

Flags: Quorate

Membership information

----------------------

Nodeid Votes Name

0x00000001 1 192.168.7.240 (local)
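For anyone wanting to repeat this check, here is a minimal sketch that reads pvecm status text on standard input and prints the Quorate flag, plus the majority needed for a given number of expected votes. The helper names parse_quorate and votes_needed are made up for illustration and are not Proxmox tools. The majority rule (half the expected votes, rounded down, plus one) is the usual votequorum behaviour and matches both outputs in this thread.

```shell
#!/bin/sh
# Hypothetical helper: print the value of the "Quorate:" line
# from `pvecm status` output supplied on stdin (Yes or No).
parse_quorate() {
    awk '/^Quorate:/ { print $2; exit }'
}

# Hypothetical helper: votes required for a majority,
# given the number of expected votes (floor(n / 2) + 1).
votes_needed() {
    echo $(( $1 / 2 + 1 ))
}

# On a real node you would run something like:
#   pvecm status | parse_quorate
#   votes_needed 2    # a two-node cluster needs both votes
```

With 2 expected votes and only 1 present, the node stays below the required 2 votes, which is why activity is blocked on the first node.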

OK, so that one is quorate and the separation seems to have worked there, but both nodes still have the same cluster name, which is not ideal.

Now, I'd remove the remaining cluster config on the semi-broken node:

Bash:

systemctl stop pve-cluster corosync

# start pmxcfs temporarily in local mode to make it ignore the cluster config

pmxcfs -l

# remove cluster configs

rm -f /etc/pve/corosync.conf /etc/corosync/corosync.conf /etc/corosync/authkey

# stop local mode pmxcfs again

killall pmxcfs

# start it normally and cleanly via its service

systemctl start pve-cluster

With that it should work again, and the node is not in a broken cluster anymore, albeit there may still be some left-over configurations from the other node from the time they were in a cluster.
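If you want to confirm the cleanup took effect, a small check like the following lists any of the three cluster files from the recipe that still exist. This is only a sketch: leftover_cluster_files is a made-up helper name, and the optional root prefix argument exists purely so the function can be tried against a scratch directory instead of a live node.

```shell
#!/bin/sh
# Hypothetical helper: print each cluster config file from the
# recipe above that still exists. The optional first argument is
# a path prefix for testing; leave it empty on a real node.
leftover_cluster_files() {
    root="${1:-}"
    for f in /etc/pve/corosync.conf \
             /etc/corosync/corosync.conf \
             /etc/corosync/authkey; do
        if [ -e "${root}${f}" ]; then
            echo "$f"
        fi
    done
    return 0
}

# On a real node, no output means all three files are gone:
#   leftover_cluster_files
```

Empty output together with a working UI login suggests the node is running standalone again.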

You're the man. Thank you so much for this. Should be tagged or something.

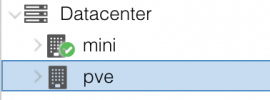

There is one strange artifact however. When logging into the broken node that I ran the above on, it still has some metadata of the other node:

Last edited:

Yeah, that would be the part I described: "albeit there may still be some left-over configurations from the other node from the time they were in a cluster."

Basically, the configuration files from VMs that were on the "pve" node are still there, so the Proxmox VE web-interface needs to map them somewhere; it's just a pseudo entry.

You can remove it by deleting the configs from the other node (be sure you got the correct one), e.g.:

rm -rf /etc/pve/nodes/<NODENAME>

Replace <NODENAME> with the actual node name; in your specific case that'd be pve. After that, reload the web-interface completely.
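Since rm -rf on the wrong directory would delete the local node's own configs, it may help to list the candidates first. The sketch below prints every directory under the nodes folder whose name differs from the local node name. stale_node_dirs is a made-up helper, and it assumes the local node directory is named after the short hostname (hostname -s); double-check that on your system before deleting anything.

```shell
#!/bin/sh
# Hypothetical helper: print node directory names that do not
# belong to the local node.
#   $1: nodes directory (defaults to /etc/pve/nodes)
#   $2: local node name (assumed to be the short hostname)
stale_node_dirs() {
    nodes_dir="${1:-/etc/pve/nodes}"
    local_node="${2:-$(hostname -s)}"
    for d in "$nodes_dir"/*/; do
        [ -d "$d" ] || continue
        name=$(basename "$d")
        if [ "$name" != "$local_node" ]; then
            echo "$name"
        fi
    done
    return 0
}

# On a real node:
#   stale_node_dirs
# Review the names it prints before running rm -rf on any of them.
```

The function only lists names and never deletes anything, so the removal itself stays a deliberate manual step.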

Great. Works a treat. Thank you so much!

THANK YOU! That little recipe is now saved in my notes!