Hello all,

A week ago, over the weekend, I did some maintenance work. For that I had to shut down the two switches for the Proxmox/Ceph cluster one after another. We use the system as HCI for our VMs, which works pretty well, and we are happy with it.

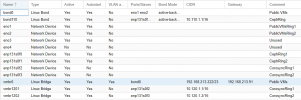

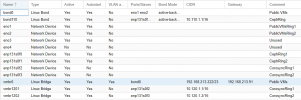

Every server has a connection to both switches. The Ceph NICs (client and cluster traffic together) are configured via a Linux bond (bond110), the Corosync NICs via the Corosync configuration created when joining the cluster, and the public NICs also via a Linux bond (bond0). Ceph, Corosync and public traffic are separated via VLANs.

1) I shut down switch 2, which was no problem, probably because the servers' NICs connected to that switch were the backup (inactive) members of the bonds.

2) I did the maintenance, booted switch 2 again and waited until it had finished starting up. I could see in syslog that the NICs were connected again.

3) I shut down switch 1, and after a while the servers started to reboot.

That surprised me, as I thought this configuration would let me shut down one switch without interruption.

I tried to understand what was going on, but could not find a reason for it. My guess is that Corosync did not get back in sync.

Why did all servers reboot?

I attached the relevant syslog parts. If you need more information, please let me know.

```
Apr 10 16:23:22 pve01 kernel: [262883.826693] i40e 0000:83:00.0 enp131s0f0: NIC Link is Down
Apr 10 16:23:22 pve01 kernel: [262883.828315] i40e 0000:83:00.2 enp131s0f2: NIC Link is Down
Apr 10 16:23:22 pve01 kernel: [262883.915091] bond110: (slave enp131s0f0): link status definitely down, disabling slave
Apr 10 16:23:22 pve01 kernel: [262883.915093] bond110: (slave enp131s0f1): making interface the new active one
Apr 10 16:23:22 pve01 kernel: [262884.327246] igb 0000:06:00.0 eno1: igb: eno1 NIC Link is Down
Apr 10 16:23:22 pve01 kernel: [262884.331177] bond0: (slave eno1): link status definitely down, disabling slave
Apr 10 16:23:22 pve01 kernel: [262884.331179] bond0: (slave eno2): making interface the new active one
Apr 10 16:23:22 pve01 kernel: [262884.331181] device eno1 left promiscuous mode
Apr 10 16:23:22 pve01 kernel: [262884.331263] device eno2 entered promiscuous mode
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] link: host: 5 link: 0 is down
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] link: host: 5 link: 1 is down
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] link: host: 4 link: 0 is down
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] link: host: 4 link: 1 is down
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] link: host: 2 link: 0 is down
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] link: host: 2 link: 1 is down
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] link: host: 3 link: 0 is down
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] link: host: 3 link: 1 is down
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 5 (passive) best link: 0 (pri: 1)
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 5 has no active links
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 5 (passive) best link: 0 (pri: 1)
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 5 has no active links
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 4 (passive) best link: 0 (pri: 1)
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 4 has no active links
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 4 (passive) best link: 0 (pri: 1)
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 4 has no active links
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 2 (passive) best link: 0 (pri: 1)
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 2 has no active links
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 2 (passive) best link: 0 (pri: 1)
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 2 has no active links
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 3 (passive) best link: 0 (pri: 1)
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 3 has no active links
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 3 (passive) best link: 0 (pri: 1)
Apr 10 16:23:23 pve01 corosync[3324]: [KNET ] host: host: 3 has no active links
Apr 10 16:23:23 pve01 kernel: [262884.855437] vmbr1201: port 1(enp131s0f2) entered disabled state
Apr 10 16:23:24 pve01 corosync[3324]: [TOTEM ] Token has not been received in 2212 ms
Apr 10 16:23:24 pve01 corosync[3324]: [TOTEM ] A processor failed, forming new configuration.
Apr 10 16:23:28 pve01 corosync[3324]: [TOTEM ] A new membership (1.166) was formed. Members left: 2 3 4 5
Apr 10 16:23:28 pve01 corosync[3324]: [TOTEM ] Failed to receive the leave message. failed: 2 3 4 5
Apr 10 16:23:28 pve01 corosync[3324]: [QUORUM] This node is within the non-primary component and will NOT provide any services.
Apr 10 16:23:28 pve01 corosync[3324]: [QUORUM] Members[1]: 1
Apr 10 16:23:28 pve01 pmxcfs[3109]: [dcdb] notice: members: 1/3109
Apr 10 16:23:28 pve01 corosync[3324]: [MAIN ] Completed service synchronization, ready to provide service.
```
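To make the syslog excerpt easier to read, here is a minimal Python sketch that summarizes which Corosync knet links are reported down per host and whether the node lost quorum. The function name `summarize`, the regular expression, and the quorum marker string are my own assumptions based on the log lines above; this is not part of any Proxmox or Corosync tooling.

```python
import re
from collections import defaultdict

# Assumed pattern for lines such as:
#   "... corosync[3324]: [KNET ] link: host: 5 link: 0 is down"
LINK_DOWN = re.compile(r"\[KNET\s*\] link: host: (\d+) link: (\d+) is down")

# Assumed marker taken from the [QUORUM] line in the excerpt.
QUORUM_LOST = "non-primary component"


def summarize(lines):
    """Return ({host_id: {link_ids reported down}}, quorum_lost)."""
    down = defaultdict(set)
    quorum_lost = False
    for line in lines:
        match = LINK_DOWN.search(line)
        if match:
            down[int(match.group(1))].add(int(match.group(2)))
        elif QUORUM_LOST in line:
            quorum_lost = True
    return dict(down), quorum_lost


if __name__ == "__main__":
    # Hypothetical path; point it at the saved syslog excerpt.
    with open("syslog-excerpt.txt", encoding="utf-8") as handle:
        links_down, lost = summarize(handle)
    for host, links in sorted(links_down.items()):
        print(f"host {host}: links down {sorted(links)}")
    print("quorum lost:", lost)
```

Run against the excerpt above, this should list links 0 and 1 as down for each of hosts 2 to 5, all reported within the same second, followed by the loss of quorum on pve01.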
