Hi, I get this error every time I try to move a VM disk to another storage. I should mention that all my storage is on NAS devices (physical Synology units) shared via NFS.

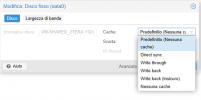

TASK ERROR: storage migration failed: target storage is known to cause issues with aio=io_uring (used by current drive)

Can someone help me? Sorry for my English; I'm translating with Google Translate.
