Hello everyone,

A few days ago I bought three WD80EDAZ-11TA3A0 8 TB hard drives. They run as a ZFS raidz1 pool, and I pass the pool through via a mount point to a container (CT) that shares it over SMB. When I tested the transfer speed, it settled at about 45 MB/s.

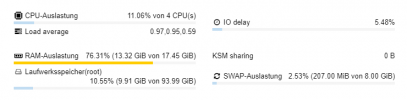
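To see whether the 45 MB/s limit comes from the pool itself or from the SMB/network path, one option is to measure sequential writes directly on the pool, first on the host and then inside the CT on its mount point. Below is a minimal Python sketch of such a test. The function name `write_throughput` and the file name `speedtest.bin` are hypothetical, chosen only for illustration. It writes random data, because a dataset with compression enabled would shrink zero-filled data and overstate the speed, and it calls `fsync` so the timing includes getting the data onto the pool rather than only into RAM.

```python
import os
import time


def write_throughput(path: str, total_mib: int = 1024, chunk_mib: int = 4) -> float:
    """Write total_mib MiB of random data to path and return the rate in MB/s.

    MB/s here means 10**6 bytes per second. The test file is deleted afterwards.
    """
    # Random bytes, so ZFS compression (if enabled) cannot shrink the data.
    chunk = os.urandom(chunk_mib * 2**20)
    n_chunks = total_mib // chunk_mib

    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(n_chunks):
            f.write(chunk)
        f.flush()
        # Force the data out of the page cache so it actually reaches the pool.
        os.fsync(f.fileno())
    elapsed = time.perf_counter() - start

    os.remove(path)
    return n_chunks * len(chunk) / elapsed / 10**6
```

For example, calling `write_throughput("/wd16tb/speedtest.bin")` on the host and the same call on the corresponding path inside the CT would show whether the pool is already slow locally. If both results are well above 45 MB/s, the bottleneck is more likely in the SMB or network side.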

Specs of my Proxmox server:

- CPU: Intel Core i5-4440
- RAM: 18 GB
- 480 GB SSD for VMs
- 3x 8 TB HDD for data

I also have a 120 GB SSD and a 240 GB SSD on hand as spares.

`zpool list`:

```
NAME     SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
ssd480   444G  19.4G   425G        -         -    23%     4%  1.00x    ONLINE  -
wd16tb  21.8T  83.3G  21.7T        -         -     0%     0%  1.00x    ONLINE  -
```

`zpool status`:

```
  pool: ssd480
 state: ONLINE
  scan: scrub repaired 0B in 0 days 00:01:11 with 0 errors on Sun Jan 10 00:25:12 2021
config:

        NAME                                 STATE     READ WRITE CKSUM
        ssd480                               ONLINE       0     0     0
          ata-SATA3_480GB_SSD_2020091901333  ONLINE       0     0     0

errors: No known data errors

  pool: wd16tb
 state: ONLINE
  scan: none requested
config:

        NAME                                   STATE     READ WRITE CKSUM
        wd16tb                                 ONLINE       0     0     0
          raidz1-0                             ONLINE       0     0     0
            ata-WDC_WD80EDAZ-11TA3A0_VGH42L5G  ONLINE       0     0     0
            ata-WDC_WD80EDAZ-11TA3A0_VGH4P9WG  ONLINE       0     0     0
            ata-WDC_WD80EDAZ-11TA3A0_VGH4ZT3G  ONLINE       0     0     0
```

`zfs list`:

```
NAME                       USED  AVAIL  REFER  MOUNTPOINT
ssd480                    56.4G   374G    24K  /ssd480
ssd480/subvol-101-disk-0  1.54G  48.5G  1.54G  /ssd480/subvol-101-disk-0
ssd480/subvol-103-disk-0  3.26G  21.7G  3.26G  /ssd480/subvol-103-disk-0
ssd480/vm-104-disk-0         3M   374G    12K  -
ssd480/vm-104-disk-1      51.6G   411G  14.5G  -
wd16tb                    55.5G  14.0T  55.5G  /wd16tb
```
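For reference, the sizes above fit a 3-disk raidz1: `zpool list` reports raw capacity with parity included, while `zfs list` reports usable space. The Python sketch below shows the arithmetic. It assumes the nominal drive size of 8 TB = 8 × 10^12 bytes, and the helper names `drive_tib` and `raidz_sizes` are made up for illustration. ZFS prints sizes in binary units, so "T" means TiB.

```python
from typing import Tuple

TIB = 2**40  # ZFS tools print binary units: "T" = TiB


def drive_tib(tb: float) -> float:
    """Convert a nominal (decimal) terabyte drive size to TiB."""
    return tb * 10**12 / TIB


def raidz_sizes(n_disks: int, disk_tb: float, parity: int = 1) -> Tuple[float, float]:
    """Return (raw_tib, usable_tib_estimate) for a single raidz vdev.

    raw_tib corresponds to SIZE in `zpool list` (parity included).
    usable_tib_estimate is the data share (n - parity) / n, before any
    ZFS reservations or metadata overhead are subtracted.
    """
    raw = n_disks * drive_tib(disk_tb)
    usable = raw * (n_disks - parity) / n_disks
    return raw, usable


raw, usable = raidz_sizes(3, 8)
print(f"raw: {raw:.2f} TiB, usable estimate: {usable:.2f} TiB")

# ALLOC in `zpool list` (83.3G) counts parity; USED in `zfs list` (55.5G) does not.
print(f"ALLOC/USED ratio: {83.3 / 55.5:.2f}")  # 3 disks / 2 data disks = 1.5
```

The raw figure of about 21.83 TiB matches the 21.8T SIZE of `wd16tb`. The usable estimate of about 14.55 TiB is somewhat above the 14.0T AVAIL plus 55.5G USED; ZFS holds back part of the pool (for example its slop space reservation) and has some metadata overhead, so a lower figure is expected.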