I hate to necro a thread like this, but I'm also curious what ended up happening for everyone here.

When I set up my current cluster I thought I'd try out Ceph, and I've been impressed by a lot of its capabilities.

The performance, on the other hand, is downright abysmal, and I'm struggling to figure out why.

Granted, my setup is a bit unusual since I'm using 40Gb InfiniBand between the nodes, but even so iperf tests reach 11-13 Gbps.
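For scale, here's the arithmetic converting those iperf numbers into the MB/s units rados bench reports. It's a rough sketch that assumes decimal units and ignores protocol overhead, so treat the results as ceilings rather than measurements.

```python
# Rough unit conversion: network throughput (Gbit/s) -> MB/s.
# Assumes decimal units (1 Gbit = 1e9 bits, 1 MB = 1e6 bytes) and ignores
# protocol overhead, so these are upper bounds, not measurements.

def gbps_to_mbytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to megabytes per second."""
    return gbps * 1e9 / 8 / 1e6

iperf_low, iperf_high = 11, 13   # Gbit/s, from the iperf runs
write_low, write_high = 50, 90   # MB/s, from the rados bench write runs

ceiling_low = gbps_to_mbytes_per_s(iperf_low)
ceiling_high = gbps_to_mbytes_per_s(iperf_high)
print(f"iperf ceiling: {ceiling_low:.0f}-{ceiling_high:.0f} MB/s")
print(f"best write uses {write_high / ceiling_low:.1%} of the slowest iperf result")
```

In other words, even the best write result uses only a few percent of what iperf says the link can carry.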

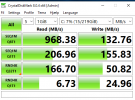

Regardless, rados bench shows write speeds of only 50-90 MB/s on both replicated and erasure-coded pools.

Meanwhile, reads reach 350-430 MB/s sequential and 400-500 MB/s random.
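For anyone trying to reproduce this: rados bench's `seq` and `rand` modes read back the objects left behind by an earlier write run, so the write step needs `--no-cleanup`, and a separate `rados -p <pool> cleanup` removes the objects afterwards. The sketch below only builds the command lines. The pool name `testbench` is a placeholder, and the 16 threads and 4 MiB block size are what I understand the rados bench defaults to be.

```python
import subprocess

# Hypothetical helper that builds the usual rados bench sequence.
# seq and rand read back objects written by the write step, so the
# write step keeps them with --no-cleanup; cleanup runs last.

def bench_commands(pool: str, seconds: int = 60, threads: int = 16,
                   block_size: int = 4 * 1024 * 1024) -> list[list[str]]:
    base = ["rados", "bench", "-p", pool, str(seconds)]
    return [
        base + ["write", "-b", str(block_size), "-t", str(threads), "--no-cleanup"],
        base + ["seq", "-t", str(threads)],
        base + ["rand", "-t", str(threads)],
        ["rados", "-p", pool, "cleanup"],
    ]

def run_all(pool: str, **kwargs) -> None:
    """Run each command in order, stopping on the first failure."""
    for cmd in bench_commands(pool, **kwargs):
        print("$", " ".join(cmd))
        subprocess.run(cmd, check=True)

if __name__ == "__main__":
    # Print the commands only; call run_all("testbench") to execute them.
    for cmd in bench_commands("testbench"):
        print(" ".join(cmd))
```

One caveat: objects read back moments after being written may be partly served from OSD caches, which can make read numbers look better than a cold read would.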

I've even tried enabling RDMA for Ceph, because qperf shows 5-6 GB/s transfers, far more than iperf manages. Sadly, this has done absolutely nothing, so I'm not sure whether I configured it wrong or whether it's simply useless here.
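One thing worth ruling out is that the RDMA setting never actually took effect. As far as I know, Ceph selects its messenger with the `ms_type` option (or `ms_public_type` / `ms_cluster_type` to change only one network), with `async+rdma` selecting RDMA and `ms_async_rdma_device_name` naming the HCA, and RDMA support in the async messenger has been considered experimental. The running value can be checked on an OSD host with `ceph daemon osd.0 config get ms_type`. Below is a minimal sketch that scans a ceph.conf for those settings; the sample config and the device name `mlx4_0` are illustrative only, and configparser may reject unusual ceph.conf syntax.

```python
import configparser

# Hypothetical check: does a ceph.conf select the RDMA messenger anywhere?
# Ceph treats spaces and underscores in option names as equivalent, so
# names are normalized before comparing. SAMPLE_CONF is illustrative only;
# substitute the contents of your own ceph.conf.

SAMPLE_CONF = """
[global]
ms type = async+posix
ms_cluster_type = async+rdma
ms_async_rdma_device_name = mlx4_0
"""

MESSENGER_KEYS = ("ms_type", "ms_public_type", "ms_cluster_type")

def messenger_settings(conf_text: str) -> dict[str, str]:
    """Collect messenger and RDMA options as {"section/option": value}."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    found = {}
    for section in parser.sections():
        for key, value in parser.items(section):
            name = key.strip().replace(" ", "_")
            if name in MESSENGER_KEYS or name.startswith("ms_async_rdma"):
                found[f"{section}/{name}"] = value.strip()
    return found

def uses_rdma(settings: dict[str, str]) -> bool:
    """True if any messenger type option ends in +rdma."""
    return any(v.endswith("+rdma") for v in settings.values())

settings = messenger_settings(SAMPLE_CONF)
print(settings)
print("RDMA selected:", uses_rdma(settings))
```

Even if the file looks right, the daemons only pick up a messenger change on restart, so the admin-socket check above is the more reliable answer.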

Ping times between all of my nodes are under 1 ms, and the awful performance shows up even when the pool is completely idle.

I wondered whether the slow writes might somehow be related to the network the traffic goes over, but logically that makes no sense to me when reads over the same network are up to 10 times faster.
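One difference between the two paths: in a replicated pool the primary OSD forwards every write to the other replicas and acknowledges the client only after they have all committed, while a read is served by the primary alone. So writes do generate more network traffic, but a back-of-envelope estimate suggests it's nowhere near enough to fill the link. The pool layouts below (size 3 replicated, EC k=2, m=1) are assumptions, since the actual settings aren't stated above. If the estimate holds, the extra round trip and waiting on the slowest replica's disk seem a more plausible suspect than raw bandwidth.

```python
# Back-of-envelope network traffic generated by client writes.
# Assumed pool layouts (not given in the post): replicated size=3 and an
# erasure-coded profile with k=2, m=1. Adjust to match your pools.

def replicated_network_mb(client_mb: float, size: int) -> dict[str, float]:
    # Client -> primary OSD on the public network; the primary then sends a
    # full copy to each of the other (size - 1) replicas on the cluster network.
    return {"public": client_mb, "cluster": client_mb * (size - 1)}

def ec_network_mb(client_mb: float, k: int, m: int) -> dict[str, float]:
    # The primary encodes k data + m coding chunks of client_mb / k each and
    # sends every chunk except its own to the other OSDs.
    chunk = client_mb / k
    return {"public": client_mb, "cluster": chunk * (k + m - 1)}

write_mb_s = 90.0   # best observed rados bench write rate
link_mb_s = 1375.0  # 11 Gbit/s (lowest iperf result) expressed in MB/s

for name, traffic in [("replicated x3", replicated_network_mb(write_mb_s, 3)),
                      ("EC 2+1", ec_network_mb(write_mb_s, 2, 1))]:
    total = sum(traffic.values())
    # Pessimistic: pretend all of this traffic crosses a single link.
    print(f"{name}: {total:.0f} MB/s total, {total / link_mb_s:.0%} of one link")
```

Summing everything onto one link deliberately overstates the load, since in practice the traffic is spread across several hosts.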

I'm not really sure where to look, but when I run the rados write benchmark I see a max latency of 4 and an average of 0.9.

I'm not sure what to make of that. The (s) in the output suggests the unit is seconds, but it seems like I'd have a much bigger problem if it were really that bad.
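As far as I can tell those figures really are seconds, and they're consistent with the bandwidth. rados bench keeps a fixed number of writes in flight, so by Little's law throughput is roughly in-flight ops times op size divided by average latency. The sketch below assumes the rados bench defaults of 16 concurrent ops and 4 MiB objects; if other `-t` or `-b` values were used, substitute them.

```python
# Little's law for a closed-loop benchmark: with a fixed number of
# operations always in flight, throughput = in_flight * op_size / latency.
# Assumes rados bench defaults of 16 concurrent ops and 4 MiB objects.

MIB = 1024 * 1024

def expected_throughput_mib_s(in_flight: int, op_size_bytes: int,
                              avg_latency_s: float) -> float:
    """Throughput implied by a given average per-op latency."""
    return in_flight * op_size_bytes / avg_latency_s / MIB

def implied_latency_s(in_flight: int, op_size_bytes: int,
                      throughput_mib_s: float) -> float:
    """Average per-op latency implied by a given throughput."""
    return in_flight * op_size_bytes / (throughput_mib_s * MIB)

# 16 * 4 MiB / 0.9 s is about 71 MiB/s, inside the observed 50-90 range.
print(f"{expected_throughput_mib_s(16, 4 * MIB, 0.9):.1f} MiB/s")

ping_s = 0.001  # upper bound on the network round trip, from ping
print(f"network RTT is at most {ping_s / 0.9:.2%} of each write's latency")
```

If that reading is right, each 4 MiB write is spending most of its ~0.9 s somewhere other than the wire, which points the investigation at the OSDs rather than the network.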

Did anyone ever narrow down what was happening, or does anyone have a good diagnostic path to follow? I'm getting sick of fighting with Ceph just to make it faster than my 60GB laptop drive from 2003.
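One possible starting point: because a replicated write completes only when the slowest replica commits, a single slow disk can hold back every write that lands on it. `ceph osd perf` lists per-OSD commit and apply latency, and `ceph tell osd.N bench` runs a write benchmark against a single OSD. Here's a minimal sketch that flags OSDs far above the median latency. The latency values are made up for illustration (they are not from this cluster), and the 5x factor and 20 ms floor are arbitrary thresholds.

```python
from statistics import median

# Hypothetical per-OSD commit latencies in ms, as you might copy them from
# the commit_latency(ms) column of `ceph osd perf`. Made-up values.
commit_latency_ms = {0: 4, 1: 5, 2: 3, 3: 180, 4: 6, 5: 4}

def slow_osds(latencies: dict[int, float], factor: float = 5.0,
              floor_ms: float = 20.0) -> list[int]:
    """Return OSD ids whose latency exceeds both factor x median and floor_ms."""
    mid = median(latencies.values())
    threshold = max(mid * factor, floor_ms)
    return sorted(osd for osd, ms in latencies.items() if ms > threshold)

print(slow_osds(commit_latency_ms))  # flags OSD 3 in this made-up data
```

Any OSD this flags would be a good candidate for `ceph tell osd.N bench` to confirm whether the disk itself is slow.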