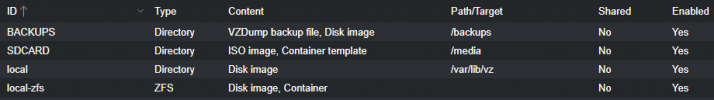

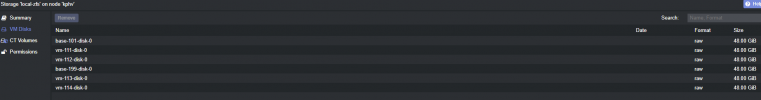

My local-zfs storage is on a 1 TB SSD, and I can't explain why its Total size keeps shrinking. How can that be? I assumed the total size would always stay the same.

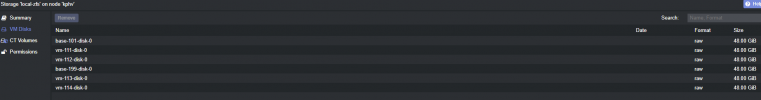

There are only 6 VM disks on it, and the used size looks consistent, since none of the disks is anywhere near full.

I run nightly backups to a separate backup storage (a 1 TB external HDD), so backups can't be the cause.

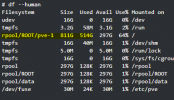

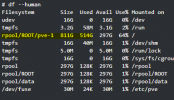

However, I notice that rpool/ROOT/pve-1 takes up 514 GB. That is huge, and I can't work out what is causing it.

Digging a bit further, I found that the local storage is growing by the same amount, even though it is not a backup destination either.

Any help would be welcome.

Thanks
