Hello, I apologize in advance if this has already been covered somewhere. I have been looking for a solution for several days, on this forum as well. It is probably a stupidly simple problem, but I cannot find a solution anywhere. Sorry for that.

I have proxmox ve 6.3 installed on debian 10.7 and I have an additional sda4 partition mounted to Debian

And I would like to have it in the Debian VM as well, but I can't make it there at all

I talk about partition:

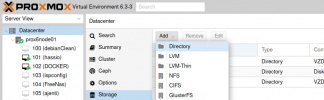

Where can I find proxmox in the GUI or anywhere else, to mount this missing partition?

Best regards to everyone!

I have proxmox ve 6.3 installed on debian 10.7 and I have an additional sda4 partition mounted to Debian

Code:

root @ prox6node01: lsblk

├─sda1 8: 1 0 512M 0 part / boot / efi

├─sda2 8: 2 0 490.8G 0 part /

├─sda4 8: 4 0 378G 0 part / media / DATA

└─sda5 8: 5 0 25G 0 part [SWAP]And I would like to have it in the Debian VM as well, but I can't make it there at all

Code:

root @ debian: ~ # lsblk

sda 8: 0 0 32G 0 disk

├─sda1 8: 1 0 31G 0 part /

├─sda2 8: 2 0 1C 0 part

└─sda5 8: 5 0 975M 0 part [SWAP]

sr0 11: 0 1 694M 0 romI talk about partition:

Code:

sda4 8: 4 0 378G 0

part / media / DATABest regards to everyone!
