Hello,

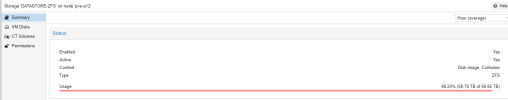

I have a strange problem on one of our servers that I think is related to the ZFS volume.

Running du or ncdu on this server to scan the files is extremely slow: it takes about 30 to 40 minutes per TB. Writes do not seem to be affected.

Before each backup, our backup client first determines the size of the storage vault using du, so this step now takes far too long.

I just added a temporary disk to the same server on the local datastore and copied a 30GB folder to it. When I run the command there, it is very fast.

Here's the setup.

- Host: PVE-A12
- CPU: 32 x Intel(R) Xeon(R) Gold 6134 CPU @ 3.20GHz (2 sockets)
- RAM: 192GB
- HDDs: 6 x WD DC HC550 18TB SAS 12Gbps 3.5"
- SSDs: 2 x HP 1.92TB Enterprise SSD SATA 6G (P/N: 838403-005)
- OS: 2 x Samsung 250GB M.2 SATA SSD

ZFS config:

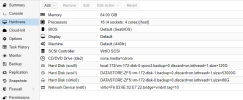

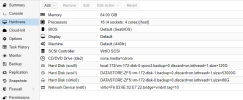

A single VM runs on this host, running Debian 12.

I noticed that I had originally created the disk as a VirtIO disk, so I converted it to SCSI (scsi1), but unfortunately that made no difference.

If I run du on scsi0, I get all the files and the total size of 40GB back within 4 seconds. If I move scsi0 to DATASTORE-ZFS, it also takes tens of minutes.
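To compare the two disks with something more repeatable than timing du by hand, a small script that walks the tree the way du does (one `lstat` per entry, no file contents read) and reports entries per second may help. This is a hypothetical helper, not part of any tool mentioned above, and it assumes Python 3 is available in the Debian VM. Since du's cost comes from metadata lookups rather than data reads, entries per second is probably the more useful number to compare between scsi0 and the disk on DATASTORE-ZFS.

```python
import os
import sys
import time


def du_walk(root):
    """Roughly approximate `du -s`: sum allocated bytes and count entries under root.

    Uses lstat, so symlinks are not followed. Hard-linked files are counted
    once for the byte total (as du does by default), but every entry is
    counted in the entry total. The root directory itself is not counted.
    """
    seen = set()
    total_bytes = 0
    entries = 0
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.lstat(path)
            except OSError:
                continue  # entry vanished or permission denied
            entries += 1
            key = (st.st_dev, st.st_ino)
            if key in seen:
                continue  # hard link already accounted for
            seen.add(key)
            # On Linux st_blocks is in 512-byte units, which is what du sums
            total_bytes += st.st_blocks * 512
    return total_bytes, entries


def main():
    # Hypothetical usage: python3 du_walk.py /path/to/mountpoint
    root = sys.argv[1] if len(sys.argv) > 1 else "."
    start = time.monotonic()
    total, entries = du_walk(root)
    elapsed = time.monotonic() - start
    print(f"{root}: {total / 1024**3:.2f} GiB, {entries} entries, {elapsed:.1f} s")
    if elapsed > 0:
        print(f"{entries / elapsed:.0f} entries/s")


if __name__ == "__main__":
    main()
```

Running it once against a directory on scsi0 and once against the same data on DATASTORE-ZFS gives two directly comparable entries-per-second figures.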

We have several Debian Linux servers on different types of hardware, and du is fast on all of them, even on very old hardware with a hardware RAID controller and slow HDDs in RAID5. None of them is anywhere near this slow.

The only difference I can think of compared with our other servers is the special device on SSDs, so I suspect something in that configuration is not right. I have tried all kinds of options, unfortunately without success.

The ZFS parameters are in the attached txt file.

Does anybody have an idea?
