Hi all,

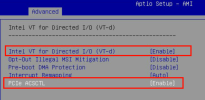

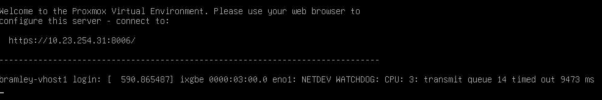

First of all, system info:

Bash:

root@vhost1:~# pveversion

pve-manager/8.2.4/faa83925c9641325 (running kernel: 6.8.8-1-pve)

root@bramley-vhost1:~# cat /proc/cpuinfo | grep Xeon | uniq

model name : Intel(R) Xeon(R) Silver 4410Y

root@vhost1:~# dmidecode -t baseboard | grep -A2 Base\ Board\ Info

Base Board Information

Manufacturer: Supermicro

Product Name: X13SEI-TF

root@vhost1:~# free -h

total used free shared buff/cache available

Mem: 125Gi 8.2Gi 117Gi 61Mi 913Mi 117Gi

Swap: 4.5Gi 0B 4.5Gi

root@vhost1:~# dmesg | grep efi

[ 0.000000] efi: EFI v2.9 by American Megatrends

[ 0.000000] efi: ACPI=0x73a8f000 ACPI 2.0=0x73a8f014 SMBIOS=0x76dd9000 SMBIOS 3.0=0x76dd8000 MEMATTR=0x67847018 ESRT=0x5bc6a498 MOKvar=0x76eb9000

[ 0.000000] efi: Remove mem605: MMIO range=[0x80000000-0x8fffffff] (256MB) from e820 map

[ 0.000000] efi: Not removing mem606: MMIO range=[0xfe010000-0xfe010fff] (4KB) from e820 map

[ 0.000000] efi: Remove mem607: MMIO range=[0xff000000-0xffffffff] (16MB) from e820 map

[ 0.197144] clocksource: refined-jiffies: mask: 0xffffffff max_cycles: 0xffffffff, max_idle_ns: 1910969940391419 ns

[ 1.798381] efivars: Registered efivars operations

root@vhost1:~# dmidecode -t bios | grep -iE 'vendor|version'

# SMBIOS implementations newer than version 3.5.0 are not

# fully supported by this version of dmidecode.

Vendor: American Megatrends International, LLC.

Version: 2.1

I am trying to pass through an ATTO SAS card to my node. The card connects to a Superloader and a tape drive, and I can clearly see both from PVE:

Bash:

root@vhost1:~# ls -l /dev/tape/by-id/

total 0

lrwxrwxrwx 1 root root 9 Jul 5 21:55 scsi-10WT115885 -> ../../st0

lrwxrwxrwx 1 root root 10 Jul 5 21:55 scsi-10WT115885-nst -> ../../nst0

lrwxrwxrwx 1 root root 9 Jul 5 21:55 scsi-350050763121bbf47 -> ../../st0

lrwxrwxrwx 1 root root 10 Jul 5 21:55 scsi-350050763121bbf47-nst -> ../../nst0

lrwxrwxrwx 1 root root 9 Jul 5 21:55 scsi-3500e09efff11005e -> ../../sg3

lrwxrwxrwx 1 root root 9 Jul 5 21:55 scsi-3500e09efff11005e-changer -> ../../sg3

lrwxrwxrwx 1 root root 9 Jul 5 21:55 scsi-CJ1LBE0005 -> ../../sg3

root@vhost1:~# dmesg | grep -iE 'st0|sg3'

[ 2.437043] scsi host0: ahci

[ 6.123863] scsi 14:0:0:1: Attached scsi generic sg3 type 8

[ 6.430025] st 14:0:0:0: Attached scsi tape st0

[ 6.430027] st 14:0:0:0: st0: try direct i/o: yes (alignment 4 B)

root@vhost1:~# lsscsi | grep 14:0:0

[14:0:0:0] tape IBM ULTRIUM-HH8 MA71 /dev/st0

[14:0:0:1] mediumx QUANTUM UHDL 0100 /dev/sch0

root@vhost1:~# lspci | grep -i atto

17:00.0 Serial Attached SCSI controller: ATTO Technology, Inc. ExpressSAS 6Gb/s SAS/SATA HBA

cat /sys/bus/pci/devices/0000\:17\:00.0/reset_method

bus

I followed the wiki (and have done this before with GPUs on a different system), so:

Bash:

root@vhost1:~# grep -i default /etc/default/grub

GRUB_DEFAULT=0

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt"

root@vhost1:~# grep vfio /etc/modules

vfio

vfio_iommu_type1

vfio_pci

vfio_virqfd

root@vhost1:~# lsmod | grep vfio

vfio_pci 16384 0

vfio_pci_core 86016 1 vfio_pci

irqbypass 12288 105 vfio_pci_core,kvm

vfio_iommu_type1 49152 0

vfio 69632 3 vfio_pci_core,vfio_iommu_type1,vfio_pci

iommufd 98304 1 vfio

root@vhost1:~# dmesg | grep -iE 'DMAR|IOMMU|AMD-Vi' | head

[ 0.000000] Command line: BOOT_IMAGE=/boot/vmlinuz-6.8.8-1-pve root=/dev/mapper/pve-root ro quiet console=tty0 console=ttyS0,115200 quiet intel_iommu=on iommu=pt

[ 0.021167] ACPI: DMAR 0x00000000700B1000 00023C (v01 SUPERM SMCI--MB 00000001 INTL 20091013)

[ 0.021241] ACPI: Reserving DMAR table memory at [mem 0x700b1000-0x700b123b]

[ 0.198163] Kernel command line: BOOT_IMAGE=/boot/vmlinuz-6.8.8-1-pve root=/dev/mapper/pve-root ro quiet console=tty0 console=ttyS0,115200 quiet intel_iommu=on iommu=pt

[ 0.198277] DMAR: IOMMU enabled

[ 0.506798] DMAR: Host address width 46

[ 0.506799] DMAR: DRHD base: 0x000000a9ffc000 flags: 0x0

[ 0.506807] DMAR: dmar0: reg_base_addr a9ffc000 ver 6:0 cap 9ed008c40780466 ecap 3ee9e86f050df

[ 0.506811] DMAR: DRHD base: 0x000000be3fc000 flags: 0x0

[ 0.506815] DMAR: dmar1: reg_base_addr be3fc000 ver 6:0 cap 9ed008c40780466 ecap 3ee9e86f050df

When the system loads it briefly shows some information on the card:

Here is the VM config. I have already tried with and without allfunctions and with/without ROM-bar. I also spun up a brand-new VM with OVMF and q35, with the same result.

Bash:

root@vhost1:~# qm config 302

agent: 1

boot: order=scsi0;ide2;net0

cores: 4

cpu: host

hostpci0: 0000:17:00

ide2: ISOs:iso/debian-12.5.0-amd64-netinst.iso,media=cdrom,size=629M

machine: q35

memory: 4096

meta: creation-qemu=8.1.5,ctime=1713799457

name: tape

net0: virtio=BC:24:11:71:26:71,bridge=vmbr0

numa: 0

onboot: 0

ostype: l26

parent: beforebareos

scsi0: SSD:vm-302-disk-0,cache=writeback,discard=on,iothread=1,size=32G

scsihw: virtio-scsi-single

serial0: socket

smbios1: uuid=57a71338-fc4b-4bed-a03e-f6bc44dcfecd

sockets: 1

vmgenid: 3ada9eb1-e6bc-4db3-804f-17928c38e65d

I made sure the card does not share an IOMMU group with any other device.
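The group membership can also be cross-checked directly from sysfs, independent of `pvesh`. This is a minimal sketch, assuming the standard `/sys/kernel/iommu_groups` layout; `list_iommu_groups` is a hypothetical helper name, not something shipped with PVE:

```bash
#!/usr/bin/env bash
# Print "<group> <pci-address>" for every device in every IOMMU group.
# The optional first argument overrides the sysfs root (assumption: the
# usual layout <root>/<group>/devices/<pci-address>).
list_iommu_groups() {
    local root="${1:-/sys/kernel/iommu_groups}"
    local dev group
    for dev in "$root"/*/devices/*; do
        [ -e "$dev" ] || continue      # glob matched nothing
        group="${dev%/devices/*}"      # strip "/devices/<address>"
        group="${group##*/}"           # keep only the group number
        printf '%s %s\n' "$group" "${dev##*/}"
    done | sort -n
}
```

Running `list_iommu_groups | awk '$1 == 16'` should then list only the devices in group 16, which is the group reported for 0000:17:00.0 here.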

Bash:

root@vhost1:~# pvesh get /nodes/$(hostname)/hardware/pci --pci-class-blacklist "" | grep 16

│ 0x010700 │ 0x0042 │ 0000:17:00.0 │ 16 │ 0x117c │ ExpressSAS 6Gb/s SAS/SATA HBA │ │ 0x0046 │ ExpressSAS H644 │ 0x117c │ ATTO Technology, Inc. │ ATTO Technology, Inc. │

│ 0x060400 │ 0x352a │ 0000:16:01.0 │ 15 │ 0x8086 │ │ │ 0x0000 │ │ 0x8086 │ Intel Corporation │ Intel Corporation │

│ 0x078000 │ 0x1be0 │ 0000:00:16.0 │ 25 │ 0x8086 │ │ │ 0x1c56 │ │ 0x15d9 │ Super Micro Computer Inc │ Intel Corporation │

│ 0x078000 │ 0x1be1 │ 0000:00:16.1 │ 25 │ 0x8086 │ │ │ 0x1c56 │ │ 0x15d9 │ Super Micro Computer Inc │ Intel Corporation │

│ 0x078000 │ 0x1be4 │ 0000:00:16.4 │ 25 │ 0x8086 │ │ │ 0x1c56 │ │ 0x15d9 │ Super Micro Computer Inc │ Intel Corporation │

│ 0x080700 │ 0x0b23 │ 0000:16:00.4 │ 14 │ 0x8086 │ │ │ 0x0000 │ │ 0x8086 │ Intel Corporation │ Intel Corporation │

│ 0x088000 │ 0x09a2 │ 0000:16:00.0 │ 30 │ 0x8086 │ Ice Lake Memory Map/VT-d │ │ 0x0000 │ │ 0x8086 │ Intel Corporation │ Intel Corporation │

│ 0x088000 │ 0x09a4 │ 0000:16:00.1 │ 31 │ 0x8086 │ Ice Lake Mesh 2 PCIe │ │ 0x0000 │ │ 0x8086 │ Intel Corporation │ Intel Corporation │

│ 0x088000 │ 0x09a3 │ 0000:16:00.2 │ 32 │ 0x8086 │ Ice Lake RAS │ │ 0x0000 │ │ 0x8086 │ Intel Corporation │ Intel Corporation │

Logs:

Bash:

root@vhost1:~# dmesg -l err

[ 0.513860] x86/cpu: SGX disabled by BIOS.

[ 1.853217] dmar5 SVM disabled, incompatible paging mode

[ 6.664031] ch 14:0:0:1: [ch0] ID/LUN unknown

As soon as I start any VM with this PCIe device attached, the system halts and reboots. No clear messages (e.g. a kernel panic) are shown on the console.
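Since nothing shows up on the console, the kernel log of the previous boot may still hold a trace. This is a minimal sketch, assuming the systemd journal is persistent on this host and that the last messages reached the disk before the reset (neither is guaranteed). `passthrough_hints` is a hypothetical helper name, and its keyword list is only a guess at what might be relevant:

```bash
#!/usr/bin/env bash
# Keep only kernel log lines that look related to PCI passthrough.
# Reads log text on stdin; the optional argument is the PCI address
# prefix to look for (default taken from the lspci output above).
passthrough_hints() {
    local addr="${1:-0000:17:00}"
    grep -iE "vfio|DMAR|AER|pcieport|mce|hardware error|${addr}"
}

# Intended use after the host has come back up (not run here):
#   journalctl -k -b -1 --no-pager | passthrough_hints
```

`journalctl -k -b -1` selects the kernel messages of the boot before the current one, so it only helps if it is run right after the crash and before any further reboot.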

Appreciate any help or pointers on this!

Cheers

P
