PBS compression backup job

- Thread starter Evgenii36ru

- Start date


If I choose a share mounted via CIFS (storage type CIFS), the compression can be selected. Is this how it should work?

Yes, backups on PBS work differently and the normal compression methods do not really apply (PBS saves chunks, which are always zstd compressed).

OK. Comparing the size of the virtual machine backups, I see that the copies on PBS are larger than those made with ZSTD (fast and good) compression. Perhaps I am mistaken; I will keep observing ...

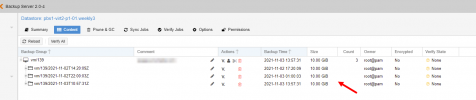

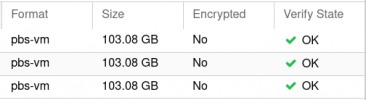

How did you compare? The size that is displayed on PBS is not the 'real' size, but the uncompressed, undeduplicated size of the images/files.

You can't really compare PBS and vzdump backups. PBS backups are deduplicated, which saves a lot of storage. Let's say you have a 10GB VM that can be compressed down to 5-10GB depending on the algorithm. If you now do 100 backups of that VM, your PBS datastore will only need something like 7GB to store them, because nothing needs to be stored more than once. If you use vzdump you might get the compression down to 5GB, but because you need to store that 5GB 100 times it will use 500GB and not just the 7GB that PBS would need. So the compression doesn't really matter if you keep more than one backup of every VM.

So PBS is preferable in nearly all cases.
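To make the arithmetic from the post above concrete, here is a rough sketch. The function names and the figure of 20 MB of new data per backup are assumptions picked only so that the result lands near the "like 7GB" from the example; real growth depends entirely on how much the VM changes between backups.

```python
# Rough storage model for the example above (sizes in MB to keep integers).
# Both helpers are hypothetical, not part of any Proxmox tool.

def vzdump_total_mb(compressed_backup_mb: int, backups: int) -> int:
    """Every vzdump archive is a full, independent copy."""
    return compressed_backup_mb * backups


def pbs_total_mb(first_backup_mb: int, new_data_per_backup_mb: int, backups: int) -> int:
    """Deduplicated store: the first backup plus only the new chunks of each later one."""
    return first_backup_mb + new_data_per_backup_mb * (backups - 1)


if __name__ == "__main__":
    # 5 GB compressed per vzdump archive, 100 backups kept.
    print("vzdump:", vzdump_total_mb(5000, 100), "MB")
    # Assumed: 5 GB for the first backup, 20 MB of new chunks per later backup.
    print("PBS:   ", pbs_total_mb(5000, 20, 100), "MB")
```

With these assumed numbers vzdump needs 500000 MB (500GB) and the deduplicated store needs 6980 MB (about 7GB), which matches the order of magnitude in the example.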


You can't see that for individual VMs. You can only look at how fast your PBS datastore is growing (and PBS will also predict in how many days it will be full).

thanks


is this also true for backing up files and directories?


What do you mean exactly?

The sizes shown in the Content tab in PBS are undeduplicated, uncompressed and not sparse.

Let's say I have a 10 GB folder of files. Using proxmox-backup-client I set up a backup. Will proxmox-backup-client copy the entire folder every time, or will it copy only new and changed files (analogous to rsync)?

Everything that you back up, no matter whether it is files, folders or block devices, will get chopped into compressed and deduplicated chunks of data. This is done on the client side, so it should only send chunks that aren't already stored in the PBS datastore.
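A minimal sketch of the idea described above, not the actual PBS implementation. Assumptions: fixed-size chunks of 4 KiB (tiny, for the demo only), zlib as a stand-in for zstd because it ships with Python, and a hypothetical in-memory `ChunkStore` in place of a real datastore. Chunks are addressed by their SHA-256 digest, so a chunk the store already has is never sent again.

```python
import hashlib
import zlib

CHUNK_SIZE = 4 * 1024  # demo value only; the thread mentions 4MB chunks for PBS


class ChunkStore:
    """Hypothetical stand-in for a datastore: digest -> compressed chunk."""

    def __init__(self):
        self.chunks = {}

    def has(self, digest: str) -> bool:
        return digest in self.chunks

    def put(self, digest: str, blob: bytes) -> None:
        self.chunks[digest] = blob


def backup(data: bytes, store: ChunkStore, chunk_size: int = CHUNK_SIZE):
    """Split data into chunks, upload only unknown ones, return (index, uploads)."""
    index = []      # ordered list of digests describing this snapshot
    uploaded = 0    # number of chunks that actually had to be sent
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        if not store.has(digest):
            # zlib here only because it is in the standard library (assumption).
            store.put(digest, zlib.compress(chunk))
            uploaded += 1
        index.append(digest)
    return index, uploaded


def restore(index, store: ChunkStore) -> bytes:
    """Rebuild a snapshot from its index."""
    return b"".join(zlib.decompress(store.chunks[d]) for d in index)


if __name__ == "__main__":
    store = ChunkStore()
    # Four distinct chunks in the first "backup".
    first = b"".join(bytes([i]) * CHUNK_SIZE for i in range(4))
    # Second "backup": only the second chunk changed.
    second = first[:CHUNK_SIZE] + b"\xff" * CHUNK_SIZE + first[2 * CHUNK_SIZE:]

    _, sent_first = backup(first, store)
    index_second, sent_second = backup(second, store)
    print("chunks sent:", sent_first, "then", sent_second)
    print("restore ok:", restore(index_second, store) == second)
```

The second run sends only the one changed chunk, which is the behaviour the rsync comparison in the question is asking about, although the unit here is a chunk rather than a file.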

thanks

Hello, I did a little test today. I created one VM, and this is its "df -h" output:

    [root@s01 ~]# df -h
    Filesystem                    Size  Used Avail Use% Mounted on
    devtmpfs                      3.9G     0  3.9G   0% /dev
    tmpfs                         3.9G     0  3.9G   0% /dev/shm
    tmpfs                         3.9G   12M  3.9G   1% /run
    tmpfs                         3.9G     0  3.9G   0% /sys/fs/cgroup
    /dev/mapper/VolGroup-lv_root   29G  7.7G   22G  27% /
    /dev/vda1                    1014M  263M  752M  26% /boot
    /dev/vdb1                      63G   37G   23G  62% /mnt/ncdata
    tmpfs                         783M     0  783M   0% /run/user/48
    tmpfs                         783M     0  783M   0% /run/user/0

So we have approx. 37G + 7.7G, which makes about 45G of data ...

Then I backed this VM up to the PBS.

The chunks dir then held about 65G ... Is it maybe "too early" to talk about compression? Maybe we need many more VMs, backups / snapshots ...

Thanks

BR

Tonci


PVE compresses every chunk file using ZSTD before sending it to the PBS.

If you are doing a VM backup, you have 103G of data that is compressed and deduplicated and only takes up 65G on disk when backed up. VM backups don't know or care about the file system; they work on the block level. File system usage is just a rough approximation of actual usage on disk (PBS uses 4MB chunks!).
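For the numbers in this reply, a quick back-of-the-envelope sketch. Assumptions: "G" is read as GiB and "4MB" as 4 MiB, and both helper names are made up for illustration. It only restates the figures from the thread (103G of block data, 65G in the chunks directory); it does not measure anything.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3


def chunk_count(image_bytes: int, chunk_bytes: int = 4 * MIB) -> int:
    """Number of fixed-size chunks needed to cover an image (rounded up)."""
    return -(-image_bytes // chunk_bytes)


def stored_fraction(stored: float, logical: float) -> float:
    """Share of the logical size that ended up on disk."""
    return stored / logical


if __name__ == "__main__":
    print("chunks for a 103G image:", chunk_count(103 * GIB))
    print("stored / logical: %.2f" % stored_fraction(65, 103))
```

So the backup is being compared against the 103G of block devices, not the roughly 45G that df reports as used, and against that baseline about 63% remained after compression and deduplication.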