Hello!

I noticed that files deleted before a backup is performed are still counted and backed up.

This is not good, especially when working with large files.

The problem can be easily isolated and reproduced.

For example:

1. Create a new virtual disk on a virtual machine

2. Enable the backup option for this disk only

3. On the virtual machine, prepare the file system (I tested it with ext4 and xfs on a Debian 10 VM)

4. Copy a file to the new disk (for example, an ISO of about 200 MB)

5. Make a backup (this will be the initial full backup)

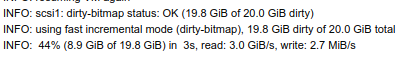

6. Make a new backup (a very small incremental backup, because there is no new data)

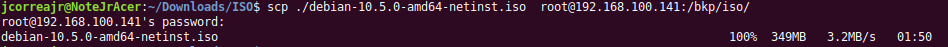

7. Copy a new file to the disk (for example, an ISO of about 350 MB)

8. Delete the newly copied 350 MB file (rm -rf file)

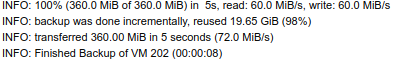

9. Make a new incremental backup

At this point you notice that the amount of data copied corresponds to the size of the deleted file.
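For anyone who wants to repeat steps 7 and 8 inside the guest without hunting for an ISO, here is a minimal Python sketch. It is only an illustration, not part of my original test: the function names and the file name `test-file.bin` are made up, and the demo at the bottom uses a temporary directory as a stand-in for the mount point of the new disk. It writes random data (so the content cannot be compressed or deduplicated away), flushes it to disk, and then deletes the file.

```python
import os
import tempfile
from pathlib import Path

CHUNK = 1024 * 1024  # write in 1 MiB chunks


def write_random_file(path: Path, size_mib: int) -> int:
    """Write size_mib MiB of random data to path and force it to disk."""
    written = 0
    with open(path, "wb") as f:
        for _ in range(size_mib):
            written += f.write(os.urandom(CHUNK))
        f.flush()
        os.fsync(f.fileno())  # make sure the blocks really reach the virtual disk
    return written


def copy_then_delete(mount_point: Path, size_mib: int) -> int:
    """Steps 7 and 8: create a file on the disk, then delete it again."""
    target = mount_point / "test-file.bin"  # hypothetical file name
    written = write_random_file(target, size_mib)
    target.unlink()
    return written


if __name__ == "__main__":
    # A temporary directory stands in for the mount point of the new disk;
    # replace it with the real mount point and a size near 350 to mirror the steps above.
    with tempfile.TemporaryDirectory() as d:
        n = copy_then_delete(Path(d), 4)
        print(f"wrote and deleted {n} bytes")
```

After running it against the real mount point, the next incremental backup (step 9) is the one to watch.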

As I said, I tested with ext4 and xfs. I also tested some cache options on the virtual disk, but the problem remained.

I am using the latest versions of PBS and PVE.

My backing storage is of type LVM-Thin.

Is there any way to solve this problem?

PS: When I restore the backup, the deleted file does not appear.

PVE package versions:

proxmox-ve: 6.3-1 (running kernel: 5.4.78-2-pve)

pve-manager: 6.3-3 (running version: 6.3-3/eee5f901)

pve-kernel-5.4: 6.3-3

pve-kernel-helper: 6.3-3

pve-kernel-5.3: 6.1-6

pve-kernel-5.4.78-2-pve: 5.4.78-2

pve-kernel-5.4.73-1-pve: 5.4.73-1

pve-kernel-5.4.65-1-pve: 5.4.65-1

pve-kernel-5.4.55-1-pve: 5.4.55-1

pve-kernel-5.3.18-3-pve: 5.3.18-3

pve-kernel-4.10.17-2-pve: 4.10.17-20

ceph-fuse: 12.2.11+dfsg1-2.1+b1

corosync: 3.0.4-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.16-pve1

libproxmox-acme-perl: 1.0.7

libproxmox-backup-qemu0: 1.0.2-1

libpve-access-control: 6.1-3

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.3-2

libpve-guest-common-perl: 3.1-4

libpve-http-server-perl: 3.1-1

libpve-storage-perl: 6.3-4

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.3-1

lxcfs: 4.0.6-pve1

novnc-pve: 1.1.0-1

proxmox-backup-client: 1.0.6-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.4-3

pve-cluster: 6.2-1

pve-container: 3.3-2

pve-docs: 6.3-1

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-3

pve-firmware: 3.1-3

pve-ha-manager: 3.1-1

pve-i18n: 2.2-2

pve-qemu-kvm: 5.1.0-8

pve-xtermjs: 4.7.0-3

qemu-server: 6.3-3

smartmontools: 7.1-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 0.8.5-pve1

PBS package versions:

proxmox-backup: 1.0-4 (running kernel: 5.4.78-2-pve)

proxmox-backup-server: 1.0.6-1 (running version: 1.0.6)

pve-kernel-5.4: 6.3-3

pve-kernel-helper: 6.3-3

pve-kernel-5.4.78-2-pve: 5.4.78-2

pve-kernel-5.4.73-1-pve: 5.4.73-1

pve-kernel-5.4.65-1-pve: 5.4.65-1

pve-kernel-5.4.60-1-pve: 5.4.60-2

pve-kernel-5.4.44-2-pve: 5.4.44-2

ifupdown2: 3.0.0-1+pve3

libjs-extjs: 6.0.1-10

proxmox-backup-docs: 1.0.6-1

proxmox-backup-client: 1.0.6-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.4-3

pve-xtermjs: 4.7.0-3

smartmontools: 7.1-pve2

zfsutils-linux: 0.8.5-pve1
