Hello everyone,

I am currently facing several issues with my local Proxmox Backup Server (PBS) that are most likely related, and I am hoping for some help in identifying the root cause.

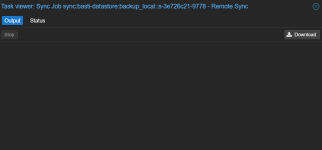

When I start a Push Sync Job from my local PBS, it fails, particularly when syncing larger VMs. If I then try to view the failed task in the Task Viewer, it no longer shows any output, so I have no way of understanding why the task failed. The same happens with Verify Jobs: the Task Viewer shows no output for them either. Prune Jobs, however, still show their log output as expected.

In addition, I receive a Connection Error as soon as I open the Status tab of a specific task. At the same time, the log of the local PBS is constantly being filled with very similar messages, for example:

```
Dec 23 05:05:54 pbs proxmox-backup-proxy[788]: processed 10.867 GiB in 3d 15h 11m 8s, uploaded 7.289 GiB
Dec 23 05:05:59 pbs proxmox-backup-proxy[788]: processed 8.492 GiB in 3d 20m 5s, uploaded 6.59 GiB
Dec 23 05:06:01 pbs proxmox-backup-proxy[788]: processed 19 GiB in 1h 6m 0s, uploaded 16.379 GiB
Dec 23 05:06:04 pbs proxmox-backup-proxy[788]: processed 3.586 GiB in 2d 1h 6m 4s, uploaded 1.91 GiB
Dec 23 05:06:05 pbs proxmox-backup-proxy[788]: processed 1.089 TiB in 1d 1h 6m 2s, uploaded 127.473 GiB
Dec 23 05:06:06 pbs proxmox-backup-proxy[788]: processed 15.52 GiB in 3d 1h 6m 5s, uploaded 13.223 GiB
Dec 23 05:06:07 pbs proxmox-backup-proxy[788]: processed 1.419 GiB in 4d 1h 6m 7s, uploaded 1.101 GiB
Dec 23 05:06:07 pbs proxmox-backup-proxy[788]: processed 7.793 GiB in 3d 18h 30m 6s, uploaded 3.395 GiB
Dec 23 05:06:13 pbs proxmox-backup-proxy[788]: processed 4.813 GiB in 3d 18h 6m 9s, uploaded 2.711 GiB
Dec 23 05:06:27 pbs proxmox-backup-proxy[788]: processed 2.641 GiB in 3d 20h 45m 6s, uploaded 1.844 GiB
```

These log entries repeat continuously, even when no job is actively running.
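The elapsed times in these entries range from about an hour to several days, which makes them hard to compare at a glance. The following is a minimal Python sketch for normalizing them, assuming every relevant line follows the `processed <size> in <duration>, uploaded <size>` pattern shown above. The names `parse_line`, `parse_duration` and `Entry` are my own, purely for illustration, and are not part of PBS; the script only reformats what the log already says and does not interpret it.

```python
import re
from typing import NamedTuple, Optional

# Hypothetical pattern for the proxmox-backup-proxy journal lines quoted above, e.g.
# "processed 10.867 GiB in 3d 15h 11m 8s, uploaded 7.289 GiB".
# Assumption: sizes always use a binary unit (KiB/MiB/GiB/TiB).
LINE_RE = re.compile(
    r"processed (?P<proc>[\d.]+) (?P<proc_unit>[KMGT]iB) "
    r"in (?P<dur>(?:\d+[dhms] ?)+), "
    r"uploaded (?P<up>[\d.]+) (?P<up_unit>[KMGT]iB)"
)

# Conversion factors to GiB and to seconds.
UNIT_TO_GIB = {"KiB": 1 / 1024**2, "MiB": 1 / 1024, "GiB": 1.0, "TiB": 1024.0}
SECONDS_PER = {"d": 86400, "h": 3600, "m": 60, "s": 1}


class Entry(NamedTuple):
    processed_gib: float
    uploaded_gib: float
    seconds: int


def parse_duration(text: str) -> int:
    """Turn a duration such as '3d 15h 11m 8s' into a number of seconds."""
    return sum(int(n) * SECONDS_PER[u] for n, u in re.findall(r"(\d+)([dhms])", text))


def parse_line(line: str) -> Optional[Entry]:
    """Extract sizes (in GiB) and elapsed seconds from one log line, or None if it does not match."""
    m = LINE_RE.search(line)
    if m is None:
        return None
    return Entry(
        processed_gib=float(m["proc"]) * UNIT_TO_GIB[m["proc_unit"]],
        uploaded_gib=float(m["up"]) * UNIT_TO_GIB[m["up_unit"]],
        seconds=parse_duration(m["dur"]),
    )


if __name__ == "__main__":
    # Two lines copied from the log excerpt above.
    sample = [
        "Dec 23 05:05:54 pbs proxmox-backup-proxy[788]: processed 10.867 GiB in 3d 15h 11m 8s, uploaded 7.289 GiB",
        "Dec 23 05:06:05 pbs proxmox-backup-proxy[788]: processed 1.089 TiB in 1d 1h 6m 2s, uploaded 127.473 GiB",
    ]
    for line in sample:
        e = parse_line(line)
        if e is None:
            continue
        # Average rate over the whole reported duration, in MiB/s.
        rate = e.processed_gib * 1024 / e.seconds if e.seconds else 0.0
        print(f"{e.seconds / 3600:8.1f} h  {e.processed_gib:10.3f} GiB processed  "
              f"{e.uploaded_gib:9.3f} GiB uploaded  {rate:8.3f} MiB/s")
```

To use it on the real journal, the `sample` list can be replaced with lines exported from `journalctl -u proxmox-backup-proxy`.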

Regarding my setup: locally, I am running Proxmox Backup Server 4.1.0 with three disks: a 12 TB Seagate IronWolf, a 4 TB WD Red, and an older 1 TB WD Blue. Each disk is configured as its own datastore, and all datastores use ZFS.

The offsite PBS also runs version 4.1.0, has two 2 TB WD disks with a separate ext4 datastore on each, and is connected via an IPsec site-to-site tunnel. This system does not show any of these issues.

As part of my troubleshooting, I recreated the datastores on the local PBS, which originally used ext4, as new ZFS datastores. I also completely reinstalled the local PBS, and I restarted both the PBS host and the proxmox-backup-proxy.service several times. I also checked disk utilization: none of the HDDs is more than 50% utilized. Unfortunately, the very first sync job after the reinstallation failed again, and the behavior described above reappeared.

Thank you very much in advance for your support.