I have 3 nodes in my cluster and am using an OVSBridge setup.

This is a variation of:

https://pve.proxmox.com/wiki/Open_v...RSTP.29_-_1Gbps_uplink.2C_10Gbps_interconnect

I was able to get all nodes to sync and work: the nodes can ping each other, and I get very good numbers when I test every direction with iPerf3.

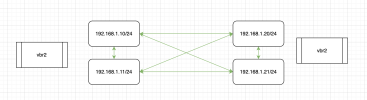

My problem is that I have one VM on node01 and one on node02, both using the OVSBridge setup (vmbr2) with static IPs. Both VMs can ping the nodes (192.168.1.10 and 192.168.1.20), but they cannot ping each other (192.168.1.11 and 192.168.1.21).

Any ideas, suggestions?

P.S. The comments I added in the UI seem to end up at the bottom of each section in the interfaces file, which makes the sections a little tricky to follow when I post them here.
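To make the comment placement easier to follow, here is a minimal Python sketch of how I read the dumps: each `#` comment is attached to the stanza that precedes it, not the one that follows. The names `group_stanzas` and `SAMPLE` are made up for illustration, and the sketch assumes every stanza starts with an `auto` line, which holds for the files in this post but not for interfaces files in general.

```python
def group_stanzas(text):
    """Split ifupdown-style text into stanzas, each starting at an 'auto' line.

    Returns a list of (interface, comments) tuples. A '#' line is attached to
    the most recent stanza, matching how the UI comments appear in this post.
    """
    stanzas = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("auto "):
            # A new stanza begins; remember the interface name.
            stanzas.append((line.split()[1], []))
        elif line.startswith("#") and stanzas:
            # Trailing comment: belongs to the stanza above it.
            stanzas[-1][1].append(line.lstrip("#").strip())
    return stanzas


# Shortened excerpt of the pvenode01 file (hypothetical sample input).
SAMPLE = """\
auto enp94s0f0
iface enp94s0f0 inet manual
    ovs_type OVSPort
    ovs_bridge vmbr2
#node3 (.30)

auto enp94s0f1
iface enp94s0f1 inet manual
    ovs_type OVSPort
    ovs_bridge vmbr2
#node2 (.20)
"""

for name, comments in group_stanzas(SAMPLE):
    print(name, "->", "; ".join(comments))
```

Read this way, on pvenode01 the port `enp94s0f0` is the link to node3 and `enp94s0f1` is the link to node2.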

pveversion -v:

pvenode01 /etc/networking/interfaces:

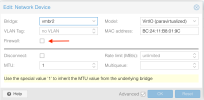

truenas-01 VM hosted on pvenode01 using vmbr2 w/ static IP 192.168.1.11/24

pvenode02 /etc/networking/interfaces:

truenas-02 VM hosted on pvenode01 using vmbr2 w/ static IP 192.168.1.21/24

pvenode03 /etc/networking/interfaces:

pveversion -v:

Code:

    root@pvenode01:~# pveversion -v
    proxmox-ve: 8.1.0 (running kernel: 6.5.11-7-pve)
    pve-manager: 8.1.4 (running version: 8.1.4/ec5affc9e41f1d79)
    proxmox-kernel-helper: 8.1.0
    proxmox-kernel-6.5: 6.5.11-7
    proxmox-kernel-6.5.11-7-pve-signed: 6.5.11-7
    proxmox-kernel-6.5.11-4-pve-signed: 6.5.11-4
    ceph: 18.2.1-pve2
    ceph-fuse: 18.2.1-pve2
    corosync: 3.1.7-pve3
    criu: 3.17.1-2
    glusterfs-client: 10.3-5
    ifupdown2: 3.2.0-1+pmx8
    ksm-control-daemon: 1.4-1
    libjs-extjs: 7.0.0-4
    libknet1: 1.28-pve1
    libproxmox-acme-perl: 1.5.0
    libproxmox-backup-qemu0: 1.4.1
    libproxmox-rs-perl: 0.3.3
    libpve-access-control: 8.0.7
    libpve-apiclient-perl: 3.3.1
    libpve-common-perl: 8.1.0
    libpve-guest-common-perl: 5.0.6
    libpve-http-server-perl: 5.0.5
    libpve-network-perl: 0.9.5
    libpve-rs-perl: 0.8.8
    libpve-storage-perl: 8.0.5
    libspice-server1: 0.15.1-1
    lvm2: 2.03.16-2
    lxc-pve: 5.0.2-4
    lxcfs: 5.0.3-pve4
    novnc-pve: 1.4.0-3
    openvswitch-switch: 3.1.0-2
    proxmox-backup-client: 3.1.2-1
    proxmox-backup-file-restore: 3.1.2-1
    proxmox-kernel-helper: 8.1.0
    proxmox-mail-forward: 0.2.3
    proxmox-mini-journalreader: 1.4.0
    proxmox-offline-mirror-helper: 0.6.4
    proxmox-widget-toolkit: 4.1.3
    pve-cluster: 8.0.5
    pve-container: 5.0.8
    pve-docs: 8.1.3
    pve-edk2-firmware: 4.2023.08-3
    pve-firewall: 5.0.3
    pve-firmware: 3.9-1
    pve-ha-manager: 4.0.3
    pve-i18n: 3.2.0
    pve-qemu-kvm: 8.1.2-6
    pve-xtermjs: 5.3.0-3
    qemu-server: 8.0.10
    smartmontools: 7.3-pve1
    spiceterm: 3.3.0
    swtpm: 0.8.0+pve1
    vncterm: 1.8.0
    zfsutils-linux: 2.2.2-pve1

pvenode01 /etc/network/interfaces:

Code:

    auto lo
    iface lo inet loopback

    auto enp94s0f0
    iface enp94s0f0 inet manual
        ovs_type OVSPort
        ovs_bridge vmbr2
        ovs_mtu 9000
        ovs_options vlan_mode=native-untagged other_config:rstp-path-cost=150 other_config:rstp-port-admin-edge=false other_config:rstp-port-mcheck=true other_config:rstp-port-auto-edge=false other_c>
    #node3 (.30)

    auto enp94s0f1
    iface enp94s0f1 inet manual
        ovs_type OVSPort
        ovs_bridge vmbr2
        ovs_mtu 9000
        ovs_options other_config:rstp-enable=true other_config:rstp-port-mcheck=true other_config:rstp-port-admin-edge=false vlan_mode=native-untagged other_config:rstp-path-cost=150 other_config:rst>
    #node2 (.20)

    auto eno1
    iface eno1 inet manual

    auto eno2
    iface eno2 inet manual

    auto bond0
    iface bond0 inet manual
        bond-slaves eno1 eno2
        bond-miimon 100
        bond-mode 802.3ad
        bond-xmit-hash-policy layer2+3

    auto vmbr2
    iface vmbr2 inet static
        address 192.168.1.10/24
        ovs_type OVSBridge
        ovs_ports enp94s0f0 enp94s0f1
        ovs_mtu 9000
        up ovs-vsctl set Bridge ${IFACE} rstp_enable=true other_config:rstp-priority=32768 other_config:rstp-forward-delay=4 other_config:rstp-max-age=6
        post-up sleep 10
    #10G pvecluster01

    auto vmbr0
    iface vmbr0 inet static
        address 10.0.4.10/24
        gateway 10.0.4.1
        bridge-ports bond0
        bridge-stp off
        bridge-fd 0
    #VLAN40

    auto vmbr1
    iface vmbr1 inet manual
        bridge-ports none
        bridge-stp off
        bridge-fd 0
    #LAN

    source /etc/network/interfaces.d/*

truenas-01 VM hosted on pvenode01 using vmbr2 w/ static IP 192.168.1.11/24

pvenode02 /etc/network/interfaces:

Code:

    auto lo
    iface lo inet loopback

    auto eno1
    iface eno1 inet manual
    #VLAN40

    auto eno2
    iface eno2 inet manual
    #VLAN40

    auto enp94s0f0
    iface enp94s0f0 inet manual
        ovs_type OVSPort
        ovs_bridge vmbr2
        ovs_mtu 9000
        ovs_options other_config:rstp-port-auto-edge=false other_config:rstp-port-admin-edge=false vlan_mode=native-untagged other_config:rstp-enable=true other_config:rstp-path-cost=150 other_config:rstp-port-mcheck=true
    #node03 (.30)

    auto enp94s0f1
    iface enp94s0f1 inet manual
        ovs_type OVSPort
        ovs_bridge vmbr2
        ovs_mtu 9000
        ovs_options other_config:rstp-port-mcheck=true other_config:rstp-path-cost=150 other_config:rstp-enable=true vlan_mode=native-untagged other_config:rstp-port-admin-edge=false other_config:rstp-port-auto-edge=false
    #node01 (.10)

    auto bond0
    iface bond0 inet manual
        bond-slaves eno1 eno2
        bond-miimon 100
        bond-mode 802.3ad
        bond-xmit-hash-policy layer2+3

    auto vmbr2
    iface vmbr2 inet static
        address 192.168.1.20/24
        ovs_type OVSBridge
        ovs_ports enp94s0f0 enp94s0f1
        ovs_mtu 9000
        up ovs-vsctl set Bridge ${IFACE} rstp_enable=true other_config:rstp-priority=32768 other_config:rstp-forward-delay=4 other_config:rstp-max-age=6
        post-up sleep 10
    #10G pvecluster01

    auto vmbr0
    iface vmbr0 inet static
        address 10.0.4.20/24
        gateway 10.0.4.1
        bridge-ports bond0
        bridge-stp off
        bridge-fd 0
    #VLAN40

    auto vmbr1
    iface vmbr1 inet manual
        bridge-ports none
        bridge-stp off
        bridge-fd 0
    #LAN

    source /etc/network/interfaces.d/*

truenas-02 VM hosted on pvenode02 using vmbr2 w/ static IP 192.168.1.21/24

pvenode03 /etc/network/interfaces:

Code:

    auto lo
    iface lo inet loopback

    auto enp1s0f0
    iface enp1s0f0 inet manual
    #VLAN40

    auto enp1s0f1
    iface enp1s0f1 inet manual
    #VLAN40

    auto enp2s0f0
    iface enp2s0f0 inet static
        ovs_type OVSPort
        ovs_bridge vmbr2
        ovs_mtu 9000
        ovs_options vlan_mode=native-untagged other_config:rstp-path-cost=150 other_config:rstp-port-auto-edge=false other_config:rstp-enable=true other_config:rstp-port-admin-edge=false other_config:rstp-port-mcheck=true
    #node01 (.10)

    auto enp2s0f1
    iface enp2s0f1 inet manual
        ovs_type OVSPort
        ovs_bridge vmbr2
        ovs_mtu 9000
        ovs_options other_config:rstp-port-admin-edge=false other_config:rstp-enable=true other_config:rstp-path-cost=150 other_config:rstp-port-auto-edge=false vlan_mode=native-untagged other_config:rstp-port-mcheck=true
    #node02 (.20)

    auto vmbr2
    iface vmbr2 inet static
        address 192.168.1.30/24
        ovs_type OVSBridge
        ovs_ports enp2s0f0 enp2s0f1
        ovs_mtu 9000
        up ovs-vsctl set Bridge ${IFACE} rstp_enable=true other_config:rstp-priority=32768 other_config:rstp-forward-delay=4 other_config:rstp-max-age=6
        post-up sleep 10
    #10G pvecluster01

    auto vmbr0
    iface vmbr0 inet static
        address 10.0.4.30/24
        gateway 10.0.4.1
        bridge-ports enp1s0f0
        bridge-stp off
        bridge-fd 0

    source /etc/network/interfaces.d/*