Hi,

We have several Proxmox hosts that are connected to each other and use Ceph storage.

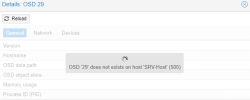

Technically Ceph works, but for one specific host this error message always appears on all of its OSDs (no matter which host's web UI we use):

OSD '29' does not exist on host 'SRV-Host' (500)

The configuration differs in the structure of the hostname and I suspect that this is causing a problem:

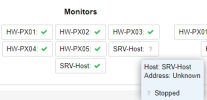

The host that doesn't display OSDs correctly:

Code:

    ceph osd metadata 29
    {
        "id": 29,
        ...
        "hostname": "SRV-Host.DOMAIN.com",
        ...
    }
    root@SRV-Host:~# hostname
    SRV-Host.DOMAIN.com

The host that does display OSDs correctly:

Code:

    ceph osd metadata 1
    {
        "id": 1,
        ...
        "hostname": "HW-PX03",
        ...
    }
    root@HW-PX02:~# hostname
    HW-PX02

Here I saw that the message comes from matching the hostname:

    + die "OSD '${osdid}' does not exists on host '${nodename}'\n"
    +     if $nodename ne $metadata->{hostname};

https://lists.proxmox.com/pipermail/pve-devel/2022-December/055118.html
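To make my suspicion concrete, here is a minimal Python sketch of how I understand that check. It is not the actual Proxmox code (that is Perl), and the function names `short_name`, `osd_check_passes`, and `mismatched_osds` are made up for illustration. I am assuming the node name Proxmox uses is the short name `SRV-Host` shown in the error message, while the OSD metadata holds the FQDN:

```python
def short_name(hostname: str) -> str:
    """Return the part of a hostname before the first dot."""
    return hostname.split(".", 1)[0]


def osd_check_passes(nodename: str, metadata: dict) -> bool:
    """Mirror the condition from the patch: plain string equality."""
    return nodename == metadata["hostname"]


def mismatched_osds(metadata_list: list) -> list:
    """IDs of OSDs whose recorded hostname is an FQDN rather than a short name."""
    return [m["id"] for m in metadata_list
            if m["hostname"] != short_name(m["hostname"])]


# Values copied from the command outputs in this post.
bad = {"id": 29, "hostname": "SRV-Host.DOMAIN.com"}
good = {"id": 1, "hostname": "HW-PX03"}

print(osd_check_passes("SRV-Host", bad))   # False: short name vs. FQDN
print(osd_check_passes("HW-PX03", good))   # True: both are short names
print(mismatched_osds([bad, good]))        # [29]
```

If this reading is right, every OSD on the affected host would fail the comparison simply because its metadata hostname contains the domain part.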

Can I somehow fix this problem without reinstalling, or is there no way around it?

Versions: Ceph 17.2.6 (currently updating to 17.2.7), Proxmox PVE 5.15.131-3 / pve-manager 7.4-17 (currently updating to PVE 5.15.143-1)

Best regards,
Yannik