Not sure whether to use that; both servers were installed from the Proxmox ISO.

Opt-in Linux 6.8 Kernel for Proxmox VE 8 available on test & no-subscription

- Thread starter t.lamprecht

- Tags

- kernel 6.8

So how is that a problem? Ubuntu is on 6.8 - so is Proxmox. Debian is on 6.1 LTS.

Better still, the widely used Linux Mint 21.3 (current) uses the 5.15 LTS kernel.

I think a lot of routers running OpenWrt are also on a 5.x kernel.

Are there known issues with Mellanox "mlx4_core" driver and kernel 6.8?

I am using 6.8 on other systems without issues so far.

However, on a recently put-together system with a Mellanox MCX353A, which is handled by the "mlx4" driver, I am seeing intermittent issues.

I don't have the details right now, as I had no time to really investigate.

Just wanted to ask, if something is already known in general.

System is a Broadwell Xeon E5 v4, with SR-IOV enabled.

Kernel 6.8.8-2 breaks interface stop viewing LXC containers status.

On a cluster using Ceph, the error in the interface is "can't open '/sys/fs/cgroup/blkio//lxc/..../blkio.throttle.io_service_bytes_recursive'" where ... is the LXC container ID.

The old kernel 6.8.4-3 works flawlessly.

Ceph runs on NVMe drives, and the servers are interconnected over a 10 Gb/s copper LAN.

In the same cluster I also have some nodes that use Ceph but do not provide OSDs to the cluster; on those nodes kernel 6.8.8-2 works without issues.

Hi,

please share your container configurationKernel 6.8.8-2 breaks interface stop viewing LXC containers status.

On a cluster using ceph the error in the interface is "can't open '/sys/fs/cgroup/blkio//lxc/..../blkio.throttle.io_service_bytes_recursive'" where ... is the lxc container id.

The old kernel 6.8.4.3 works flawlessy.

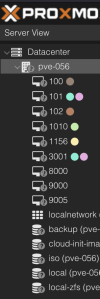

pct config <ID> and kernel commandline cat /proc/cmdline. What exactly do you mean by "interface stop viewing LXC containers status"? Please describe the exact steps leading to the error.That sounds like an issue with the status daemon. Please check the logs of theView attachment 70674

This is something similar to what I see in my cluster, no LXC container names or status, nevertheless the container are running....

pvestatd.service systemd service.Just an update: thanks to your advice I have noticed that in the command line of the not working nodes i still had appended "systemd.unified_cgroup_hierarchy=0" to the command line. While it was working in 6.8.4-3 doesn't work anymore in 6.8.8-2. I have removed the forcing to cgroup v1 and now everything works.
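For anyone who wants to check their own nodes for the same leftover parameter, here is a minimal sketch. It assumes a POSIX shell; the function name has_cgroup_v1_override is made up for illustration and is not part of Proxmox VE. Only /proc/cmdline and the systemd.unified_cgroup_hierarchy=0 parameter come from the posts above, and the sketch matches only that exact spelling.

```shell
#!/bin/sh
# Hypothetical helper (not a Proxmox VE tool): succeeds if the kernel command
# line passed as $1 contains the parameter that forces cgroup v1.
has_cgroup_v1_override() {
    # Pad with spaces so the parameter only matches as a whole word.
    case " $1 " in
        *" systemd.unified_cgroup_hierarchy=0 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Check the command line of the running kernel, if it is readable.
if [ -r /proc/cmdline ]; then
    if has_cgroup_v1_override "$(cat /proc/cmdline)"; then
        echo "cgroup v1 is forced via the kernel command line"
    else
        echo "no cgroup v1 override found on the kernel command line"
    fi
fi
```

Where the parameter has to be removed depends on the bootloader; on Proxmox VE that is usually /etc/default/grub for GRUB or /etc/kernel/cmdline for systemd-boot, so check which one your node uses before editing.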

Upgrading to a newer kernel often brings performance improvements, especially for task scheduling. The EEVDF task scheduler sounds interesting for potentially improving VM scheduling efficiency.

Is kernel 6.8 stable enough for production use now? Still seems to be a lot of posts here with issues related to it.

Yes, it's the default kernel since Proxmox VE 8.2.

I upgraded to kernel 6.8.8-2 but found that disks are often reconnected. Tested on 6.5.13, this error does not occur.

View attachment 71656

Any hints on how to solve this error? Thanks.

Why not the latest (no-subscription) 6.8.8-3?

I saw some comments saying that 6.8.8-2 still has stability issues after more than 14 days, so I upgraded to 6.8.8-2 for testing in my UAT environment, and then I saw this error on 6.8.8-2.

This looks like a drive issue rather than a kernel issue.

Using 6.8.8-3-pve, the system shows some kind of kexec error after the start. It also seems to do nothing for 60 seconds after starting the NFS server:

Code:

[ 21.998644] Loading iSCSI transport class v2.0-870.

[ 22.082475] NFSD: Using nfsdcld client tracking operations.

[ 22.082479] NFSD: no clients to reclaim, skipping NFSv4 grace period (net f0000000)

[ 88.727321] kernel: 000000000642de24 kernel_size: 0xd7dc68

[ 88.737335] kexec measurement buffer for the loaded kernel at 0xb5ffb000.

[ 88.737357] Crash PT_LOAD ELF header. phdr=00000000cd34526f vaddr=0xffff984500100000, paddr=0x100000, sz=0x99ff000 e_phnum=35 p_offset=0x100000

[ 88.737360] Crash PT_LOAD ELF header. phdr=000000003a7b963c vaddr=0xffff98450a000000, paddr=0xa000000, sz=0x200000 e_phnum=36 p_offset=0xa000000

[ 88.737362] Crash PT_LOAD ELF header. phdr=00000000de9f92e9 vaddr=0xffff98450a20e000, paddr=0xa20e000, sz=0xa3df2000 e_phnum=37 p_offset=0xa20e000

[ 88.737364] Crash PT_LOAD ELF header. phdr=000000000b4869a2 vaddr=0xffff9845b6000000, paddr=0xb6000000, sz=0x706018 e_phnum=38 p_offset=0xb6000000

[ 88.737366] Crash PT_LOAD ELF header. phdr=00000000861d1b6a vaddr=0xffff9845b6706018, paddr=0xb6706018, sz=0x34c40 e_phnum=39 p_offset=0xb6706018

[ 88.737368] Crash PT_LOAD ELF header. phdr=000000006eb0af5f vaddr=0xffff9845b673ac58, paddr=0xb673ac58, sz=0x3c0 e_phnum=40 p_offset=0xb673ac58

[ 88.737369] Crash PT_LOAD ELF header. phdr=000000009b7e924f vaddr=0xffff9845b673b018, paddr=0xb673b018, sz=0x34c40 e_phnum=41 p_offset=0xb673b018

[ 88.737371] Crash PT_LOAD ELF header. phdr=0000000095c754d9 vaddr=0xffff9845b676fc58, paddr=0xb676fc58, sz=0x13763a8 e_phnum=42 p_offset=0xb676fc58

[ 88.737373] Crash PT_LOAD ELF header. phdr=00000000cdf1a6f3 vaddr=0xffff9845b7ae7000, paddr=0xb7ae7000, sz=0x3e5e000 e_phnum=43 p_offset=0xb7ae7000

[ 88.737374] Crash PT_LOAD ELF header. phdr=000000001c941888 vaddr=0xffff9845bdfff000, paddr=0xbdfff000, sz=0x1001000 e_phnum=44 p_offset=0xbdfff000

[ 88.737376] Crash PT_LOAD ELF header. phdr=00000000560cc64d vaddr=0xffff984600000000, paddr=0x100000000, sz=0xf3f380000 e_phnum=45 p_offset=0x100000000

[ 88.737381] Loaded ELF headers at 0xae000000 bufsz=0x1000 memsz=0xe1000

[ 88.737389] Loaded purgatory at 0xb5ff6000

[ 88.737394] Loaded boot_param, command line and misc at 0xb5ff4000 bufsz=0x1540 memsz=0x2000

[ 88.737395] Loaded 64bit kernel at 0xb2200000 bufsz=0xd78c68 memsz=0x3c3d000

[ 88.737518] Loaded initrd at 0xb0b41000 bufsz=0x16bed70 memsz=0x16bed70

[ 88.737520] Final command line is: elfcorehdr=0xae000000 BOOT_IMAGE=/vmlinuz-6.8.8-3-pve root=UUID=c461dc8b-3870-434f-acf7-4163316a394d ro root=UUID=c461dc8b-3870-434f-acf7-4163316a394d rootfstype=btrfs rootflags=degraded ro quiet console=ttyS0,115200 console=tty0 iommu=pt reset_devices systemd.unit=kdump-tools-dump.service nr_cpus=1 irqpoll usbcore.nousb

[ 88.737529] E820 memmap:

[ 88.737530] 0000000000001000-000000000009ffff (1)

[ 88.737532] 00000000bd088000-00000000bd0bffff (3)

[ 88.737533] 000000000a200000-000000000a20dfff (4)

[ 88.737534] 00000000bd0c0000-00000000bd3dcfff (4)

[ 88.737536] 0000000000000000-0000000000000fff (2)

[ 88.737537] 00000000000a0000-00000000000fffff (2)

[ 88.737538] 0000000009aff000-0000000009ffffff (2)

[ 88.737539] 00000000b7ae6000-00000000b7ae6fff (2)

[ 88.737541] 00000000bb945000-00000000bd087fff (2)

[ 88.737542] 00000000bd3dd000-00000000bdffefff (2)

[ 88.737543] 00000000bf000000-00000000bfffffff (2)

[ 88.737544] 00000000fea00000-00000000fea0ffff (2)

[ 88.737545] 00000000fec10000-00000000fec10fff (2)

[ 88.737547] 00000000fed00000-00000000fed00fff (2)

[ 88.737548] 00000000fed40000-00000000fed44fff (2)

[ 88.737549] 00000000fed80000-00000000fed8ffff (2)

[ 88.737550] 00000000fedc2000-00000000fedcffff (2)

[ 88.737551] 00000000fedd4000-00000000fedd5fff (2)

[ 88.737553] 000000103f380000-000000103fffffff (2)

[ 88.737554] 00000000ae0e00b0-00000000b5ffffff (1)

[ 88.737583] nr_segments = 6

[ 88.737585] segment[0]: buf=0x00000000495537f2 bufsz=0x3c19 mem=0xb5ffb000 memsz=0x5000

[ 88.737588] segment[1]: buf=0x0000000027262b5e bufsz=0x1000 mem=0xae000000 memsz=0xe1000

[ 88.737630] segment[2]: buf=0x00000000486ca7ed bufsz=0x4000 mem=0xb5ff6000 memsz=0x5000

[ 88.737634] segment[3]: buf=0x000000001e521622 bufsz=0x1540 mem=0xb5ff4000 memsz=0x2000

[ 88.737636] segment[4]: buf=0x0000000013206fcb bufsz=0xd78c68 mem=0xb2200000 memsz=0x3c3d000

[ 88.742106] segment[5]: buf=0x00000000f9a801d4 bufsz=0x16bed70 mem=0xb0b41000 memsz=0x16bf000

[ 88.743790] kexec_file_load: type:1, start:0xb5ff61a0 head:0x4 flags:0x2

[ 88.918487] softdog: initialized. soft_noboot=0 soft_margin=60 sec soft_panic=0 (nowayout=0)

[ 88.918493] softdog: soft_reboot_cmd=<not set> soft_active_on_boot=0

[ 89.017765] Process accounting resumed

[ 89.272750] kauditd_printk_skb: 11 callbacks suppressed

This happens before LXC is initialized. However, I'm not sure if those events are related.

The system seems to work fine, however.

EDIT: Seems to be related to kdump:

Code:

kdump-tools[2121]: Creating symlink /var/lib/kdump/initrd.img.

...Crash happens

kdump-tools[5785]: loaded kdump kernel

kdump-tools[5784]: /sbin/kexec -p -s --command-line="BOOT_IMAGE=/vmlinuz-6.8.8-3-pve...

kdump-tools[2121]: loaded kdump kernel.

The kernel that was released this week, 6.8.8-4, seems to be unstable again for one of my VMs (TrueNAS SCALE). I've pinned 6.8.8-3, rebooted, and all is stable again. It seems that with every other version or so there is now a regression.

Hi,

the only difference between 6.8.8-3 and 6.8.8-4 is adding a missing check in the tap/tun network devices, from the changelog

Code:

proxmox-kernel-6.8 (6.8.8-4) bookworm; urgency=medium

* fix CVE-2024-41090/41091: DoS via short ethernet frames over tun/tap

-- Proxmox Support Team <support@proxmox.com> Fri, 26 Jul 2024 13:15:13 +0200

so it's rather unlikely that your issue is because of the new kernel version here. You do want that fix.

Got CVE-2024-38540 on my new PVE 8 installation (btw, it's about #post-651061).

When do you plan to upgrade kernel to >=6.8.12?

Issue introduced in 5.7 with commit 0c4dcd602817 and fixed in 6.8.12 with commit 627493443f3a
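To see where a node stands relative to 6.8.12, the version comparison can be sketched as below. This assumes GNU sort (for the -V option) and a release string shaped like "6.8.8-3-pve"; the function name kernel_at_least is hypothetical, not an existing tool.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if kernel release $1 is at least version $2.
# Assumes GNU sort (-V) and a release string such as "6.8.8-3-pve".
kernel_at_least() {
    have=${1%%-*}    # drop the package suffix: "6.8.8-3-pve" -> "6.8.8"
    want=$2
    # Version-sort both values; if the wanted version sorts first (or they
    # are equal), the running kernel is at least that version.
    lowest=$(printf '%s\n%s\n' "$have" "$want" | sort -V | head -n 1)
    [ "$lowest" = "$want" ]
}

if kernel_at_least "$(uname -r)" 6.8.12; then
    echo "running kernel is 6.8.12 or newer"
else
    echo "running kernel is older than 6.8.12"
fi
```

Note that this only compares the upstream base version. Distribution kernels can carry backported fixes, so an older version number does not by itself prove that a given CVE is unpatched; the package changelog is the place to confirm.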
