Hello,

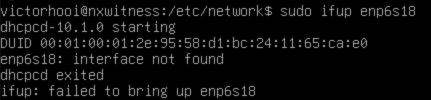

I tried this 6.11 kernel on my HW, but it does not activate the network card (which works perfectly fine with a different OS/kernel).

The odd thing is that none of the 6.x PVE kernels is able to activate my NIC, yet the same NIC worked fine with PVE 7.x on 5.x-series kernels.

Even odder is that ArcLinux (latest available ISO, kernel 6.8.2) works perfectly fine on the same HW: I did a test boot from the installation ISO without actually installing it, activated the NIC and ran some network tests without noticing anything odd.

The HW is

- Dell Wyse 5070 thin client (on the latest Dell BIOS)

- NIC: Intel X520-2 (an I350 behaves the same)

Attached you will find

- the dmesg output for the PVE 6.11 kernel and for the ArcLinux 6.8 kernel

- the lspci output under the PVE 6.11 kernel

- a screenshot showing the NIC working on ArcLinux (enp1s0f0/enp1s0f1 are the 2 ports of the X520, enp2s0 is the built-in Realtek)

While researching the issue, I came across other posts from people having trouble activating PCI boards with PVE 6.x kernels.

Possibly this is related to the way the kernel interacts with the BIOS and the real "culprit" is my system, but as I have shown, other kernels are more tolerant of my HW combination.

I even tried disabling PCI Advanced Error Reporting (see the kernel boot parameters in the dmesg output), without any result.

I thought about checking the kernel build configuration, but things have become far more complicated since the last time I compiled a kernel 25+ years ago: the config file is almost 12,000 lines long.

A quick diff between the ArcLinux and PVE config files revealed almost 1,200 differences (comments excluded).
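To narrow those ~1,200 differences down, something like the following could help. It is a minimal sketch, not an official tool: it parses two kernel `.config` files, treats `# CONFIG_FOO is not set` as the value `n`, ignores all other comments, and can filter option names with a regex (for example on `PCI`, `IXGBE` for the X520 driver or `IGB` for the I350 driver). The script name `kconfig_diff.py` is hypothetical; as far as I know, the kernel source tree also ships `scripts/diffconfig`, which does a similar job.

```python
"""Compare two Linux kernel .config files, ignoring comments (a sketch)."""
import re
import sys

# "CONFIG_FOO=value"
SET_RE = re.compile(r"^(CONFIG_[A-Za-z0-9_]+)=(.*)$")
# "# CONFIG_FOO is not set"
UNSET_RE = re.compile(r"^# (CONFIG_[A-Za-z0-9_]+) is not set$")


def parse_config(text):
    """Map option name -> value; explicitly unset options get 'n'."""
    options = {}
    for raw in text.splitlines():
        line = raw.strip()
        m = SET_RE.match(line)
        if m:
            options[m.group(1)] = m.group(2)
            continue
        m = UNSET_RE.match(line)
        if m:
            options[m.group(1)] = "n"
        # Blank lines and ordinary comments are ignored.
    return options


def diff_configs(a, b, pattern=""):
    """Yield (option, value_a, value_b) for options whose values differ.

    An option absent from one file is reported as None. Only option
    names matching the regex `pattern` are reported (empty = all).
    """
    rx = re.compile(pattern)
    for name in sorted(set(a) | set(b)):
        va, vb = a.get(name), b.get(name)
        if va != vb and rx.search(name):
            yield name, va, vb


def main(args):
    if len(args) < 2:
        print("usage: kconfig_diff.py CONFIG_A CONFIG_B [REGEX]")
        return
    pattern = args[2] if len(args) > 2 else ""
    with open(args[0]) as fa, open(args[1]) as fb:
        cfg_a, cfg_b = parse_config(fa.read()), parse_config(fb.read())
    for name, va, vb in diff_configs(cfg_a, cfg_b, pattern):
        print(f"{name}: {va} -> {vb}")


if __name__ == "__main__":
    main(sys.argv[1:])
```

On Debian-based systems such as PVE the running kernel's config is usually found under `/boot/config-<version>`, while Arch-based kernels typically expose it as `/proc/config.gz` (decompress it first), so a run might look like `python3 kconfig_diff.py pve.config arch.config 'PCI|IXGBE|IGB'`.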

This is beyond my knowledge and resources.

I hope some kernel/PCI guru will turn a benevolent eye to this issue and can enlighten me, and possibly many others in my situation!

For the time being, thank you all for your time and attention, and I wish you a merry festive season.

Cheers,

Max

P.S. I know I am pushing this poor little thin client beyond Dell's specifications by expecting it to drive 2x10Gb ports and 32GB of RAM (which Linux also seems unable to use in full, given the lack of sufficient MTRRs), but I like to experiment without using too much electricity or making noise, and within the space (and money; everything is used) I have available, so that it all stays within my WAF, which is very low ;-)