Opt-in Linux 6.1 Kernel for Proxmox VE 7.x available

- Thread starter t.lamprecht

- Start date

When checking this issue with PBS backup/restore and the opt-in 6.1 kernel we managed to reproduce it on some setups.

That would be those with /tmp located on ZFS (normal if the whole root file system is on ZFS).

There, the open call with the O_TMPFILE flag set, used for downloading the previous backup index for incremental backup, fails with EOPNOTSUPP 95 Operation not supported.

It seems ZFS 2.1.7 still lacks some compatibility with the 6.1 kernel, which reworked parts of the VFS layer w.r.t. tempfile handling. We notified ZFS upstream with a minimal reproducer for now and will look into providing a stop-gap fix for this if upstream needs more time to handle it as they deem correct.

Until then we recommend either avoiding the initial pve-kernel-6.1.0-1-pve package if you have your root filesystem on ZFS, or moving the /tmp directory away from ZFS, e.g., by making it a tmpfs mount.
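For anyone who wants to check whether a given directory is affected, here is a minimal Python sketch. It is not the reproducer that was sent to ZFS upstream, just an illustration of the same kind of open call. The function name `probe_o_tmpfile` is made up for this example, and it assumes Linux with a Python build that exposes `os.O_TMPFILE`.

```python
import errno
import os
import tempfile
from typing import Optional


def probe_o_tmpfile(directory: str) -> Optional[int]:
    """Try to open an unnamed temporary file inside `directory`.

    Returns None if the open succeeds, otherwise the errno of the failure
    (95 / EOPNOTSUPP would match the behaviour described above).
    """
    try:
        fd = os.open(directory, os.O_TMPFILE | os.O_RDWR, 0o600)
    except OSError as err:
        return err.errno
    os.close(fd)  # the unnamed file disappears as soon as it is closed
    return None


if __name__ == "__main__":
    for path in sorted({"/tmp", tempfile.gettempdir()}):
        result = probe_o_tmpfile(path)
        if result is None:
            print(f"{path}: O_TMPFILE works")
        else:
            print(f"{path}: failed with errno {result} ({os.strerror(result)})")
```

Running it once against a directory on the ZFS root and once against a tmpfs mount should show the difference, if the setup is affected in the way described in this thread.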

Thank you very much for your help with this. Setting /tmp as a tmpfs mount does, indeed, seem to have resolved this issue on my systems.

The tmpfs mount workaround works; I added it to fstab to make it a permanent change. Combined with a swap disk I'm not worried about running out of memory.

Could you please explain how to do this properly?

Go to the shell in the Proxmox GUI and type 'vi /etc/fstab' and add the following line:

Code:

tmpfs /tmp tmpfs defaults 0 0

The size is dynamic and the kernel can swap it out if needed so it's not going to steal memory when it doesn't need to.

https://www.kernel.org/doc/html/latest/filesystems/tmpfs.html
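To confirm that the fstab entry took effect after a reboot (or after mounting /tmp by hand), one option is to look up which filesystem backs /tmp in the mount table. The sketch below is only an illustration: `fstype_for` is a hypothetical helper, the sample mount table is made up, and mount points containing spaces (escaped as \040 in /proc/self/mounts) are not handled.

```python
import os
from typing import Optional


def fstype_for(path: str, mounts_text: str) -> Optional[str]:
    """Return the filesystem type of the mount that contains `path`.

    `mounts_text` uses the /proc/self/mounts layout:
    device, mount point, fs type, options, dump, pass.
    The longest matching mount point wins; later entries win ties.
    """
    path = os.path.normpath(path)
    best_mount, best_type = "", None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        mount_point, fs_type = fields[1], fields[2]
        prefix = mount_point.rstrip("/") + "/"
        if (path + "/").startswith(prefix) and len(mount_point) >= len(best_mount):
            best_mount, best_type = mount_point, fs_type
    return best_type


# Made-up sample resembling a ZFS root with /tmp moved to tmpfs.
SAMPLE_MOUNTS = """\
rpool/ROOT/pve-1 / zfs rw,relatime 0 0
tmpfs /tmp tmpfs rw,relatime 0 0
"""

if __name__ == "__main__":
    print("sample /tmp:", fstype_for("/tmp", SAMPLE_MOUNTS))
    if os.path.exists("/proc/self/mounts"):
        with open("/proc/self/mounts") as handle:
            print("this host /tmp:", fstype_for("/tmp", handle.read()))
```

If the second line still reports zfs, the tmpfs mount is not active yet.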

There are ZFS updates for Proxmox no-subscription, including a new 5.15 kernel version with newer ZFS support, but no 6.1 kernel version updates. I wonder if dist-upgrading would break a Proxmox VE running with kernel 6.1.

EDIT: pve-kernel-5.15.83-1-pve explicitly mentions support for the ZFS 2.1.7-pve1 updates (from 2.1.6-pve1), but I can't see the kernel 6.1 change log as easily in the GUI.

* which zfsutils-linux version do you currently run, and which is about to get updated?

* the 6.1 kernel already ships with ZFS 2.1.7 kernel part

in general AFAIR zfs userspace utils and kernel version mismatches (e.g. 2.1.6 userspace and 2.1.7 kernel or the other way around) are not a cause of problems (or have not been until now in my experience)

Will there be a posting on this thread when this kernel is available on the enterprise repository?

Yes, we'll update this thread then. But we'll wait until the ZFS tmpfile issue is resolved before moving it on.

Please be aware of the latest kernel 5.15 serious security flaw: https://www.zdnet.com/article/patch-now-serious-linux-kernel-security-hole-uncovered/

I've been using the Edge kernels for months on several machines without issue - Thankful for the 6.1 release!!

Happy Holidays!

This is a non-issue.

ksmbd is not active, and it was patched a long time ago in 5.15.61.

Proxmox is now at 5.15.83 (at least with non-subscription repo)

I wouldn't be too concerned about it if you are not using ksmbd (in-kernel smb server, userspace smb implementation - samba - is not affected). Also it is only of concern to those who do use ksmbd with many random users.

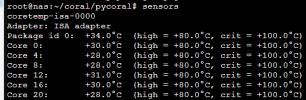

Kernel 6.2 has new hwmon sensor support for more Asus motherboards with nct6798d chips. Can we test 6.2 in Proxmox?

6.2 is RC1. Wayyyyyy too early to be asking for it.

Yeah, the price we pay for having bleeding edge tech. There's some big strides being made in the Asus sensors department and I wanted to test against my z690 proart since it shares the same chip as boards on the latest supported list. Problem is we're about 8 weeks or more away from seeing this in mainline.

The kernel headers are missing (linux-headers-6.1.0-1-pve) so I can't build dkms modules.

You need to use pve-headers-6.1.0-1-pve for Proxmox VE. It works fine with building the vendor-reset module. It comes automatically with pve-headers-6.1.

Code:

# uname -a
Linux proxmox 6.1.0-1-pve #1 SMP PREEMPT_DYNAMIC PVE 6.1.0-1 (Tue, 13 Dec 2022 15:08:53 +0 x86_64 GNU/Linux

The missing ) is driving me crazy!

Me too, already fixed in git so the next build should be fine again.

Switched to pve-kernel-6.1 yesterday, from 5.15. Apparently, something in 6.1 messes with the device IDs of one single storage device:

After restart, I noticed a delay in booting the system, so I looked at the console and noticed an "A start job is running for dev-disk-by-id /dev/disk/by-id/nvme-eui.6479a7311269019b-part4" notification from systemd, with a timeout of 1m30s. After this expired, boot continued fine, but the delay shows up on subsequent reboots with 6.1. Rebooting with the previous 5.15 kernel fixed this immediately.

I have a ZFS root pool rpool consisting of two mirrored NVMe devices, and another SATA SSD-based storage pool. Both pools were set up with disk IDs, i.e. rpool has been using nvme-eui.0026b7683b8e8485-part4 and nvme-eui.6479a7311269019b-part4 so far. When looking up the mentioned disk IDs, I only see one of the two NVMe devices showing up with the nvme-eui. syntax and the other one appearing as nvme-nvme.<aveeeeerylongserial> all of a sudden:

Code:

» ls -l /dev/disk/by-id/nvme*
lrwxrwxrwx 1 root root 13 Dec 18 12:25 /dev/disk/by-id/nvme-KINGSTON_SA2000M8250G_50026B7683B8E848 -> ../../nvme0n1
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-KINGSTON_SA2000M8250G_50026B7683B8E848-part1 -> ../../nvme0n1p1
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-KINGSTON_SA2000M8250G_50026B7683B8E848-part2 -> ../../nvme0n1p2
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-KINGSTON_SA2000M8250G_50026B7683B8E848-part3 -> ../../nvme0n1p3
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-KINGSTON_SA2000M8250G_50026B7683B8E848-part4 -> ../../nvme0n1p4
lrwxrwxrwx 1 root root 13 Dec 18 12:25 /dev/disk/by-id/nvme-PNY_CS3030_250GB_SSD_PNY09200003790100411 -> ../../nvme1n1
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-PNY_CS3030_250GB_SSD_PNY09200003790100411-part1 -> ../../nvme1n1p1
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-PNY_CS3030_250GB_SSD_PNY09200003790100411-part2 -> ../../nvme1n1p2
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-PNY_CS3030_250GB_SSD_PNY09200003790100411-part3 -> ../../nvme1n1p3
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-PNY_CS3030_250GB_SSD_PNY09200003790100411-part4 -> ../../nvme1n1p4
lrwxrwxrwx 1 root root 13 Dec 18 12:25 /dev/disk/by-id/nvme-eui.0026b7683b8e8485 -> ../../nvme0n1
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-eui.0026b7683b8e8485-part1 -> ../../nvme0n1p1
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-eui.0026b7683b8e8485-part2 -> ../../nvme0n1p2
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-eui.0026b7683b8e8485-part3 -> ../../nvme0n1p3
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-eui.0026b7683b8e8485-part4 -> ../../nvme0n1p4
lrwxrwxrwx 1 root root 13 Dec 18 12:25 /dev/disk/by-id/nvme-nvme.1987-504e593039323030303033373930313030343131-504e592043533330333020323530474220535344-00000001 -> ../../nvme1n1
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-nvme.1987-504e593039323030303033373930313030343131-504e592043533330333020323530474220535344-00000001-part1 -> ../../nvme1n1p1
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-nvme.1987-504e593039323030303033373930313030343131-504e592043533330333020323530474220535344-00000001-part2 -> ../../nvme1n1p2
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-nvme.1987-504e593039323030303033373930313030343131-504e592043533330333020323530474220535344-00000001-part3 -> ../../nvme1n1p3
lrwxrwxrwx 1 root root 15 Dec 18 12:25 /dev/disk/by-id/nvme-nvme.1987-504e593039323030303033373930313030343131-504e592043533330333020323530474220535344-00000001-part4 -> ../../nvme1n1p4

This leads to the situation that the rpool is no longer imported using device IDs for both vdevs (i.e. under 5.15 both imported with nvme-eui.xxx):

Code:

» zpool status rpool
  pool: rpool
 state: ONLINE
  scan: scrub repaired 0B in 00:00:17 with 0 errors on Sun Dec 11 00:24:18 2022
config:

    NAME                                 STATE     READ WRITE CKSUM
    rpool                                ONLINE       0     0     0
      mirror-0                           ONLINE       0     0     0
        nvme-eui.0026b7683b8e8485-part3  ONLINE       0     0     0
        nvme1n1p3                        ONLINE       0     0     0

errors: No known data errors

The other storage pool is behaving just fine. Any ideas a) why this one device changes its naming convention under 6.1, b1) how to potentially revert this, OR b2) how to fix the ongoing boot delay by switching to other device IDs (e.g. the ones with nvme-KINGSTON_SA20... and nvme-PNY_CS30), considering this is the root pool, which cannot be easily exported and re-imported?

Thanks and regards

Following up on this, as I have not seen any replies: I did some testing and noticed that pve-kernel-6.1 does not expose an EUI identifier for one of my NVMe devices, and therefore its WWID changes too (which is supposed to be unique and stable?) compared to default pve-kernel-5.15 (which shows an eui for both of them!):

Code:

» ls -l /sys/block/nvme*/{wwid,eui}
-r--r--r-- 1 root root 4.0K Dec 28 18:02 /sys/block/nvme0n1/eui
-r--r--r-- 1 root root 4.0K Dec 28 18:00 /sys/block/nvme0n1/wwid
-r--r--r-- 1 root root 4.0K Dec 28 18:00 /sys/block/nvme1n1/wwid
» cat /sys/block/nvme0n1/wwid /sys/block/nvme1n1/wwid
eui.0026b7683b8e8485
nvme.1987-504e593039323030303033373930313030343131-504e592043533330333020323530474220535344-00000001

For the sake of testing, I also installed pve-kernel-5.19 and to my surprise the exact same issue already happens with 5.19!

Is someone else seeing this with either 5.19 or 6.1 (i.e. missing /sys/block/nvme*/eui and/or inconsistent wwid)?

Regards
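As a side note, the long nvme.… identifier above looks like a fallback built from the controller's own data rather than an EUI. The Python sketch below decodes it under the assumption that the layout is vendor ID, hex-encoded serial, hex-encoded model and namespace ID, separated by dashes. That layout is my reading of the output in this post, not something confirmed in the thread, and `decode_nvme_wwid` is a made-up helper name.

```python
def decode_nvme_wwid(wwid: str) -> dict:
    """Split an NVMe wwid string into its parts.

    Assumed layouts (not confirmed in the thread):
      eui.<hex>                                -> EUI-based identifier
      nvme.<vendor>-<serial hex>-<model hex>-<nsid hex> -> fallback identifier
    """
    if wwid.startswith("eui."):
        return {"kind": "eui", "eui": wwid[len("eui."):]}
    if wwid.startswith("nvme."):
        vendor, serial_hex, model_hex, nsid = wwid[len("nvme."):].split("-")
        return {
            "kind": "nvme",
            "vendor_id": vendor,
            "serial": bytes.fromhex(serial_hex).decode("ascii"),
            "model": bytes.fromhex(model_hex).decode("ascii"),
            "namespace_id": int(nsid, 16),
        }
    raise ValueError(f"unrecognised wwid format: {wwid!r}")


if __name__ == "__main__":
    samples = [
        "eui.0026b7683b8e8485",
        "nvme.1987-504e593039323030303033373930313030343131-"
        "504e592043533330333020323530474220535344-00000001",
    ]
    for sample in samples:
        print(decode_nvme_wwid(sample))
```

The decoded serial and model match the nvme-PNY_CS3030_250GB_SSD_PNY09200003790100411 link in the by-id listing, so the model/serial based links may be a more stable choice for that device than the wwid based ones.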
