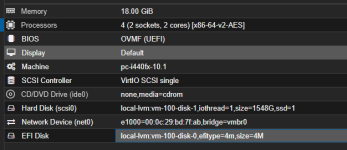

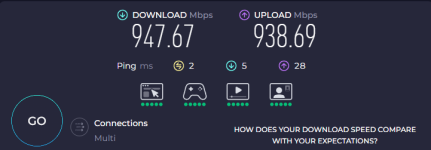

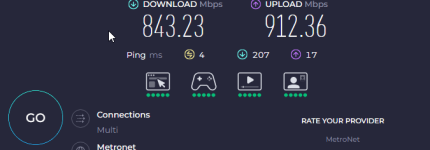

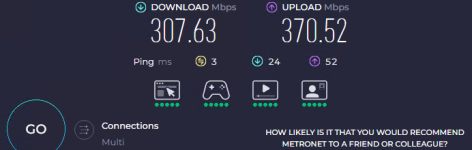

"You're using 'old' hardware for a relatively modern OS: i440fx." These machines were all migrated from ESXi, so what platform (machine type) would you recommend setting them to instead? I hear you on true LACP and that it balances better under a multithreaded load. To be clear, I never expect more than my current network capacity of 1 Gbps or my 1 Gbps Internet connection. But when I use the same test method and the same server locations, I would expect all VMs to perform the same. The last server I posted gets half the throughput on the same host, and that makes no sense to me.

I have 27 years of IT experience, so I am not new to IT, but I am brand new to Proxmox. The real question is how to configure each guest properly after a successful migration from ESXi. I also have FreeBSD running here, and that works great out of the box with the virtio hardware.

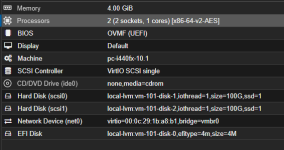

The issue you are seeing is not related to LACP and not caused by the physical network. What you are running into is a VM platform and topology problem that becomes visible after an ESXi-to-Proxmox migration.

The i440fx machine type is a legacy platform that mainly exists for compatibility. It works, but it is not a good fit for modern operating systems and high-performance virtio devices: it uses a legacy PCI layout instead of native PCIe, has less efficient interrupt routing and does not scale as well with modern kernels and drivers. For current Windows, Linux and BSD guests, q35 is generally the better choice in Proxmox because it provides native PCIe, better MSI/MSI-X interrupt handling and typically better performance and stability for virtio-net and virtio-scsi. After a migration, switching from i440fx to q35 can resolve otherwise unexplained performance differences between VMs that look identical.

Another important factor is vCPU topology and NUMA behavior. On a host with more than one NUMA node (typically a multi-socket server), a VM configured with multiple sockets but without an explicit, correct NUMA layout can end up with its vCPUs running on one node while the virtio-net interrupts and memory allocations land on another. The resulting cross-NUMA memory access adds latency and can substantially reduce effective network throughput, in some cases by half, even though CPU usage looks normal. This alone can explain why one VM reaches close to 1 Gbps while another one on the same host tops out around 500 Mbps.
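
Whether cross-node placement can matter at all depends on how many NUMA nodes the host actually has; `lscpu` or `numactl --hardware` on the Proxmox host will tell you. The Python sketch below is a hypothetical helper, not a Proxmox tool. It assumes a Linux host exposing the standard sysfs layout under `/sys/devices/system/node/`, where each `nodeN/cpulist` file holds a range list such as `0-7,16-23`, and it maps each node to the CPUs it owns.

```python
from pathlib import Path


def parse_cpulist(text: str) -> list[int]:
    """Expand a Linux cpulist string such as "0-3,8-11" into a sorted list of CPU ids."""
    cpus: set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue  # memory-only nodes have an empty cpulist
        if "-" in part:
            start, end = part.split("-")
            cpus.update(range(int(start), int(end) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def host_numa_nodes(sysfs_root: str = "/sys/devices/system/node") -> dict[int, list[int]]:
    """Map each host NUMA node id to its CPUs; returns {} if the sysfs directory is missing."""
    nodes: dict[int, list[int]] = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        return nodes
    # Directory names look like node0, node1, ...; sort numerically by the suffix.
    for node_dir in sorted(root.glob("node[0-9]*"), key=lambda p: int(p.name[4:])):
        cpulist = node_dir / "cpulist"
        if cpulist.is_file():
            nodes[int(node_dir.name[4:])] = parse_cpulist(cpulist.read_text())
    return nodes


if __name__ == "__main__":
    nodes = host_numa_nodes()
    if len(nodes) <= 1:
        print("Single NUMA node (or none detected): cross-node placement is not the bottleneck.")
    else:
        for node_id, cpus in nodes.items():
            print(f"node{node_id}: {len(cpus)} CPUs {cpus}")
```

If only one node is reported, the NUMA explanation can be ruled out and the machine type and interrupt handling become the more likely suspects.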

The fact that your FreeBSD VM performs well with virtio out of the box is an important hint: it indicates that the host networking, bonding and switch configuration are fine. Virtio works best when combined with a modern machine type such as q35, proper MSI-X interrupt support and a sensible CPU layout. Combining i440fx with a multi-socket VM configuration can leave virtio-net interrupt-bound or NUMA-limited.
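
To check whether virtio-net is interrupt-bound inside a Linux guest, you can look at how the virtio queue interrupts are spread across vCPUs in `/proc/interrupts`. The sketch below is illustrative only: the function names are hypothetical, it applies to Linux guests (not Windows), and it assumes the usual layout of a `CPU0 CPU1 ...` header row followed by lines whose last field is the device name, for example `virtio0-input.0`.

```python
from pathlib import Path


def virtio_irq_counts(proc_interrupts: str) -> dict[str, list[int]]:
    """Per-vCPU interrupt counts for each virtio line in /proc/interrupts text."""
    lines = proc_interrupts.splitlines()
    if not lines:
        return {}
    ncpus = len(lines[0].split())  # header row lists CPU0 CPU1 ...
    counts: dict[str, list[int]] = {}
    for line in lines[1:]:
        fields = line.split()
        # Expected layout: IRQ id, one count per vCPU, chip/type info, device name last.
        if "virtio" not in line or len(fields) < ncpus + 2:
            continue
        counts[fields[-1]] = [int(x) for x in fields[1:1 + ncpus]]
    return counts


def busiest_cpu_share(per_cpu: list[int]) -> float:
    """Fraction of a queue's interrupts handled by its busiest vCPU (0.0 if none yet)."""
    total = sum(per_cpu)
    return max(per_cpu) / total if total else 0.0


if __name__ == "__main__":
    path = Path("/proc/interrupts")
    if path.exists():
        for name, per_cpu in virtio_irq_counts(path.read_text()).items():
            print(f"{name}: {busiest_cpu_share(per_cpu):.0%} on busiest vCPU, counts={per_cpu}")
```

A receive queue whose interrupts land almost entirely on one vCPU, while that vCPU sits near 100% during a speed test, points toward the interrupt-bound case. On a single-vCPU guest the share is trivially 100% and tells you nothing.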

As a general baseline after an ESXi migration, adjust each VM to use:

- the q35 machine type
- a single socket with multiple cores
- NUMA disabled, unless it is explicitly required and carefully configured
- CPU type set to host
- virtio for network devices (Windows guests need the VirtIO drivers installed before switching)

Once these changes are applied, retesting usually shows that the unexplained “half throughput” problem disappears.
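
The baseline described above can also be checked per VM before and after making changes. This is a minimal Python sketch under stated assumptions, not an official Proxmox tool: it reads the `key: value` format of `/etc/pve/qemu-server/<vmid>.conf` (the same format `qm config <vmid>` prints), stops at the first `[snapshot]` section, treats a missing `machine` key as the i440fx default, and flags each setting that differs from the baseline. The function names are hypothetical.

```python
import sys


def parse_vm_conf(text: str) -> dict[str, str]:
    """Parse the active section of a Proxmox qemu-server config (key: value lines)."""
    conf: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("["):  # snapshot/pending sections follow the active config
            break
        if not line or line.startswith("#") or ":" not in line:
            continue  # skip blank lines and '#' description lines
        key, value = line.split(":", 1)
        conf[key.strip()] = value.strip()
    return conf


def baseline_findings(conf: dict[str, str]) -> list[str]:
    """List the settings that differ from the post-migration baseline."""
    findings: list[str] = []

    machine = conf.get("machine", "")  # no 'machine' key means the i440fx default
    if "q35" not in machine:
        findings.append(f"machine: {machine or 'default (i440fx)'} -> q35")

    if int(conf.get("sockets", "1")) > 1:
        findings.append(f"sockets: {conf['sockets']} -> 1 (move vCPUs to cores)")

    if conf.get("numa", "0") == "1":
        findings.append("numa: 1 -> 0 unless NUMA is deliberately configured")

    cpu_type = conf.get("cpu", "").split(",")[0].removeprefix("cputype=")
    if cpu_type != "host":
        findings.append(f"cpu: {cpu_type or 'default'} -> host")

    for key, value in sorted(conf.items()):
        if key.startswith("net") and key[3:].isdigit():
            model = value.split(",")[0].split("=")[0]  # 'vmxnet3' from 'vmxnet3=<MAC>,...'
            if model != "virtio":
                findings.append(f"{key}: {model} -> virtio")

    return findings


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8") as fh:
        for finding in baseline_findings(parse_vm_conf(fh.read())):
            print(finding)
```

For example, `python3 check_baseline.py /etc/pve/qemu-server/100.conf` on the host would print one line per deviation; the script name and VM ID 100 are placeholders. Comparing the output for the fast and the slow VM is a quick way to spot which of these settings they differ in.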