Good evening,

Today, after a year, I decided to upgrade my Proxmox installation.

I was running 8.0.x (x was 3 or so), and since this is the only time of the year I can touch the server, I went ahead with the update.

After the update, however, the LVM-thin pool holding the VM images was gone.

I've tried everything I found on the forum to fix it, but nothing worked.

This is the state under the new kernel (6.8.12-1-pve):

Code:

Linux proxima 6.8.12-1-pve #1 SMP PREEMPT_DYNAMIC PMX 6.8.12-1 (2024-08-05T16:17Z) x86_64

The programs included with the Debian GNU/Linux system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent

permitted by applicable law.

Last login: Sat Aug 10 16:40:47 2024

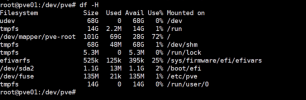

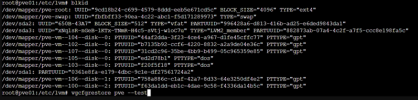

root@proxima:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

sda 8:0 0 223.6G 0 disk

|-sda1 8:1 0 1007K 0 part

|-sda2 8:2 0 1G 0 part /boot/efi

`-sda3 8:3 0 222.6G 0 part

|-pve-swap 252:0 0 8G 0 lvm [SWAP]

`-pve-root 252:1 0 148.9G 0 lvm /

sdb 8:16 0 3.6T 0 disk

`-sdb1 8:17 0 3.6T 0 part /mnt/backup_disk

root@proxima:~# lvscan

ACTIVE '/dev/pve/swap' [8.00 GiB] inherit

ACTIVE '/dev/pve/root' [<148.93 GiB] inherit

root@proxima:~# ls /dev/

autofs cuse full i2c-0 log loop7 null pve sdb stdout tty13 tty21 tty3 tty38 tty46 tty54 tty62 ttyS12 ttyS20 ttyS29 ttyS9 vcs1 vcsa3 vcsu5 zero

block disk fuse i2c-1 loop-control mapper nvram random sdb1 tpm0 tty14 tty22 tty30 tty39 tty47 tty55 tty63 ttyS13 ttyS21 ttyS3 ttyprintk vcs2 vcsa4 vcsu6 zfs

bsg dm-0 gpiochip0 i2c-2 loop0 mcelog port rfkill sg0 tpmrm0 tty15 tty23 tty31 tty4 tty48 tty56 tty7 ttyS14 ttyS22 ttyS30 udmabuf vcs3 vcsa5 vfio

btrfs-control dm-1 hidraw0 i2c-3 loop1 megaraid_sas_ioctl_node ppp rtc sg1 tty tty16 tty24 tty32 tty40 tty49 tty57 tty8 ttyS15 ttyS23 ttyS31 uhid vcs4 vcsa6 vga_arbiter

bus dma_heap hidraw1 initctl loop2 mem psaux rtc0 shm tty0 tty17 tty25 tty33 tty41 tty5 tty58 tty9 ttyS16 ttyS24 ttyS4 uinput vcs5 vcsu vhci

char dri hidraw2 input loop3 mqueue ptmx sda snapshot tty1 tty18 tty26 tty34 tty42 tty50 tty59 ttyS0 ttyS17 ttyS25 ttyS5 urandom vcs6 vcsu1 vhost-net

console ecryptfs hpet ipmi0 loop4 mtd0 ptp0 sda1 snd tty10 tty19 tty27 tty35 tty43 tty51 tty6 ttyS1 ttyS18 ttyS26 ttyS6 userfaultfd vcsa vcsu2 vhost-vsock

core fb0 hugepages kmsg loop5 mtd0ro ptp1 sda2 stderr tty11 tty2 tty28 tty36 tty44 tty52 tty60 ttyS10 ttyS19 ttyS27 ttyS7 userio vcsa1 vcsu3 watchdog

cpu_dma_latency fd hwrng kvm loop6 net pts sda3 stdin tty12 tty20 tty29 tty37 tty45 tty53 tty61 ttyS11 ttyS2 ttyS28 ttyS8 vcs vcsa2 vcsu4 watchdog0

root@proxima:~#

But luckily, if I boot the old kernel, everything works just fine:

Code:

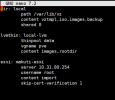

Linux proxima 6.2.16-20-pve #1 SMP PREEMPT_DYNAMIC PMX 6.2.16-20 (2023-12-01T13:17Z) x86_64

The programs included with the Debian GNU/Linux system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent

permitted by applicable law.

Last login: Sat Aug 10 16:26:00 2024 from 192.168.1.23

root@proxima:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

sda 8:0 0 223.6G 0 disk

|-sda1 8:1 0 1007K 0 part

|-sda2 8:2 0 1G 0 part /boot/efi

`-sda3 8:3 0 222.6G 0 part

|-pve-swap 253:0 0 8G 0 lvm [SWAP]

`-pve-root 253:1 0 148.9G 0 lvm /

sdb 8:16 0 837.3G 0 disk

|-vmstorage-vmstorage_tmeta 253:2 0 8.4G 0 lvm

| `-vmstorage-vmstorage-tpool 253:4 0 820.4G 0 lvm

| |-vmstorage-vmstorage 253:5 0 820.4G 1 lvm

| |-vmstorage-vm--100--disk--0 253:6 0 300G 0 lvm

| `-vmstorage-vm--101--disk--0 253:7 0 20G 0 lvm

`-vmstorage-vmstorage_tdata 253:3 0 820.4G 0 lvm

`-vmstorage-vmstorage-tpool 253:4 0 820.4G 0 lvm

|-vmstorage-vmstorage 253:5 0 820.4G 1 lvm

|-vmstorage-vm--100--disk--0 253:6 0 300G 0 lvm

`-vmstorage-vm--101--disk--0 253:7 0 20G 0 lvm

sdc 8:32 0 3.6T 0 disk

`-sdc1 8:33 0 3.6T 0 part /mnt/backup_disk

root@proxima:~# lvscan

ACTIVE '/dev/vmstorage/vmstorage' [820.39 GiB] inherit

ACTIVE '/dev/vmstorage/vm-100-disk-0' [300.00 GiB] inherit

ACTIVE '/dev/vmstorage/vm-101-disk-0' [20.00 GiB] inherit

ACTIVE '/dev/pve/swap' [8.00 GiB] inherit

ACTIVE '/dev/pve/root' [<148.93 GiB] inherit

root@proxima:~# ls /dev/

autofs cuse dm-7 hidraw0 i2c-3 loop1 megaraid_sas_ioctl_node ppp rtc sg0 stdout tty13 tty21 tty3 tty38 tty46 tty54 tty62 ttyS12 ttyS20 ttyS29 ttyS9 vcs1 vcsa3 vcsu5 watchdog0

block disk dma_heap hidraw1 initctl loop2 mem psaux rtc0 sg1 tpm0 tty14 tty22 tty30 tty39 tty47 tty55 tty63 ttyS13 ttyS21 ttyS3 ttyprintk vcs2 vcsa4 vcsu6 zero

bsg dm-0 dri hidraw2 input loop3 mqueue ptmx sda sg2 tpmrm0 tty15 tty23 tty31 tty4 tty48 tty56 tty7 ttyS14 ttyS22 ttyS30 udmabuf vcs3 vcsa5 vfio zfs

btrfs-control dm-1 ecryptfs hpet ipmi0 loop4 mtd0 ptp0 sda1 sg3 tty tty16 tty24 tty32 tty40 tty49 tty57 tty8 ttyS15 ttyS23 ttyS31 uhid vcs4 vcsa6 vga_arbiter

bus dm-2 fb0 hugepages kmsg loop5 mtd0ro ptp1 sda2 shm tty0 tty17 tty25 tty33 tty41 tty5 tty58 tty9 ttyS16 ttyS24 ttyS4 uinput vcs5 vcsu vhci

char dm-3 fd hwrng kvm loop6 net pts sda3 snapshot tty1 tty18 tty26 tty34 tty42 tty50 tty59 ttyS0 ttyS17 ttyS25 ttyS5 urandom vcs6 vcsu1 vhost-net

console dm-4 full i2c-0 log loop7 null pve sdb snd tty10 tty19 tty27 tty35 tty43 tty51 tty6 ttyS1 ttyS18 ttyS26 ttyS6 userfaultfd vcsa vcsu2 vhost-vsock

core dm-5 fuse i2c-1 loop-control mapper nvram random sdc stderr tty11 tty2 tty28 tty36 tty44 tty52 tty60 ttyS10 ttyS19 ttyS27 ttyS7 userio vcsa1 vcsu3 vmstorage

cpu_dma_latency dm-6 gpiochip0 i2c-2 loop0 mcelog port rfkill sdc1 stdin tty12 tty20 tty29 tty37 tty45 tty53 tty61 ttyS11 ttyS2 ttyS28 ttyS8 vcs vcsa2 vcsu4 watchdog

root@proxima:~#

For now I've pinned the old kernel, so I can restore the VMs and everything is "good". But honestly, I would like to know why this is happening...

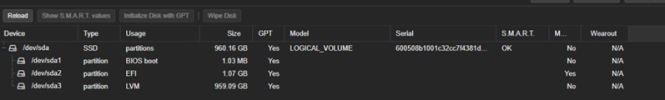
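For anyone who ends up here with the same symptom: pinning is normally done with proxmox-boot-tool. What follows is only a minimal sketch, assuming the version string from the login banner above. The wrapper name pin_kernel is made up for illustration, and it prints a note instead of acting when the tool is not installed.

```shell
# Kernel that still shows the vmstorage pool, taken from the login banner above.
GOOD_KERNEL="6.2.16-20-pve"

# pin_kernel is a hypothetical helper name, not part of Proxmox.
pin_kernel() {
    if command -v proxmox-boot-tool >/dev/null 2>&1; then
        # Show the kernels the boot tool knows about, then pin the given one.
        proxmox-boot-tool kernel list
        proxmox-boot-tool kernel pin "$1"
    else
        # Not a Proxmox host (or tool missing): only report what would be done.
        echo "proxmox-boot-tool not found; would pin $1"
    fi
}

# Example usage (as root on the Proxmox host):
#   pin_kernel "$GOOD_KERNEL"
# To undo it later:
#   proxmox-boot-tool kernel unpin
```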

The machine is a Lenovo ThinkSystem ST250 (7Y45) and the vmstorage pool is mapped on a RAID 1 volume.
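That RAID 1 volume appears to be the 837.3G disk that lsblk lists only under 6.2.16-20-pve. To compare the two boots without eyeballing the listings, something like the following could be used. It is just a sketch: the function name and the file paths are made up for the example, and it assumes the default lsblk column layout shown above, where SIZE is the fourth column and TYPE the sixth.

```shell
# Print the sizes of whole disks that appear in the first lsblk listing
# but have no same-sized counterpart in the second one.
# Sizes are compared instead of names because sdX names shift between boots.
missing_disks() {
    # $1: lsblk output saved under the working kernel (hypothetical path)
    # $2: lsblk output saved under the failing kernel (hypothetical path)
    awk '
        $6 != "disk" { next }                      # keep whole disks only
        FILENAME == ARGV[1] { seen[$4]++; next }   # failing kernel: count sizes
        seen[$4] > 0 { seen[$4]--; next }          # working kernel: size matched
        { print $4 }                               # working kernel: no match
    ' "$2" "$1"
}

# Example usage (run the first line once under each kernel, then compare):
#   lsblk > /root/lsblk-$(uname -r).txt
#   missing_disks /root/lsblk-6.2.16-20-pve.txt /root/lsblk-6.8.12-1-pve.txt
```

With the two listings posted above, the only size without a match is 837.3G, which suggests the whole RAID volume is not exposed as a block device under the new kernel, rather than LVM failing to activate a pool on a disk that is there.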

The RAID controller is a ThinkSystem RAID 530-8i PCIe 12Gb Adapter, but it is recognized as:

Code:

04:00.0 RAID bus controller: Broadcom / LSI MegaRAID Tri-Mode SAS3408 (rev 01)

Thanks a lot in advance.

Best regards,

Giacomo