Hi community, I need some help with improving MySQL performance.

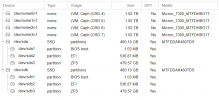

We recently migrated a MySQL DB (about 20 GB) from bare metal to a VM on Proxmox.

Shortly afterwards, the DBA team started complaining about performance issues (slow queries and overall slowness).

They have also run a variety of tests using sysbench, comparing bare-metal performance against a test VM.

The sysbench results on the VM are extremely bad (150K QPS on bare metal vs 1.5K QPS on the VM).

We have had issues with Ceph before, so we were naturally inclined to move away from it.

The test VM was moved to the local-zfs volume (a mirrored pair of SSDs that PVE also boots from). Side note: moving the VM disk from Ceph to local-zfs caused random reboots.

The sysbench results were better there, but still far off the physical box (150K vs 4.5K QPS).
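To put the gap in numbers, here is a quick sketch of the slowdown factors implied by the QPS figures above. The labels are my reading of the setup (the first VM result is assumed to be from the Ceph-backed disk), not something sysbench reports.

```python
# QPS figures quoted in this post; the storage labels are assumptions.
BARE_METAL_QPS = 150_000
VM_QPS = {
    "VM (original disk, assumed Ceph)": 1_500,
    "VM on local-zfs": 4_500,
}


def slowdown(baseline_qps: float, vm_qps: float) -> float:
    """How many times slower the VM is than the baseline."""
    return baseline_qps / vm_qps


for label, qps in VM_QPS.items():
    print(f"{label}: {slowdown(BARE_METAL_QPS, qps):.0f}x slower than bare metal")
```

So local-zfs roughly tripled throughput, but the VM is still well over an order of magnitude behind the physical box.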

We tested every VM option we could think of (balloon=0, NUMA=1, cache=writeback, iothread=1 with VirtIO SCSI single, SSD=1, discard=on), with no noticeable difference.
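For anyone who wants to reproduce that combination, here is a minimal sketch that assembles the equivalent `qm set` command. The VM ID `100`, the volume name `local-zfs:vm-100-disk-0`, and the `scsi0` slot are placeholders for illustration, not our actual values; the script only prints the command and does not run it.

```python
import shlex

# Placeholders for illustration only; substitute your own VM ID and volume.
VMID = "100"
DISK_VOLUME = "local-zfs:vm-100-disk-0"

# Per-disk options from the list above.
DISK_OPTS = {"cache": "writeback", "iothread": "1", "ssd": "1", "discard": "on"}
# VM-level options from the list above.
VM_OPTS = {"balloon": "0", "numa": "1", "scsihw": "virtio-scsi-single"}


def qm_set_command(vmid: str, volume: str, disk_opts: dict, vm_opts: dict) -> str:
    """Build (but do not execute) a `qm set` command line for the given options."""
    drive = ",".join([volume] + [f"{k}={v}" for k, v in disk_opts.items()])
    args = ["qm", "set", vmid]
    for key, value in vm_opts.items():
        args += [f"--{key}", value]
    args += ["--scsi0", drive]
    return shlex.join(args)


print(qm_set_command(VMID, DISK_VOLUME, DISK_OPTS, VM_OPTS))
```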

Next, we tested Ceph IO performance using fio and came across some interesting findings.

This is the fio command we used (a typical RDBMS workload, according to our research):

Code:

command: fio -ioengine=libaio -direct=1 -name=test -bs=4k -iodepth=1 -rw=randwrite -runtime=60 -filename=/dev/sdc -sync=1

Ubuntu 20.04: Jobs: 1 (f=1): [w(1)][100.0%][w=956KiB/s][w=239 IOPS][eta 00m:00s]

CentOS 7: Jobs: 1 (f=1): [w(1)][100.0%][w=32.7MiB/s][w=8363 IOPS][eta 00m:00s]

To our surprise, the Ubuntu server is not doing well at all, and Debian returns identical results.
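As a sanity check on those fio numbers: with `-iodepth=1` and `-sync=1`, each 4k write has to complete before the next one is issued, so the IOPS figure is roughly the inverse of the per-write latency. The sketch below pulls the IOPS out of the two status lines and converts them. The helper names are made up for this post, and the regex is only meant for these two lines.

```python
import re

# Status lines copied from the fio runs above.
STATUS = {
    "Ubuntu 20.04": "Jobs: 1 (f=1): [w(1)][100.0%][w=956KiB/s][w=239 IOPS][eta 00m:00s]",
    "CentOS 7": "Jobs: 1 (f=1): [w(1)][100.0%][w=32.7MiB/s][w=8363 IOPS][eta 00m:00s]",
}

BLOCK_KIB = 4  # matches -bs=4k


def write_iops(line: str) -> int:
    """Extract the write IOPS value from a fio status line."""
    match = re.search(r"\[w=(\d+) IOPS\]", line)
    if match is None:
        raise ValueError(f"no write IOPS found in: {line!r}")
    return int(match.group(1))


def latency_ms(iops: int, iodepth: int = 1) -> float:
    """Mean per-write latency implied by IOPS at a given queue depth."""
    return iodepth / iops * 1000.0


for name, line in STATUS.items():
    iops = write_iops(line)
    print(
        f"{name}: {iops} IOPS -> ~{latency_ms(iops):.2f} ms per sync write, "
        f"{iops * BLOCK_KIB} KiB/s"
    )
```

That works out to roughly 4.2 ms per sync write on Ubuntu versus about 0.12 ms on CentOS, and the bandwidth implied by IOPS times block size matches what fio reported in both cases.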

Our DBA team tested the DB on the CentOS VM but reported no change at all, neither in query run times nor in sysbench results.

Does anyone have experience with this?

What else can we do to improve performance?

Any other testing that may be relevant?

Many thanks!