For the life of me, I cannot figure out how to get LVM working on top of an iSCSI share coming from a TrueNAS box. I believe multipath is working, and I'm able to add the iSCSI storage in Proxmox, but I can't get LVM on top of it; see the error below:


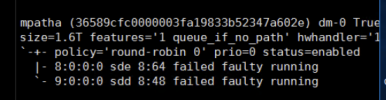

```
mpatha (36589cfc0000003fa19833b52347a602e) dm-0 TrueNAS,iSCSI Disk
size=1.6T features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
`-+- policy='round-robin 0' prio=50 status=active
  |- 9:0:0:0 sde 8:64 active ready running
  `- 8:0:0:0 sdd 8:48 active ready running
```


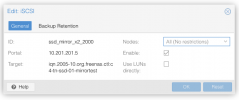

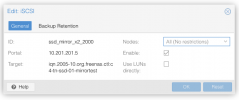

```
root@c4-pve-04:~# iscsiadm -m session
tcp: [5] 10.201.201.5:3260,1 iqn.2005-10.org.freenas.ctl:c4-tn-ssd-01-mirrortest (non-flash)
tcp: [6] 10.202.202.5:3260,1 iqn.2005-10.org.freenas.ctl:c4-tn-ssd-01-mirrortest (non-flash)
root@c4-pve-04:~# pvs
-empty-
root@c4-pve-04:~# lvs
-empty-
root@c4-pve-04:~# vgs
-empty-
root@c4-pve-04:~# lsblk
NAME     MAJ:MIN RM   SIZE RO TYPE  MOUNTPOINT
sda        8:0    0 111.8G  0 disk
├─sda1     8:1    0  1007K  0 part
├─sda2     8:2    0   512M  0 part
└─sda3     8:3    0 111.3G  0 part
sdb        8:16   0 111.8G  0 disk
├─sdb1     8:17   0  1007K  0 part
├─sdb2     8:18   0   512M  0 part
└─sdb3     8:19   0 111.3G  0 part
sdd        8:48   0   1.6T  0 disk
└─mpatha 253:0    0   1.6T  0 mpath
sde        8:64   0   1.6T  0 disk
└─mpatha 253:0    0   1.6T  0 mpath
```
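For comparison, here is a minimal sketch of what a manual CLI equivalent of adding LVM on top of this LUN might look like. It is not a confirmed fix for the error above. It assumes LVM should be created on the multipath map `/dev/mapper/mpatha` rather than on the individual paths `sdd`/`sde`. The VG name `vg_tn_mirrortest`, the storage ID `tn-mirrortest-lvm` and the function name `lvm_on_mpath` are hypothetical placeholders. By default the function only prints the commands; nothing is executed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints (or, with RUN set to empty, runs) the steps to
# put LVM on a multipath device and register it as shared Proxmox storage.
# Names below are placeholders, not taken from the original setup.
lvm_on_mpath() {
  local dev="${1:-/dev/mapper/mpatha}"        # the multipath map, not sdd/sde
  local vg="${2:-vg_tn_mirrortest}"           # hypothetical VG name
  local storage_id="${3:-tn-mirrortest-lvm}"  # hypothetical storage ID
  local run="${RUN-echo}"                     # default: dry run, print only

  # Without -a, wipefs only lists existing signatures (read-only). A leftover
  # partition table or filesystem signature can make pvcreate refuse the disk.
  $run wipefs "$dev"
  # Initialise the physical volume and volume group on the multipath map.
  $run pvcreate "$dev"
  $run vgcreate "$vg" "$dev"
  # Register the VG as shared LVM storage for VM disks and containers.
  $run pvesm add lvm "$storage_id" --vgname "$vg" --content images,rootdir --shared 1
}
```

Running `lvm_on_mpath` prints the four commands for review. `RUN= lvm_on_mpath` would actually execute them, and `pvcreate`/`vgcreate` destroy whatever is on the LUN. With shared LVM, the VG would normally be created once, on a single node.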
