Hi,

I have a new server, bigger, better, more disk space.

I have a cluster with the old and new server.

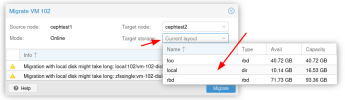

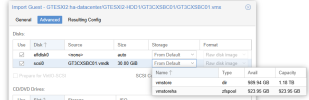

Now I have to migrate the data of one VM, which sits on a disk called "storage", to the new server, where the disk is called "DATA".

How would I do this? If I try the normal right-click option, it says that "storage" does not exist on the new host.

So how can I tell the VM to move the data from "storage" to "DATA"?

Thanks!

EDIT: I wanted to add that making a backup and then moving it is not an option. It's 4 TB, and I have no space for that.

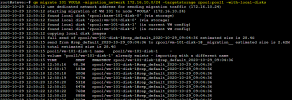

EDIT2: It's from a ZFS store to an LVM thin pool, if that makes a difference.
