So, I'm trying to install Mint, Ubuntu, and Debian in a VM, and none of them work with UEFI. As a result, I settled on Mint (if it helps, it's linuxmint-22-cinnamon-64bit.iso) to test different solutions, but no results so far.

Problem:

Near the end of the installation, Linux asks me to remove the CD-ROM. If I don't, the installer starts again (although it looks like all the data was written: the installer offers to install over the existing OS, meaning the OS data is on the disk but it doesn't boot). If I remove the CD-ROM, boot goes to *>>>Start PXE over IPv4*, the default error shown when the system can't find anything to boot. So, after installing Linux with UEFI, I can't boot the OS.

Things i tried:

With a new VM, I tried disabling *pre-enroll keys*; it doesn't work.

Secure Boot: I'm not sure exactly what I should do here. As I understand it, Secure Boot in the current VM BIOS is disabled by default if the *pre-enroll keys* checkbox is unchecked. I tried enabling *pre-enroll keys* and disabling *Attempt secure boot* in the BIOS. It looks like that doesn't work, or I just don't understand the order: what to select and when, before the install or after. You can also remove the enrolled keys, so I'm not sure whether that works or when I should do it.

SeaBIOS works fine, and it looks like I can work with it, but for now I'd like to solve the problem with UEFI if it is solvable.

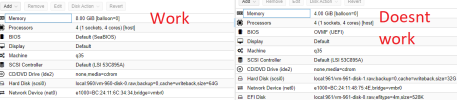

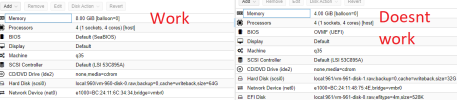

PVE 8.1.3 on LVM, VM machine type q35. I'm not sure what data would be helpful here; screenshots attached.

I also tried the same .iso on Hyper-V (different PC) with Secure Boot disabled. The OS boots about half the time: sometimes it boots, sometimes it doesn't, with no pattern I can see. I don't know, maybe Linux systems don't work at all, or work incorrectly, with UEFI.
