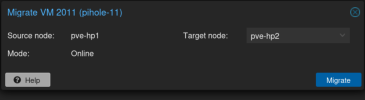

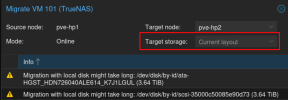

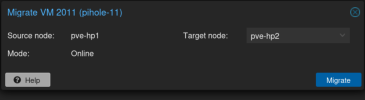

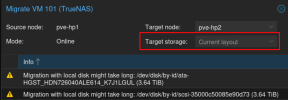

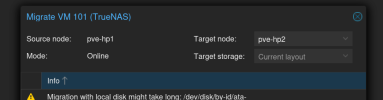

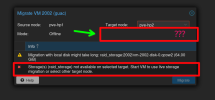

And this is the bit that doesn't make sense. All the UI does is execute the CLI for the user. If it can do it for the Online Migration, why can't it be added to do the Offline Migration? There's a log showing it uses the CLI.

the UI doesn't execute the CLI, it does API calls. and yes, we don't put everything that the API can do on the UI (for UX reasons, or because some API calls might be "dangerous" for unwitting users, or because we consider a feature not stable enough yet for that kind of exposure). offline storage migration falls into the last category: it was initially added as a sort of experiment, and then piece by piece it got more stable. offline migration is now enabled on the UI as long as you don't change storage.

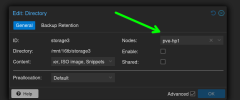

The only reason I had set one of the storages to Shared was because I thought it was required to allow a single share point that is shared between nodes. I.e., the disk(s) are stored on Node-A but the config resides on Node-B. The guest is launched from Node-B, pulls the required information across the network and runs on Node-B, but the bulk storage is on Node-A.

I still don't understand the purpose of Shared as even the non-shared storages showed up on my second node when it joined the Cluster. The folders are all created on PVE-HP2 root system and share the capacity of that drive.

that's what I tried to explain to you in my earlier response. a shared storage requires the underlying storage to present the content in an identical manner on all nodes where it is enabled. a local storage doesn't. if you configure a directory on your host as shared that is actually local, you are lying to PVE and things will break (this is basically what you did and why the migration failed "because the disk didn't exist").
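to make the "identical on all nodes" requirement concrete, here is a minimal sketch. it is only a toy model, not PVE code: the node names, volume names and the `consistent_on_all_nodes` helper are all made up for illustration.

```python
from __future__ import annotations


def consistent_on_all_nodes(views: dict[str, set[str]]) -> bool:
    """Return True if every node sees exactly the same set of volumes.

    `views` maps a (hypothetical) node name to the volumes that node
    sees on the storage. This is the property a storage must have
    before it may be flagged as shared.
    """
    listings = list(views.values())
    return all(listing == listings[0] for listing in listings[1:])


# A real shared storage (e.g. an NFS export): both nodes see the same disk.
nfs_views = {
    "node-a": {"vm-100-disk-0.qcow2"},
    "node-b": {"vm-100-disk-0.qcow2"},
}

# A local directory wrongly flagged as shared: the disk only exists on node-a,
# so a migration that skips copying it will not find it on node-b.
fake_shared_views = {
    "node-a": {"vm-100-disk-0.qcow2"},
    "node-b": set(),
}

nfs_ok = consistent_on_all_nodes(nfs_views)
fake_ok = consistent_on_all_nodes(fake_shared_views)
print(nfs_ok, fake_ok)
```

the second case is the "lying to PVE" situation: the flag says shared, but the nodes do not actually see the same content.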

what you normally do if you have a shared storage:

- set up the shared storage (e.g., configure ceph cluster, create NFS export/CIFS share, ..)

- create a single storage entry for that storage

- you will now see this storage on each node, and the contents and usage should be identical

- you can now migrate using this storage, and PVE will skip the disks on it since they already "exist" on the target node

what you normally do if you don't have a shared storage:

- set up a directory/LVM VG/ZFS pool/.. on each node (e.g., backed by a local disk, mounted on /mnt/pve/foobar on each node. or a ZFS pool + dataset with the same name on each node. or an LVM VG with the same name on each node)

- create a single storage entry for those directories/...

- you will now "see" this storage on each node, but the contents and usage will be different on each node

- you can now migrate using this storage, and PVE will copy the disks as part of the migration from one node to the other
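the difference between the two setups above can be sketched as a per-disk decision. this is a simplified model and not how PVE implements it internally; the storage IDs (`ceph-vm`, `local-dir`), the volume names and the `plan_disk_actions` helper are hypothetical.

```python
from __future__ import annotations


def plan_disk_actions(disks: dict[str, str], shared: dict[str, bool]) -> dict[str, str]:
    """Decide per disk whether a migration would skip or copy it.

    `disks` maps a volume name to the storage ID it lives on.
    `shared` maps a storage ID to its shared flag.
    Disks on shared storage are skipped (they already "exist" on the
    target node); disks on non-shared storage have to be copied.
    """
    return {
        volume: ("skip" if shared[storage] else "copy")
        for volume, storage in disks.items()
    }


# Hypothetical storage entries: one truly shared, one local on each node.
storages = {"ceph-vm": True, "local-dir": False}

# Hypothetical guest with one disk on each storage.
disks = {"vm-100-disk-0": "ceph-vm", "vm-100-disk-1": "local-dir"}

plan = plan_disk_actions(disks, storages)
print(plan)
```

in both setups there is only a single storage entry, so the storage ID stays the same on the source and target node and no storage switch is needed.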

if you want to switch storage while migrating (which is only needed if you have a different storage.cfg entry for each node!), you need to either use the API or CLI, or live migration where this feature is already exposed on the UI. we will expose target storage selection also for offline migration at some point, but that doesn't change that your current configuration was broken and is still non-standard and sub-optimal.
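as a rough illustration of the CLI route, the sketch below only assembles the command line and prints it, it does not run anything. the VMID, node name and storage ID are placeholders, and `build_offline_migrate_cmd` is a made-up helper; check `qm help migrate` on your version for the options it actually supports before relying on `--targetstorage`.

```python
from __future__ import annotations

import shlex


def build_offline_migrate_cmd(
    vmid: int, target_node: str, target_storage: str | None = None
) -> list[str]:
    """Assemble a `qm migrate` command line as an argument list.

    If `target_storage` is given, the disks are mapped to that storage
    on the target node; otherwise the storage ID is left unchanged.
    """
    cmd = ["qm", "migrate", str(vmid), target_node]
    if target_storage is not None:
        cmd += ["--targetstorage", target_storage]
    return cmd


# Placeholder values: VM 100, target node "node-b", target storage "local-b".
cmd = build_offline_migrate_cmd(100, "node-b", "local-b")
print(shlex.join(cmd))
```

with one storage entry that is set up identically on every node, the `target_storage` argument can simply be left out.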