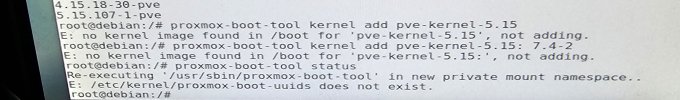

Hello all, I'm back again because I've managed to brick my Proxmox again. I was attempting to finally update my Proxmox 5 setup; I didn't realize it was so outdated. I had numerous issues resolving the repositories, even when just updating 5 to its latest version before attempting the upgrade to 6. I kept trying various things and eventually, after 2 hours, got it to update... something.

After a reboot I am now getting the error message in this post. I found this thread:

https://forum.proxmox.com/threads/zfs-problem-sbin-zpool-undefined-symbol-thread_init.38500/

which describes a similar situation. I was trying to follow these steps after booting into an Ubuntu 16.04 live USB:

zpool import -N 'rpool'

zfs set mountpoint=/mnt rpool/ROOT/pve-1

zfs mount rpool/ROOT/pve-1

mount -t proc /proc /mnt/proc

mount --rbind /dev /mnt/dev

#Enter the chroot

chroot /mnt /bin/bash

source /etc/profile

apt update && apt upgrade && apt dist-upgrade <-- this installed new kernel

zpool status <-- test that zpool can be run ok

zfs set mountpoint=/ rpool/ROOT/pve-1

exit
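For reference, the preparation steps up to entering the chroot can be collected into one small sketch. It only prints the commands so they can be reviewed before anything is run. The function name `print_chroot_plan` and its three parameters are hypothetical, and the dataset name assumes the root filesystem is `rpool/ROOT/pve-1`, as in the `zfs mount` step.

```shell
# Hypothetical helper: print (do not run) the chroot preparation commands.
# Arguments: pool name, root dataset, mount target.
print_chroot_plan() {
    pool="$1"      # e.g. rpool
    dataset="$2"   # e.g. rpool/ROOT/pve-1 (assumed root dataset)
    target="$3"    # e.g. /mnt
    echo "zpool import -N $pool"
    echo "zfs set mountpoint=$target $dataset"
    echo "zfs mount $dataset"
    echo "mount -t proc /proc $target/proc"
    echo "mount --rbind /dev $target/dev"
    echo "chroot $target /bin/bash"
}

print_chroot_plan rpool rpool/ROOT/pve-1 /mnt
```

Printing first is a deliberate choice here: changing the mountpoint of the root dataset is persistent, so it seems safer to read the plan before running it by hand.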

After installing zfsutils-linux, I noticed that rpool was already imported; I found this out when I tried zpool import -n rpool. In fact, most of rpool, including pve-1 and root, was already mounted.

I created directories for /mnt/proc and /mnt/dev and was able to rbind /proc and /dev to them.

My issue now is that when I try chroot /mnt /bin/bash, I get: chroot: failed to run command '/bin/bash': No such file or directory

I wasn't sure where bash should be located. I see it on the USB flash drive's root filesystem at /bin/bash, so I copied it to /mnt/bin/bash to see if that would help. It did not.
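A minimal sketch of a check that might narrow this down. The "No such file or directory" from chroot can refer to the dynamic loader that bash needs, not only to bash itself, which would be consistent with copying the binary alone not helping. The function name `check_chroot_target` is hypothetical, and the loader path is an assumption (the usual one on amd64 Debian/Ubuntu).

```shell
# Hypothetical helper: report what is missing under a chroot target.
# Returns 0 if both bash and the (assumed) loader path are present.
check_chroot_target() {
    target="$1"
    status=0
    if [ ! -x "$target/bin/bash" ]; then
        echo "missing: $target/bin/bash"
        status=1
    fi
    # A dynamically linked bash also needs its loader inside the chroot.
    # Assumed path for amd64; -L also accepts a symlink whose absolute
    # target only resolves from inside the chroot.
    loader="$target/lib64/ld-linux-x86-64.so.2"
    if [ ! -e "$loader" ] && [ ! -L "$loader" ]; then
        echo "missing: $loader"
        status=1
    fi
    return "$status"
}
```

Running `check_chroot_target /mnt` and comparing with `zfs list -o name,mountpoint,mounted -r rpool` should show whether the root dataset is really what is mounted at /mnt, or whether /mnt is mostly empty directories.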

I apologize in advance for the embarrassment of my failures. I am just hoping to repair my rpool so that it can boot again. Thank you in advance for the help.
