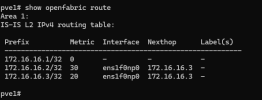

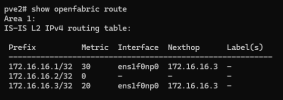

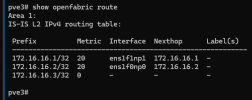

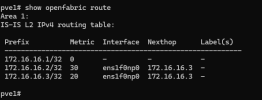

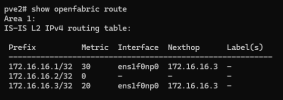

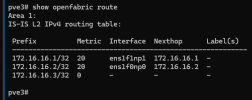

It looks like my route setup wants to route through one of the hosts to reach another. Even though all three 100G DAC cables are attached, it won't connect directly to the other host for some reason.

pve1 => .1

pve2 => .2

pve3 => .3

pve1 port 1 -> pve2 port 1

pve1 port 2 -> pve3 port 1

pve3 port 2 -> pve2 port 2
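
To sanity-check that this cabling really is a full mesh, here is a minimal Python sketch. It only models the three cables listed above and counts hops with a breadth-first search; it does not talk to FRR, and the names `CABLES`, `build_adjacency`, and `hop_count` are made up for illustration. If the cabling is what I think it is, every host should be exactly one hop from every other host.

```python
from collections import deque

# Cabling as listed above: (host, port) <-> (host, port).
# This is a hand-written model of the wiring, not data read from FRR.
CABLES = [
    (("pve1", 1), ("pve2", 1)),
    (("pve1", 2), ("pve3", 1)),
    (("pve3", 2), ("pve2", 2)),
]


def build_adjacency(cables):
    """Turn the cable list into a host -> set-of-neighbors map."""
    adj = {}
    for (host_a, _port_a), (host_b, _port_b) in cables:
        adj.setdefault(host_a, set()).add(host_b)
        adj.setdefault(host_b, set()).add(host_a)
    return adj


def hop_count(adj, src, dst):
    """Return the minimum number of links between src and dst, or None."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for neighbor in sorted(adj.get(node, ())):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    return None


if __name__ == "__main__":
    adj = build_adjacency(CABLES)
    hosts = sorted(adj)
    for src in hosts:
        for dst in hosts:
            if src != dst:
                print(f"{src} -> {dst}: {hop_count(adj, src, dst)} hop(s)")
```

So on paper the direct path exists for every pair, which is why a route going through a third host looks wrong to me.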

This is my first time using FRR at all. I understand the concept, but I don't see why it's not realizing there is a lower-weight path to get to the .1 server from the

I have switched the cables around a ton (waiting about 5 minutes between each move).

I followed the guide here (https://pve.proxmox.com/wiki/Full_Mesh_Network_for_Ceph_Server#Routed_Setup_.28with_Fallback.29).

Any help would be appreciated.
