I'm running the 'current latest' Proxmox (stock OVH server install), version 5.2-7, with about 10 LXC VMs on the physical host. It was initially set up with ~5 VMs, and I added 6 more roughly a week ago. 5 of the 6 new VMs were deployed from a template/copy made on a slightly older Proxmox 5 host the client has at their office. The idea was to start from 5 identical copies in terms of VM content and then customize them, so they all run in parallel doing more or less the same sort of thing.

Network is setup in what I call the 'typical OVH proxmox way'

-- there is a dummy interface

-- setup with vmbr1

-- and I've got 192.168.95.1 as the private IP associated on proxmox hardware node

-- and LXC Vms are with an eth interface into vmbr1, and use 192.168.95.1 as their gateway. The proxmox physical host does NAT firewall so that the LXC VMs can get 'internet access' (ie, download updates etc) easily, but there is no access to the 192.168.95.0/24 network from the public internet / except for when I add port forwarding rules on the physical proxmox node.

-- this works easily and consistently, I have found in the past.

I've got intermittent weird and frustrating behaviour. In that networking for 5 of 6 new VMs spun up last week - simply times out - some of the time. So for example,

- ping from physical node, to 192.168.95.111 (first of the 5 new~identical VMs created) - takes about 10 seconds .. then it works fine. Try again, it may work fine, or maybe punishes me with 5-10 seconds waiting, then works fine.

- ping to any of the 'older' VMs (192.168.95.2,3,4 for example) from the physical host - always works fine

- similarly, ping from inside older LXC Container with IP 192.168.95.2 - to any of the other 'older' VMs (192.168.95.3,4 or the proxmox physical at 192.168.95.1) - always works fine / no issues observed.

- but ping from inside this one to the new containers - can give me grief, the same sort of pause, 5-10 seconds, then works.

The 5 new VMs were all CentOS bases / while all the rest I'm using are either ubuntu or debian. But in theory I don't believe it should really matter what the distro is for the LXC containers.(?!)

If it was a pure simple firewall issue, I would expect pings to <work> or <not work> but not to exhibit this kind of .. pause .. partial fail .. then success .. sort of behaviour.

Sample of fail might look like this:

For reference, my physical proxmox has config thus for network,

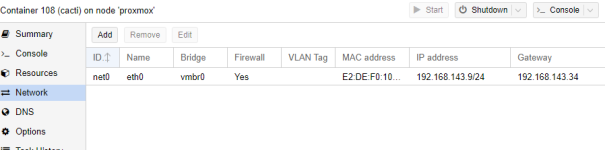

and for reference, here are a couple of reference LXC VM Config files to illustrate their setup,

First one that is older and working fine:

and for a newer one which exhibits the pain/frustrating network behaviour:

I'm curious if anyone has seen this kind of behaviour / has any ideas of things I can try to dig in. It is rather frustrating, rendering the new VMs ~effectively unreliable or at least nearly unusable, because - the VMs need to talk reliably to one another for things to work. (Or at very least talk to their gateway, the physical proxmox node IP 192.168.95.1) for port forward traffic to not stall and time out.

Many thanks for reading this far/ and any comments suggests pointers are greatly appreciated.

Tim

The network is set up in what I call the 'typical OVH Proxmox way':

-- there is a dummy interface

-- it is the bridge port of vmbr1

-- and I've got 192.168.95.1 as the private IP assigned on the Proxmox hardware node

-- and the LXC VMs have an eth interface in vmbr1 and use 192.168.95.1 as their gateway. The Proxmox physical host does NAT firewalling so that the LXC VMs can easily get 'internet access' (i.e. download updates etc.), but there is no access to the 192.168.95.0/24 network from the public internet, except where I add port forwarding rules on the physical Proxmox node.

-- in the past I have found this to work easily and consistently.

I'm seeing intermittent, weird and frustrating behaviour: networking for 5 of the 6 new VMs spun up last week simply times out some of the time. For example:

- ping from the physical node to 192.168.95.111 (the first of the 5 new, near-identical VMs) takes about 10 seconds, then works fine. Try again and it may work straight away, or it may punish me with 5-10 seconds of waiting before it works.

- ping from the physical host to any of the 'older' VMs (192.168.95.2, .3, .4 for example) always works fine.

- similarly, ping from inside the older LXC container with IP 192.168.95.2 to any of the other 'older' VMs (192.168.95.3, .4) or to the Proxmox physical host at 192.168.95.1 always works fine, with no issues observed.

- but ping from inside that same container to the new containers can give me grief: the same sort of pause, 5-10 seconds, then it works.

The 5 new VMs are all CentOS-based, while all the rest are either Ubuntu or Debian. In theory, though, I don't believe the distro should really matter for LXC containers. (?!)

If it were purely a firewall issue, I would expect pings to either work or not work, not to show this pause, partial fail, then success sort of behaviour.
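To put numbers on that pause-then-success pattern, saved `ping` output can be summarized with a few lines of Python. This is only a rough sketch: it assumes the Linux iputils output format and the default one-second probe interval, and `summarize_ping` is a name made up for illustration, not part of any tool. The embedded sample is the same failed run that is pasted further down.

```python
import re


def summarize_ping(output: str, interval_s: float = 1.0) -> dict:
    """Summarize a saved Linux `ping` run: overall loss and the initial stall."""
    # Sequence numbers of the probes that actually got a reply.
    seqs = [int(s) for s in re.findall(r"icmp_seq=(\d+)", output)]
    stats = re.search(r"(\d+) packets transmitted, (\d+) received", output)
    if stats is None:
        raise ValueError("no ping statistics line found")
    sent, received = int(stats.group(1)), int(stats.group(2))
    # Probes sent before the first reply arrived. Assumption: one probe per
    # interval_s seconds, so each lost probe is about one second of "hang".
    lost_before_first = (seqs[0] - 1) if seqs else sent
    return {
        "sent": sent,
        "received": received,
        "loss_pct": 100.0 * (sent - received) / sent if sent else 0.0,
        "lost_before_first_reply": lost_before_first,
        "approx_stall_s": lost_before_first * interval_s,
    }


SAMPLE = """\
PING 192.168.95.111 (192.168.95.111) 56(84) bytes of data.
64 bytes from 192.168.95.111: icmp_seq=7 ttl=64 time=0.052 ms
64 bytes from 192.168.95.111: icmp_seq=8 ttl=64 time=0.052 ms
64 bytes from 192.168.95.111: icmp_seq=9 ttl=64 time=0.042 ms
64 bytes from 192.168.95.111: icmp_seq=10 ttl=64 time=0.061 ms
64 bytes from 192.168.95.111: icmp_seq=11 ttl=64 time=0.054 ms
--- 192.168.95.111 ping statistics ---
11 packets transmitted, 5 received, 54% packet loss, time 10224ms
"""

if __name__ == "__main__":
    print(summarize_ping(SAMPLE))
```

For that run, the first six probes go unanswered and every probe after that gets a reply, so all of the loss sits in one stall of roughly six seconds at the start rather than being spread through the run.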

A sample of the failure might look like this:

Code:

root@outcomesmysql:/var/log# ping 192.168.95.111

(waiting about 5 seconds, then we get....)

PING 192.168.95.111 (192.168.95.111) 56(84) bytes of data.

64 bytes from 192.168.95.111: icmp_seq=7 ttl=64 time=0.052 ms

64 bytes from 192.168.95.111: icmp_seq=8 ttl=64 time=0.052 ms

64 bytes from 192.168.95.111: icmp_seq=9 ttl=64 time=0.042 ms

64 bytes from 192.168.95.111: icmp_seq=10 ttl=64 time=0.061 ms

64 bytes from 192.168.95.111: icmp_seq=11 ttl=64 time=0.054 ms

^C

--- 192.168.95.111 ping statistics ---

11 packets transmitted, 5 received, 54% packet loss, time 10224ms

rtt min/avg/max/mdev = 0.042/0.052/0.061/0.007 ms

For reference, this is the network config on my physical Proxmox host:

Code:

root@ns508208:/etc/network# cat interfaces

# network interface settings; autogenerated

# Please do NOT modify this file directly, unless you know what

# you're doing.

#

# If you want to manage part of the network configuration manually,

# please utilize the 'source' or 'source-directory' directives to do

# so.

# PVE will preserve these directives, but will NOT its network

# configuration from sourced files, so do not attempt to move any of

# the PVE managed interfaces into external files!

auto lo

iface lo inet loopback

iface eth0 inet manual

auto vmbr1

iface vmbr1 inet static

address 192.168.95.1

netmask 255.255.255.0

bridge_ports dummy0

bridge_stp off

bridge_fd 0

auto vmbr0

iface vmbr0 inet static

address 192.95.31.XX

netmask 255.255.255.0

gateway 192.95.31.254

broadcast 192.95.31.255

bridge_ports eth0

bridge_stp off

bridge_fd 0

network 192.95.31.0

iface vmbr0 inet6 static

address XXXX:5300:0060:2e47::

netmask 64

post-up /sbin/ip -f inet6 route add XXXX:5300:0060:2eff:ff:ff:ff:ff dev vmbr0

post-up /sbin/ip -f inet6 route add default via XXXX:5300:0060:2eff:ff:ff:ff:ff

pre-down /sbin/ip -f inet6 route del default via XXXX:5300:0060:2eff:ff:ff:ff:ff

pre-down /sbin/ip -f inet6 route del XXXX:5300:0060:2eff:ff:ff:ff:ff dev vmbr0

root@ns508208:/etc/network#

And for reference, here are a couple of LXC VM config files to illustrate their setup.

First, an older one that is working fine:

Code:

root@ns508208:/etc/pve/lxc# cat 100.conf

#Nginx web proxy public facing VM

#

#ubuntu 16.04 LXC template

#4 vCPU / 4gb ram / 32gb disk

#

#TDC may-17-18

arch: amd64

cores: 4

hostname: nginx

memory: 4096

net0: name=eth0,bridge=vmbr1,gw=192.168.95.1,hwaddr=B2:AA:DB:39:8D:6E,ip=192.168.95.2/32,type=veth

onboot: 1

ostype: ubuntu

rootfs: local:100/vm-100-disk-1.raw,size=32G

swap: 4096

And a newer one which exhibits the painful/frustrating network behaviour:

Code:

root@ns508208:/etc/pve/lxc# cat 111.conf

#192.168.95.111%09outcomes-1

#setup aug.16.18.TDC

#

#OLD LAN IP ORIGIN VM%3A 10.10.40.220

arch: amd64

cores: 2

cpulimit: 1

hostname: outcomestest1.clientname.com

memory: 2048

net0: name=eth0,bridge=vmbr1,gw=192.168.95.1,hwaddr=FA:A7:77:AA:AA:02,ip=192.168.95.111/32,type=veth

onboot: 1

ostype: centos

rootfs: local:111/vm-111-disk-1.raw,size=32G

swap: 2048

root@ns508208:/etc/pve/lxc#

I'm curious whether anyone has seen this kind of behaviour or has any ideas for things I can try in order to dig in. It is rather frustrating, as it renders the new VMs effectively unreliable or at least nearly unusable, because the VMs need to talk reliably to one another for things to work (or at the very least talk to their gateway, the physical Proxmox node IP 192.168.95.1, so that port-forwarded traffic does not stall and time out).
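For what it's worth, the two `net0` lines above can be compared field by field rather than by eye. A minimal Python sketch, assuming the `key=value,key=value` layout seen in the two config files; `parse_net` and `diff_net` are illustrative names, not Proxmox tooling:

```python
def parse_net(line: str) -> dict:
    """Split an LXC 'net0: k=v,k=v,...' config line into a dict of fields."""
    # Split on the first colon only, since the MAC address contains colons too.
    _, _, value = line.partition(":")
    return dict(item.split("=", 1) for item in value.strip().split(","))


def diff_net(a: dict, b: dict) -> dict:
    """Return {field: (value_in_a, value_in_b)} for every field that differs."""
    keys = sorted(set(a) | set(b))
    return {k: (a.get(k), b.get(k)) for k in keys if a.get(k) != b.get(k)}


# net0 lines copied from 100.conf (working) and 111.conf (misbehaving).
OLD_100 = ("net0: name=eth0,bridge=vmbr1,gw=192.168.95.1,"
           "hwaddr=B2:AA:DB:39:8D:6E,ip=192.168.95.2/32,type=veth")
NEW_111 = ("net0: name=eth0,bridge=vmbr1,gw=192.168.95.1,"
           "hwaddr=FA:A7:77:AA:AA:02,ip=192.168.95.111/32,type=veth")

if __name__ == "__main__":
    changes = diff_net(parse_net(OLD_100), parse_net(NEW_111))
    for field, (old, new) in changes.items():
        print(f"{field}: {old} -> {new}")
```

On these two lines only `hwaddr` and `ip` differ; the bridge, gateway, `/32` mask and `veth` type are the same in the working and the failing container.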

Many thanks for reading this far; any comments, suggestions or pointers are greatly appreciated.

Tim