Hey y'all. I've got a question. I hope it isn't a stupid one, but the only way to know is to ask, because Google hasn't been forthcoming.

I'm not an IT guy. By education, I'm an engineer. Computers are my hobby. I know enough to install Proxmox and configure multiple LXCs/VMs from the command line, but I probably only know 5-10% as much as a CompSci or Cybersecurity professional.

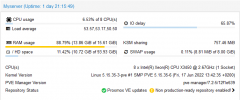

I currently have two applications running on my one node (more applications coming): a Pi-hole running in an LXC, and an Ubuntu server VM that I am setting up for file storage. I don't have a ton of resources to go around (4 cores / 8 threads, 16 GB RAM), so the Pi-hole only has 1 core and 512 MB of RAM. The server has 3 cores and 4 GB. Under normal circumstances, the Pi-hole runs fine, not even close to max resource utilization, because it is only serving a handful of devices. Whenever I do something intensive on a VM (any VM, not just the Ubuntu server), the Pi-hole web interface reports heavy CPU load, and the Pi-hole eventually becomes unresponsive to any and all commands, breaking internet on all devices set to use it. The tasks underway on the VMs (file copying, etc.) are not querying the Pi-hole, so it isn't being overloaded with DNS requests.

To my untrained eye, it looks like VMs are taking resources out from underneath LXCs when there is plenty to go around. How can I go about diagnosing/fixing this?