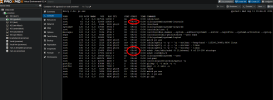

root@ct-adguard:/# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0@if40: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 52:54:00:37:8b:ca brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.10.33/24 brd 192.168.10.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe37:8bca/64 scope link

valid_lft forever preferred_lft forever

root@ct-adguard:/# journalctl -f

-- Journal begins at Sun 2022-08-21 15:16:18 CEST. --

Sep 09 15:29:36 ct-adguard sshd[172]: pam_unix(sshd:auth): authentication failure; logname= uid=0 euid=0 tty=ssh ruser= rhost=192.168.10.41 user=root

Sep 09 15:29:38 ct-adguard sshd[172]: Failed password for root from 192.168.10.41 port 52800 ssh2

Sep 09 15:29:53 ct-adguard sshd[172]: Connection closed by authenticating user root 192.168.10.41 port 52800 [preauth]

Sep 09 15:29:53 ct-adguard systemd[1]: ssh@0-192.168.10.33:22-192.168.10.41:52800.service: Succeeded.

Sep 09 15:30:33 ct-adguard ifup[162]: XMT: Forming Solicit, 126590 ms elapsed.

Sep 09 15:30:33 ct-adguard ifup[162]: XMT: X-- IA_NA 00:37:8b:ca

Sep 09 15:30:33 ct-adguard ifup[162]: XMT: | X-- Request renew in +3600

Sep 09 15:30:33 ct-adguard ifup[162]: XMT: | X-- Request rebind in +5400

Sep 09 15:30:33 ct-adguard ifup[162]: XMT: Solicit on eth0, interval 110330ms.

Sep 09 15:30:33 ct-adguard dhclient[162]: XMT: Solicit on eth0, interval 110330ms.

Sep 09 15:32:24 ct-adguard ifup[162]: XMT: Forming Solicit, 236920 ms elapsed.

Sep 09 15:32:24 ct-adguard ifup[162]: XMT: X-- IA_NA 00:37:8b:ca

Sep 09 15:32:24 ct-adguard ifup[162]: XMT: | X-- Request renew in +3600

Sep 09 15:32:24 ct-adguard ifup[162]: XMT: | X-- Request rebind in +5400

Sep 09 15:32:24 ct-adguard ifup[162]: XMT: Solicit on eth0, interval 121880ms.

Sep 09 15:32:24 ct-adguard dhclient[162]: XMT: Solicit on eth0, interval 121880ms.

Sep 09 15:33:24 ct-adguard systemd[1]: networking.service: start operation timed out. Terminating.

Sep 09 15:33:24 ct-adguard systemd[1]: networking.service: Main process exited, code=exited, status=1/FAILURE

Sep 09 15:33:24 ct-adguard ifup[69]: Got signal Terminated, terminating...

Sep 09 15:33:24 ct-adguard ifup[69]: ifup: failed to bring up eth0

Sep 09 15:33:24 ct-adguard systemd[1]: networking.service: Failed with result 'timeout'.

Sep 09 15:33:24 ct-adguard systemd[1]: Failed to start Raise network interfaces.

Sep 09 15:33:24 ct-adguard systemd[1]: Reached target Network.

Sep 09 15:33:24 ct-adguard systemd[1]: Reached target Network is Online.

Sep 09 15:33:24 ct-adguard systemd[1]: Starting AdGuard Home: Network-level blocker...

Sep 09 15:33:24 ct-adguard systemd[1]: Starting Postfix Mail Transport Agent (instance -)...

Sep 09 15:33:24 ct-adguard systemd[1]: Starting Permit User Sessions...

Sep 09 15:33:24 ct-adguard systemd[1]: Finished Permit User Sessions.

Sep 09 15:33:24 ct-adguard systemd[1]: Started Console Getty.

Sep 09 15:33:24 ct-adguard systemd[1]: Started Container Getty on /dev/tty1.

Sep 09 15:33:24 ct-adguard systemd[1]: Started Container Getty on /dev/tty2.

Sep 09 15:33:24 ct-adguard systemd[1]: Reached target Login Prompts.

Sep 09 15:33:24 ct-adguard systemd[1]: Started AdGuard Home: Network-level blocker.

Sep 09 15:33:25 ct-adguard postfix/postfix-script[342]: starting the Postfix mail system

Sep 09 15:33:25 ct-adguard postfix/master[344]: daemon started -- version 3.5.13, configuration /etc/postfix

Sep 09 15:33:25 ct-adguard systemd[1]: Started Postfix Mail Transport Agent (instance -).

Sep 09 15:33:25 ct-adguard systemd[1]: Starting Postfix Mail Transport Agent...

Sep 09 15:33:25 ct-adguard systemd[1]: Finished Postfix Mail Transport Agent.

Sep 09 15:33:25 ct-adguard systemd[1]: Reached target Multi-User System.

Sep 09 15:33:25 ct-adguard systemd[1]: Reached target Graphical Interface.

Sep 09 15:33:25 ct-adguard systemd[1]: Starting Update UTMP about System Runlevel Changes...

Sep 09 15:33:25 ct-adguard systemd[1]: systemd-update-utmp-runlevel.service: Succeeded.

Sep 09 15:33:25 ct-adguard systemd[1]: Finished Update UTMP about System Runlevel Changes.

Sep 09 15:33:25 ct-adguard systemd[1]: Startup finished in 5min 1.239s.
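One possible reading of the journal above, offered as an interpretation rather than a confirmed diagnosis: `dhclient` keeps sending DHCPv6 Solicit messages on `eth0`, nothing in the excerpt shows a reply, and `networking.service` is then terminated by its start timeout shortly before systemd reports a startup of about five minutes. The sketch below pulls just those two kinds of events out of a saved journal excerpt. The function name `summarize`, the two regular expressions, and the `JOURNAL` sample (four lines copied from the output above) are illustrative assumptions, not part of any tool.

```python
import re

# Four lines copied verbatim from the journal output above.
JOURNAL = """\
Sep 09 15:30:33 ct-adguard dhclient[162]: XMT: Solicit on eth0, interval 110330ms.
Sep 09 15:32:24 ct-adguard dhclient[162]: XMT: Solicit on eth0, interval 121880ms.
Sep 09 15:33:24 ct-adguard systemd[1]: networking.service: start operation timed out. Terminating.
Sep 09 15:33:25 ct-adguard systemd[1]: Startup finished in 5min 1.239s.
"""

# Hypothetical patterns, written only against the line shapes seen in this log.
SOLICIT = re.compile(r"dhclient\[\d+\]: XMT: Solicit on (\S+), interval (\d+)ms")
TIMEOUT = re.compile(r"systemd\[1\]: (\S+): start operation timed out")


def summarize(journal_text):
    """Return (solicits, timed_out_units) found in a journal excerpt.

    solicits is a list of (interface, retransmit_interval_ms) tuples;
    timed_out_units is a list of unit names whose start operation timed out.
    """
    solicits = []
    timed_out = []
    for line in journal_text.splitlines():
        match = SOLICIT.search(line)
        if match:
            solicits.append((match.group(1), int(match.group(2))))
            continue
        match = TIMEOUT.search(line)
        if match:
            timed_out.append(match.group(1))
    return solicits, timed_out


solicits, timed_out = summarize(JOURNAL)
print(solicits)
print(timed_out)
```

Run against the sample, this lists two Solicit retransmissions on `eth0` with growing intervals and one unit, `networking.service`, that hit its start timeout. It only matches text; it does not show why no DHCPv6 reply arrived.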