Today I got the following error/warning when taking a snapshot of my VM:

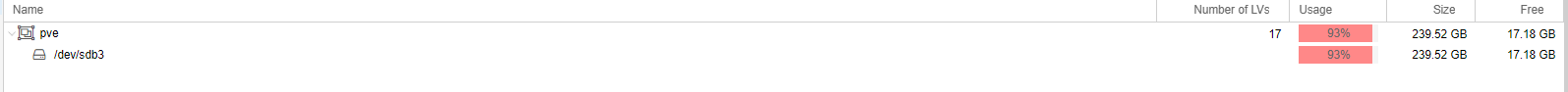

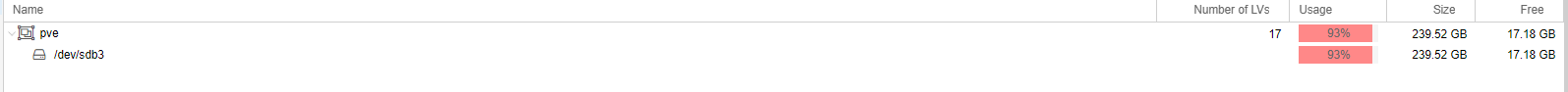

This got me worried, and after some inspection I found that my LVM pool is shown as almost full in the UI under Disks > LVM:

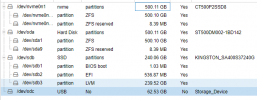

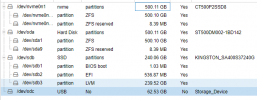

I don't understand why it shows that the disk is almost full, because I have not used any storage. Looking at my disks backs this up.

For those wondering why I even created a volume group: for the remaining root storage I had a choice between a directory and LVM. I chose LVM because it supports snapshots.

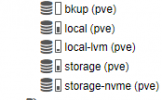

See my disk configuration above.

The output of df --o is in the second code block below.

Now the question:

How do I remove something from LVM so that it is no longer almost full, or make it show the correct storage usage?
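
One way to see how much of a thin pool is really allocated is the `data_percent` field that `lvs` reports. The sketch below only parses such a report; it assumes the JSON layout produced by `lvs --reportformat json --units g --nosuffix -o lv_name,lv_size,data_percent,metadata_percent pve`, and the sample values are hypothetical, not taken from this system.

```python
import json

# Hypothetical sample of the lvs JSON report described above.
# The numbers are made up for illustration and are NOT from this host.
SAMPLE = """
{"report": [{"lv": [
  {"lv_name": "data", "lv_size": "141.00", "data_percent": "12.50", "metadata_percent": "1.20"},
  {"lv_name": "root", "lv_size": "55.00", "data_percent": "", "metadata_percent": ""},
  {"lv_name": "vm-100-disk-0", "lv_size": "32.00", "data_percent": "40.00", "metadata_percent": ""}
]}]}
"""


def thin_usage(report_json: str) -> dict[str, float]:
    """Map LV name to GiB allocated, for LVs that report a data_percent."""
    usage = {}
    for report in json.loads(report_json)["report"]:
        for lv in report.get("lv", []):
            # Assumption: non-thin LVs such as root report an empty data_percent.
            if lv["data_percent"]:
                size_gib = float(lv["lv_size"])
                usage[lv["lv_name"]] = size_gib * float(lv["data_percent"]) / 100
    return usage


for name, gib in thin_usage(SAMPLE).items():
    print(f"{name}: {gib:.2f} GiB allocated")
```

On a real host the sample string would be replaced by the actual command output, which needs root privileges to produce.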

Best Regards,

Code:

WARNING: You have not turned on protection against thin pools running out of space.

WARNING: Set activation/thin_pool_autoextend_threshold below 100 to trigger automatic extension of thin pools before they get full.

Logical volume "vm-100-state-test" created.

WARNING: Sum of all thin volume sizes (<227.48 GiB) exceeds the size of thin pool pve/data and the size of whole volume group (<223.07 GiB).

saving VM state and RAM using storage 'local-lvm'

2.01 MiB in 0s

404.63 MiB in 1s

435.62 MiB in 2s

629.49 MiB in 3s

762.66 MiB in 4s

completed saving the VM state in 4s, saved 783.73 MiB

snapshotting 'drive-scsi0' (local-lvm:vm-100-disk-0)

WARNING: You have not turned on protection against thin pools running out of space.

WARNING: Set activation/thin_pool_autoextend_threshold below 100 to trigger automatic extension of thin pools before they get full.

Logical volume "snap_vm-100-disk-0_test" created.

WARNING: Sum of all thin volume sizes (<259.48 GiB) exceeds the size of thin pool pve/data and the size of whole volume group (<223.07 GiB).

snapshotting 'drive-efidisk0' (local-lvm:vm-100-disk-1)

WARNING: You have not turned on protection against thin pools running out of space.

WARNING: Set activation/thin_pool_autoextend_threshold below 100 to trigger automatic extension of thin pools before they get full.

Logical volume "snap_vm-100-disk-1_test" created.

WARNING: Sum of all thin volume sizes (259.48 GiB) exceeds the size of thin pool pve/data and the size of whole volume group (<223.07 GiB).

TASK OK
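
The "Sum of all thin volume sizes" warning compares the provisioned (virtual) size of all thin volumes and snapshots with the size of the volume group. It says nothing about how much data has actually been written. As a rough sketch, the overcommit ratio can be computed from the last warning in the log; the helper name `parse_overcommit` is made up for this example.

```python
import re

# Last warning line copied from the task log above.
WARNING = (
    "WARNING: Sum of all thin volume sizes (259.48 GiB) exceeds the size of "
    "thin pool pve/data and the size of whole volume group (<223.07 GiB)."
)


def parse_overcommit(line: str) -> tuple[float, float]:
    """Return (sum of thin volume sizes, volume group size) in GiB."""
    # LVM prefixes a size with "<" when the real value is slightly smaller
    # than the rounded number shown, so the prefix is optional here.
    sizes = re.findall(r"\(<?([\d.]+) GiB\)", line)
    if len(sizes) != 2:
        raise ValueError("unexpected warning format")
    provisioned, vg_size = (float(s) for s in sizes)
    return provisioned, vg_size


provisioned, vg_size = parse_overcommit(WARNING)
ratio = provisioned / vg_size
print(f"provisioned {provisioned:.2f} GiB on a {vg_size:.2f} GiB VG, ratio {ratio:.2f}")
```

A ratio above 1 means the pool is overprovisioned, which only becomes a problem once the guests actually write that much data.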


Code:

Filesystem Type Inodes IUsed IFree IUse% 1K-blocks Used Avail Use% File Mounted on

udev devtmpfs 2028553 744 2027809 1% 8114212 0 8114212 0% - /dev

tmpfs tmpfs 2036573 1281 2035292 1% 1629260 1320 1627940 1% - /run

/dev/mapper/pve-root ext4 3653632 86616 3567016 3% 57278576 31226164 23113124 58% - /

tmpfs tmpfs 2036573 99 2036474 1% 8146292 43680 8102612 1% - /dev/shm

tmpfs tmpfs 2036573 23 2036550 1% 5120 0 5120 0% - /run/lock

/dev/sdb2 vfat 0 0 0 - 523248 312 522936 1% - /boot/efi

storage-nvme zfs 587378143 15 587378128 1% 293689088 128 293688960 1% - /storage-nvme

storage-nvme/subvol-102-disk-0 zfs 15595898 26218 15569680 1% 8388608 603776 7784832 8% - /storage-nvme/subvol-102-disk-0

storage-nvme/subvol-101-disk-0 zfs 20747147 146347 20600800 1% 25165824 14865536 10300288 60% - /storage-nvme/subvol-101-disk-0

storage-nvme/subvol-116-disk-0 zfs 351448086 49494 351398592 1% 188743680 13044480 175699200 7% - /storage-nvme/subvol-116-disk-0

storage-nvme/subvol-107-disk-0 zfs 158813263 41087 158772176 1% 83886080 4500096 79385984 6% - /storage-nvme/subvol-107-disk-0

storage-nvme/subvol-114-disk-0 zfs 47666900 41460 47625440 1% 25165824 1353216 23812608 6% - /storage-nvme/subvol-114-disk-0

storage-nvme/subvol-117-disk-0 zfs 48828352 29096 48799256 1% 25165824 766208 24399616 4% - /storage-nvme/subvol-117-disk-0

storage-nvme/subvol-119-disk-0 zfs 39479584 27128 39452456 1% 26214400 6488192 19726208 25% - /storage-nvme/subvol-119-disk-0

storage-nvme/subvol-118-disk-0 zfs 161522148 40524 161481624 1% 83886080 3145344 80740736 4% - /storage-nvme/subvol-118-disk-0

storage-nvme/subvol-103-disk-0 zfs 45964936 34680 45930256 1% 25165824 2200704 22965120 9% - /storage-nvme/subvol-103-disk-0

storage/bkup zfs 438070474 18 438070456 1% 220059136 1024000 219035136 1% - /bkup

storage zfs 438070463 7 438070456 1% 219035264 128 219035136 1% - /storage

storage/subvol-109-disk-0 zfs 81770504 50632 81719872 1% 41943040 1083136 40859904 3% - /storage/subvol-109-disk-0

/dev/fuse fuse 262144 56 262088 1% 131072 32 131040 1% - /etc/pve
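
Note that df only lists mounted filesystems. Thin volumes handed to VMs as block devices are not mounted on the host, so their usage cannot show up here. A small sketch that filters a few rows copied from the output above for the pve volume group illustrates this; the function name `pve_filesystems` is made up for this example.

```python
# Three rows copied from the df output above (same column order as its header).
DF_ROWS = """\
/dev/mapper/pve-root ext4 3653632 86616 3567016 3% 57278576 31226164 23113124 58% - /
/dev/sdb2 vfat 0 0 0 - 523248 312 522936 1% - /boot/efi
storage-nvme zfs 587378143 15 587378128 1% 293689088 128 293688960 1% - /storage-nvme
"""

KIB_PER_GIB = 1024 * 1024


def pve_filesystems(rows: str) -> list[tuple[str, float, float]]:
    """Return (source, size GiB, used GiB) for mounted filesystems on the pve VG."""
    result = []
    for line in rows.splitlines():
        fields = line.split()
        # Column 7 is "1K-blocks" and column 8 is "Used", both in KiB.
        source, size_kib, used_kib = fields[0], int(fields[6]), int(fields[7])
        if source.startswith("/dev/mapper/pve-"):
            result.append((source, size_kib / KIB_PER_GIB, used_kib / KIB_PER_GIB))
    return result


mounted = pve_filesystems(DF_ROWS)
for source, size, used in mounted:
    print(f"{source}: {used:.1f} of {size:.1f} GiB used")
```

Only pve-root appears, so the numbers in df and the numbers in the LVM view describe different things.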