Hi all! First post, great community!

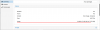

I tried cloning a template today and it failed several times. After each failure I had to delete the failed clone and the storage allocated for it on Local-LVM, but Local-LVM still shows as full, and I can no longer move, clone, or create VMs on it. I'm working on an X3650 server equipped with an M5015 RAID card. Both LVM and Local-LVM are located on a two-disk RAID 1. Sorry if I'm missing something obvious, but I'm out of ideas here.

Code:

proxmox-ve: 6.0-2 (running kernel: 5.0.18-1-pve)
pve-manager: 6.0-6 (running version: 6.0-6/c71f879f)
pve-kernel-5.0: 6.0-6
pve-kernel-helper: 6.0-6
pve-kernel-5.0.18-1-pve: 5.0.18-3
pve-kernel-5.0.15-1-pve: 5.0.15-1
ceph-fuse: 12.2.11+dfsg1-2.1
corosync: 3.0.2-pve2
criu: 3.11-3
glusterfs-client: 5.5-3
ksm-control-daemon: 1.3-1
libjs-extjs: 6.0.1-10
libknet1: 1.10-pve2
libpve-access-control: 6.0-2
libpve-apiclient-perl: 3.0-2
libpve-common-perl: 6.0-4
libpve-guest-common-perl: 3.0-1
libpve-http-server-perl: 3.0-2
libpve-storage-perl: 6.0-7
libqb0: 1.0.5-1
lvm2: 2.03.02-pve3
lxc-pve: 3.1.0-64
lxcfs: 3.0.3-pve60
novnc-pve: 1.0.0-60
proxmox-mini-journalreader: 1.1-1
proxmox-widget-toolkit: 2.0-7
pve-cluster: 6.0-5
pve-container: 3.0-5
pve-docs: 6.0-4
pve-edk2-firmware: 2.20190614-1
pve-firewall: 4.0-7
pve-firmware: 3.0-2
pve-ha-manager: 3.0-2
pve-i18n: 2.0-2
pve-qemu-kvm: 4.0.0-5
pve-xtermjs: 3.13.2-1
qemu-server: 6.0-7
smartmontools: 7.0-pve2
spiceterm: 3.1-1
vncterm: 1.6-1
zfsutils-linux: 0.8.1-pve1

Code:

root@ARCEUS:~# vgs
  VG  #PV #LV #SN Attr   VSize    VFree
  pve   1   7   0 wz--n- <135.47g 16.00g

Code:

root@ARCEUS:~# lvs
  LV                      VG  Attr       LSize   Pool Origin         Data%  Meta%  Move Log Cpy%Sync Convert
  base-100-disk-1         pve Vri---tz-k  20.00g data
  data                    pve twi-aotz-- <75.72g                     94.86  2.81
  root                    pve -wi-ao----  33.75g
  snap_vm-1100-disk-0_FSC pve Vri---tz-k   5.00g data vm-1100-disk-0
  swap                    pve -wi-ao----   8.00g
  vm-1100-disk-0          pve Vwi-aotz--   5.00g data                45.30
  vm-1200-disk-0          pve Vwi-a-tz--   6.00g data                29.93
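
To put rough numbers on the mismatch, here is a small sketch of the arithmetic using only the figures from the lvs output above. It makes some assumptions: sizes are treated as exact GiB (lvs prints "<75.72g", so the pool is slightly smaller), and the two volumes without a Data% value (base-100-disk-1 and the snapshot) are counted at their full virtual size as a worst-case upper bound. The variable and function names are made up for illustration.

```python
# Figures copied from the lvs output; sizes in GiB, Data% as percentages.
POOL_SIZE_GIB = 75.72   # thin pool "data" (lvs prints "<75.72g", so slightly less)
POOL_DATA_PCT = 94.86

# Thin volumes that report a Data% value: name -> (virtual size, Data%).
reported_volumes = {
    "vm-1100-disk-0": (5.00, 45.30),
    "vm-1200-disk-0": (6.00, 29.93),
}

# Volumes with no Data% shown: name -> virtual size.
# Assumption: each can hold at most its full virtual size in the pool.
unreported_volumes = {
    "base-100-disk-1": 20.00,
    "snap_vm-1100-disk-0_FSC": 5.00,
}


def used_gib(size_gib, data_pct):
    """Convert a size and a Data% figure into used GiB."""
    return size_gib * data_pct / 100.0


pool_used = used_gib(POOL_SIZE_GIB, POOL_DATA_PCT)
reported_used = sum(used_gib(size, pct) for size, pct in reported_volumes.values())
unreported_max = sum(unreported_volumes.values())

# Space the pool reports as used that the listed volumes cannot explain,
# even in the worst case for the volumes without a Data% value.
unexplained_min = pool_used - reported_used - unreported_max

print(f"pool used:                {pool_used:.2f} GiB")
print(f"used by reported volumes: {reported_used:.2f} GiB")
print(f"max for other volumes:    {unreported_max:.2f} GiB")
print(f"unexplained (at least):   {unexplained_min:.2f} GiB")
```

Under those assumptions, the pool reports far more used space than the volumes still listed could account for, which matches the symptom described: the storage looks full even though the failed clones were deleted.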