Hello, I'm getting a little confused about live migration.

Do I need Ceph or any kind of special settings to be able to migrate a VM from node1 to node2 while the VM disk is on local storage?

Currently I'm getting this error:

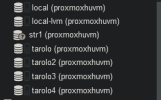

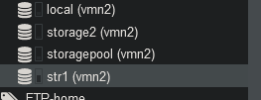

2023-10-12 23:28:16 ERROR: migration aborted (duration 00:00:00): storage 'storage4' is not available on node 'vmn2'

TASK ERROR: migration aborted.
