The upcoming Proxmox VE 6.2 (Q2/2020) will use a Linux kernel based on 5.4. You can test this kernel now; install it with the command below:


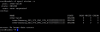

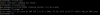

Code:

apt update && apt install pve-kernel-5.4

This version is a Long Term Support (LTS) kernel and will get updates for the remaining Proxmox VE 6.x based releases.
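The new kernel only becomes active after a reboot. As a rough sketch of how one might confirm which kernel is running afterwards, the snippet below checks the output of `uname -r` against the 5.4 series. The helper name `is_54_kernel` is hypothetical and not part of any Proxmox tooling.

```shell
# Hypothetical helper: succeeds if the given kernel release string
# belongs to the 5.4 series (for example "5.4.27-1-pve").
is_54_kernel() {
    case "$1" in
        5.4.*) return 0 ;;
        *)     return 1 ;;
    esac
}

# Check the kernel that is currently running.
running="$(uname -r)"
if is_54_kernel "$running"; then
    echo "Running a 5.4 kernel: $running"
else
    echo "Still on kernel $running; reboot or check the selected boot entry"
fi
```

If the second message appears after a reboot, the older kernel was probably selected at boot.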

You don't need to enable the pvetest repository, but doing so will get you updates to this kernel sooner.
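For anyone who does want pvetest, here is a minimal sketch of the APT source line, assuming a Proxmox VE 6.x system based on Debian 10 "buster". The function name `pvetest_source_line` and the file name in the comments are illustrative choices, not something prescribed by this post.

```shell
# Build the APT source line for the pvetest repository.
# Assumption: Proxmox VE 6.x is based on Debian 10, so the suite defaults to "buster".
pvetest_source_line() {
    printf 'deb http://download.proxmox.com/debian/pve %s pvetest\n' "${1:-buster}"
}

# Print the line for review before changing anything.
pvetest_source_line

# To enable the repository, run as root (the file name is an arbitrary choice):
#   pvetest_source_line > /etc/apt/sources.list.d/pvetest.list
#   apt update
```

Printing the line first keeps the step reversible; removing the file and running `apt update` again disables the repository.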

We invite you to test your hardware with this kernel, and we would be grateful for your feedback.