Hello everyone,

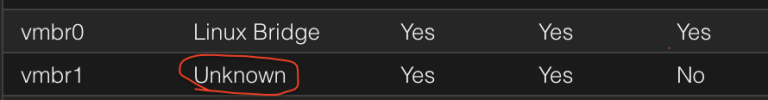

I currently have a failover bond configured for the management interface of my Proxmox instance. Now I am trying to create an LACP interface for the VM network but am having some issues when doing so.

Any time I try to configure LACP, I lose connection to the management interface. I believe I have the configuration on my Netgear GS728TPv2 switch set correctly, so hopefully someone can help me out and point me in the right direction. Below is my Proxmox configuration.

Code:

# Part of LACP interface for VM Network

iface eth0 inet manual

# Part of LACP interface for VM Network

iface eth1 inet manual

# This is the interface that will be configured as LACP for the new VM network

auto bond1

iface bond1 inet manual

bond-slaves eth0 eth1

bond-miimon 100

bond-mode 802.3ad

bond-emit-hash-policy layer2

mtu 900

# This will be the bridge interface for the VM network using bond1

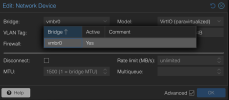

auto vmbr1

iface vmbr1 net static

address 192.168.1.40/24

gateway 192.168.1.1

bridge-ports bond1

bridge-stp off

bridge-fd 0

bridge-vlan-aware yes

bridge-vlans 2-4094For my switch, I have added ports 13 & 14 together to make them a LAG interface and changed the type to LACP. I then added that LAG interface to VLAN 1 on the switch, along with making sure the PVID is set to 1 as well. When implementing the configuration above for the VM network in Proxmox, I lose access to the management interface.
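For anyone trying to reproduce this, one way to see whether the bond actually came up in LACP mode is to read the kernel's bonding status file. This is only a rough sketch: it assumes the bond is named bond1 as in the config above, and `check_lacp` is a hypothetical helper name, not a Proxmox or Netgear tool.

```shell
#!/bin/sh
# Hypothetical helper: report whether a bonding status file shows 802.3ad (LACP).
# Assumed usage on the host: check_lacp /proc/net/bonding/bond1
check_lacp() {
    status_file="$1"

    # Bail out early if the bond does not exist or the file is unreadable.
    if [ ! -r "$status_file" ]; then
        echo "cannot read $status_file" >&2
        return 2
    fi

    # The bonding driver reports the mode on a line such as:
    #   Bonding Mode: IEEE 802.3ad Dynamic link aggregation
    if grep -q '^Bonding Mode: IEEE 802.3ad' "$status_file"; then
        echo "lacp"
    else
        echo "not-lacp"
        return 1
    fi
}
```

Running `check_lacp /proc/net/bonding/bond1` only confirms the mode on the Proxmox side. The same file also lists each slave interface and its link state, which may help when comparing against the LAG status shown on the switch.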

If anyone has any ideas on what I'm missing, I would greatly appreciate it. TIA.