Hi all,

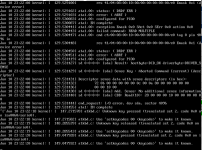

I've been using Proxmox for some time now and I'd like to thank you for all the great work; it's really an exceptionally nice piece of software.

However, from time to time even the best software drives me mad. We have two servers running PVE with KVM guests. The configuration is quite straightforward: the guests use virtio for disk and network interfaces, raw as the disk format, and Debian Squeeze as the guest OS. It works just fine on Machine A.

Code:

libpve-storage-perl 1.0-17
pve-firmware 1.0-11
pve-kernel-2.6.24-12-pve 2.6.24-25
pve-manager 1.8-17
pve-qemu-kvm 0.14.0-3
vzctl 3.0.26-1pve4
proxmox-ve-2.6.24 1.6-26

On Machine B, it's not possible to start any KVM guest migrated from Machine A. The boot process shows "Not a bootable disk" right after the BIOS POST. Creating a new KVM guest on Machine B works, but during OS installation /dev/vda cannot be read, and the Debian installer gets stuck with error messages.

Code:

libpve-storage-perl 1.0-17
pve-firmware 1.0-11
pve-headers-2.6.32-4-pve 2.6.32-32
pve-kernel-2.6.32-4-pve 2.6.32-33
pve-manager 1.8-17
pve-qemu-kvm 0.14.0-3
vzctl 3.0.26-1pve4
proxmox-ve-2.6.32 1.8-33

So, what's the issue here? I can't run KVM guests on the 2.6.32 branch, not even ones created on this system. Does anyone have an idea how to resolve this?

Thank you!

Martin