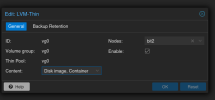

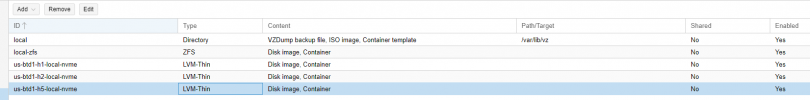

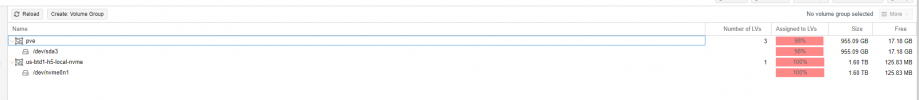

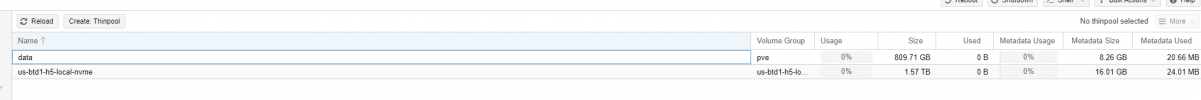

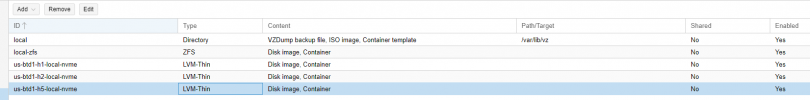

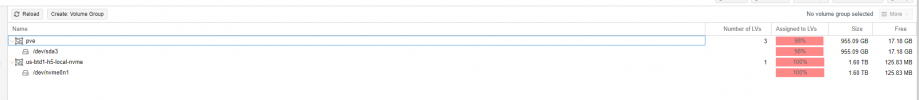

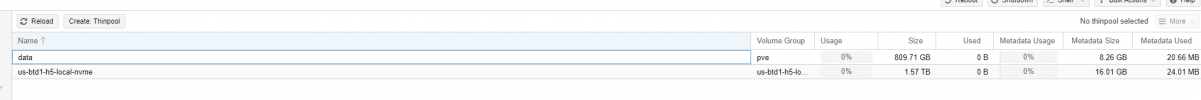

I'm having a hard time understanding this behavior or finding a good reason for it. It has become extremely problematic, and it is reproducible every time. In this case, I have 3 nodes installed on ZFS. They all have local and local-zfs, and 2 of them also have a dedicated datastore for a local NVMe SSD. If I join a new server to the cluster whose storage configuration is not identical (it is installed on a hardware RAID array and only has local and local-lvm), two things happen. First, local-lvm is "removed" and is no longer usable. Second, a phantom datastore is created that follows the same naming scheme as the local NVMe LVM-thin datastores on the other hosts.

On the cluster level, local-zfs has the nodes field explicitly set to the hosts with ZFS.
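The Python sketch below is a rough illustration of how the `nodes` property scopes entries in the cluster-wide `/etc/pve/storage.cfg`. Proxmox's cluster documentation warns that joining a cluster overwrites the joining node's `/etc/pve`, so only the cluster's copy of `storage.cfg` is left. The sketch parses an excerpt of the `storage.cfg` posted further down and lists which storage IDs each node would be offered. It assumes the documented default, namely that an entry without a `nodes` line is available on every node. The parser and helper names are hypothetical and are not part of Proxmox.

```python
# Minimal sketch (hypothetical helpers, not a Proxmox API): parse a
# storage.cfg-style excerpt and report which storage IDs a node would see.
# Assumption: an entry without a "nodes" line applies to every node.

STORAGE_CFG = """\
dir: local
    path /var/lib/vz
    content backup,vztmpl,iso

zfspool: local-zfs
    pool rpool/data
    content images,rootdir
    nodes us-btd1-h2,us-btd1-h3,us-btd1-h1
    sparse 1

lvmthin: us-btd1-h5-local-nvme
    thinpool us-btd1-h5-local-nvme
    vgname us-btd1-h5-local-nvme
    content images,rootdir
    nodes us-btd1-h5
"""


def parse_storage_cfg(text):
    """Return {storage_id: {"type": <type>, <option>: <value>, ...}}."""
    storages = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if not raw[0].isspace():
            # Unindented line is a section header, e.g. "zfspool: local-zfs"
            stype, sid = (part.strip() for part in line.split(":", 1))
            current = {"type": stype}
            storages[sid] = current
        elif current is not None:
            # Indented line is "<option> <value>" belonging to the last header
            key, _, value = line.partition(" ")
            current[key] = value.strip()
    return storages


def storages_for_node(storages, node):
    """List storage IDs whose "nodes" option includes node (or is absent)."""
    visible = []
    for sid, opts in storages.items():
        nodes = opts.get("nodes")
        if nodes is None or node in nodes.split(","):
            visible.append(sid)
    return visible


if __name__ == "__main__":
    cfg = parse_storage_cfg(STORAGE_CFG)
    for node in ("us-btd1-h1", "us-btd1-h5"):
        print(node, "->", storages_for_node(cfg, node))
```

Run as-is, it prints `us-btd1-h1 -> ['local', 'local-zfs']` and `us-btd1-h5 -> ['local', 'us-btd1-h5-local-nvme']`. `local-lvm` never shows up because the shared file has no entry for it at all.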

I first had to remove the phantom NVMe datastore at the cluster level and then set it up on the host (this new host has an NVMe SSD as well). That part is now fine. I have the following questions:

1. How do I recover local-lvm on this host and make use of it again?

2. Why is Proxmox overwriting storage configurations with seemingly no reason on new cluster members?

3. Why are phantom datastores being created (i.e. a datastore is created but shows a question mark icon because it doesn't actually exist)? This behavior seems extremely flawed, since clusters are frequently run on hardware that is not identical.

Host info

Proxmox VE 7.2-11

Linux 5.15.60-2-pve #1 SMP PVE 5.15.60-2 (Tue, 04 Oct 2022 16:52:28 +0200)

pve-manager/7.2-11/b76d3178

The screenshots at the top of this post show the storage config on the new host, and the `lvdisplay` output and `/etc/pve/storage.cfg` from it are below. Any insight is appreciated.


Code:

```
root@us-btd1-h5:~# lvdisplay
  --- Logical volume ---
  LV Path                /dev/pve/swap
  LV Name                swap
  VG Name                pve
  LV UUID                AOaDfH-orRd-LABH-Pr3a-0Xzx-ZAzO-AxAZ3G
  LV Write Access        read/write
  LV Creation host, time proxmox, 2022-10-15 20:17:18 -0500
  LV Status              available
  # open                 2
  LV Size                8.00 GiB
  Current LE             2048
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           253:0

  --- Logical volume ---
  LV Path                /dev/pve/root
  LV Name                root
  VG Name                pve
  LV UUID                9VSNs2-6QUR-9Md2-CBT0-0FSe-xvEg-70AJMt
  LV Write Access        read/write
  LV Creation host, time proxmox, 2022-10-15 20:17:18 -0500
  LV Status              available
  # open                 1
  LV Size                96.00 GiB
  Current LE             24576
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           253:1

  --- Logical volume ---
  LV Name                data
  VG Name                pve
  LV UUID                hsSeOm-3lHa-5z7H-mqJE-zQEv-xQ1G-snOqUp
  LV Write Access        read/write
  LV Creation host, time proxmox, 2022-10-15 20:17:35 -0500
  LV Pool metadata       data_tmeta
  LV Pool data           data_tdata
  LV Status              available
  # open                 0
  LV Size                <754.11 GiB
  Allocated pool data    0.00%
  Allocated metadata     0.25%
  Current LE             193051
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           253:4

  --- Logical volume ---
  LV Name                us-btd1-h5-local-nvme
  VG Name                us-btd1-h5-local-nvme
  LV UUID                jtucTa-5Sgd-bcGA-Rckc-z9aW-ML0m-6ob2Af
  LV Write Access        read/write
  LV Creation host, time us-btd1-h5, 2022-10-15 20:43:20 -0500
  LV Pool metadata       us-btd1-h5-local-nvme_tmeta
  LV Pool data           us-btd1-h5-local-nvme_tdata
  LV Status              available
  # open                 0
  LV Size                <1.43 TiB
  Allocated pool data    0.00%
  Allocated metadata     0.15%
  Current LE             373884
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           253:7
```

Code:

```
root@us-btd1-h5:~# cat /etc/pve/storage.cfg
dir: local
	path /var/lib/vz
	content backup,vztmpl,iso

zfspool: local-zfs
	pool rpool/data
	content images,rootdir
	nodes us-btd1-h2,us-btd1-h3,us-btd1-h1
	sparse 1

lvmthin: us-btd1-h2-local-nvme
	thinpool us-btd1-h2-local-nvme
	vgname us-btd1-h2-local-nvme
	content rootdir,images
	nodes us-btd1-h2

lvmthin: us-btd1-h1-local-nvme
	thinpool us-btd1-h1-local-nvme
	vgname us-btd1-h1-local-nvme
	content rootdir,images
	nodes us-btd1-h1

lvmthin: us-btd1-h5-local-nvme
	thinpool us-btd1-h5-local-nvme
	vgname us-btd1-h5-local-nvme
	content images,rootdir
	nodes us-btd1-h5
```
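For comparison, a stock Proxmox VE install on LVM defines `local-lvm` as an `lvmthin` entry pointing at thin pool `data` in VG `pve`. The `lvdisplay` output above shows that this thin pool still exists on us-btd1-h5, so adding an entry along these lines back to the shared `storage.cfg` may make `local-lvm` usable again. This is a sketch, not a confirmed fix. The `nodes us-btd1-h5` line is an assumption. It is meant to keep the ZFS-based nodes, which presumably have no `pve` VG, from listing `local-lvm` as an unavailable (question-mark) storage.

```
lvmthin: local-lvm
	thinpool data
	vgname pve
	content rootdir,images
	nodes us-btd1-h5
```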
