Hi everyone, long-time lurker here, but hey, there's a first time for everything, right?

I posted about a problem I'm facing on the Proxmox subreddit, and someone PM'd me suggesting I post it here too. So here's a copy-paste of the original post, plus everything they told me to try over there. I hope some folks here can help!

I'm attempting to switch my CEPH traffic from an RJ45 bond to fiber cards using a Dell S5048F-ON switch, but I've hit a roadblock. After switching the OVS interface to the second bridge (fiber) and restarting an OSD for testing, RBD becomes inaccessible from the swapped host.

Goal: Move CEPH traffic from 4x1G RJ45 (bonded) to 2x25G Fiber connections for improved performance.

Issue: Post-switch, RBD can't be accessed, although the OSD stays UP.

Steps Taken:

- Down all OSDs on the host and wait for the cluster to reach health:OK.

- Shutdown other CEPH services (manager, monitor, metadata server).

- Update network configuration to move the interface hosting CEPH's public & cluster network IP.

- Execute ifreload -a
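For anyone who wants to reproduce the sequence, here is a rough dry-run sketch of the steps above. The exact commands are an assumption (the post only describes the steps in words); the systemd target names are the stock Ceph ones, and `migration_plan` is a hypothetical helper that only builds the command list and runs nothing.

```python
import shlex


def migration_plan(osd_id: int) -> list[list[str]]:
    """Return the commands for the steps above, in order, without running them."""
    return [
        # Stop every OSD on this host (assumed equivalent of "down all OSDs").
        ["systemctl", "stop", "ceph-osd.target"],
        # Check cluster health; repeat by hand until it reports OK.
        ["ceph", "health"],
        # Stop the other Ceph daemons on this host.
        ["systemctl", "stop", "ceph-mgr.target", "ceph-mon.target", "ceph-mds.target"],
        # Apply the edited /etc/network/interfaces.
        ["ifreload", "-a"],
        # Bring a single OSD back for testing (osd.32 in the log below).
        ["systemctl", "start", f"ceph-osd@{osd_id}.service"],
    ]


if __name__ == "__main__":
    for argv in migration_plan(32):
        print(shlex.join(argv))
```

Printing the plan first makes it easy to compare against what was actually typed on the host before touching a live cluster.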

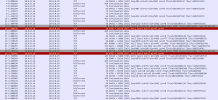

Code:

Mar 22 17:16:30 px06 pvestatd[2781]: status update time (5.943 seconds)

Mar 22 17:16:37 px06 pvedaemon[2827]: <root@pam> starting task UPID:px06:0000DFF9:000938B2:65FDAEE5:srvstart:osd.32:root@pam:

Mar 22 17:16:37 px06 systemd[1]: Starting ceph-osd@32.service - Ceph object storage daemon osd.32...

Mar 22 17:16:37 px06 systemd[1]: Started ceph-osd@32.service - Ceph object storage daemon osd.32.

Mar 22 17:16:38 px06 pvedaemon[2827]: <root@pam> end task UPID:px06:0000DFF9:000938B2:65FDAEE5:srvstart:osd.32:root@pam: OK

Mar 22 17:16:39 px06 pvestatd[2781]: got timeout

Mar 22 17:16:40 px06 pvestatd[2781]: status update time (5.952 seconds)

Mar 22 17:16:48 px06 ceph-osd[57345]: 2024-03-22T17:16:48.172+0100 7ff5fa5606c0 -1 osd.32 68903 log_to_monitors true

Mar 22 17:16:49 px06 pvestatd[2781]: got timeout

Mar 22 17:16:50 px06 pvestatd[2781]: status update time (5.949 seconds)

Mar 22 17:16:51 px06 pmxcfs[2270]: [status] notice: received log

Mar 22 17:16:56 px06 pmxcfs[2270]: [status] notice: received log

Mar 22 17:16:59 px06 pvestatd[2781]: got timeout

Mar 22 17:17:00 px06 pvestatd[2781]: status update time (5.939 seconds)

Mar 22 17:17:01 px06 CRON[58110]: pam_unix(cron:session): session opened for user root(uid=0) by (uid=0)

Mar 22 17:17:01 px06 CRON[58111]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)

Mar 22 17:17:01 px06 CRON[58110]: pam_unix(cron:session): session closed for user root

Mar 22 17:17:09 px06 pvestatd[2781]: got timeout

Mar 22 17:17:10 px06 pvestatd[2781]: status update time (5.947 seconds)

Mar 22 17:17:18 px06 sudo[58327]: zabbix : PWD=/ ; USER=root ; COMMAND=/usr/sbin/qm list

Mar 22 17:17:18 px06 sudo[58327]: pam_unix(sudo:session): session opened for user root(uid=0) by (uid=112)

Mar 22 17:17:19 px06 pvestatd[2781]: got timeout

Mar 22 17:17:19 px06 sudo[58327]: pam_unix(sudo:session): session closed for user root

Mar 22 17:17:19 px06 pvestatd[2781]: status update time (5.946 seconds)

Mar 22 17:17:30 px06 pvestatd[2781]: got timeout

Mar 22 17:17:30 px06 pvestatd[2781]: status update time (5.943 seconds)

Mar 22 17:17:49 px06 pvestatd[2781]: got timeout

Mar 22 17:17:50 px06 pvestatd[2781]: status update time (5.936 seconds)

Mar 22 17:17:53 px06 ceph-osd[57345]: 2024-03-22T17:17:53.957+0100 7ff5ee2b26c0 -1 osd.32 69657 set_numa_affinity unable to identify public interface '' numa node: (2) No such file or directory

Mar 22 17:17:59 px06 pvestatd[2781]: got timeout

Mar 22 17:18:00 px06 pvestatd[2781]: status update time (5.938 seconds)

Mar 22 17:18:09 px06 pvestatd[2781]: got timeout

Mar 22 17:18:10 px06 pvestatd[2781]: status update time (5.944 seconds)

Mar 22 17:18:17 px06 sudo[59204]: zabbix : PWD=/ ; USER=root ; COMMAND=/usr/sbin/qm list

Mar 22 17:18:17 px06 sudo[59204]: pam_unix(sudo:session): session opened for user root(uid=0) by (uid=112)

Mar 22 17:18:18 px06 ceph-osd[57345]: 2024-03-22T17:18:18.783+0100 7ff5efab56c0 -1 osd.32 69668 heartbeat_check: no reply from 10.0.0.17:6846 osd.8 ever on either front or back, first ping sent 2024-03-22T17:17:58.686812+0100 (oldest deadline 2024-03-22T17:18:18.686812+0100)

Mar 22 17:18:18 px06 ceph-osd[57345]: 2024-03-22T17:18:18.783+0100 7ff5efab56c0 -1 osd.32 69668 heartbeat_check: no reply from 10.0.0.15:6836 osd.31 ever on either front or back, first ping sent 2024-03-22T17:17:58.686812+0100 (oldest deadline 2024-03-22T17:18:18.686812+0100)

Mar 22 17:18:18 px06 ceph-osd[57345]: 2024-03-22T17:18:18.783+0100 7ff5efab56c0 -1 osd.32 69668 heartbeat_check: no reply from 10.0.0.17:6806 osd.35 ever on either front or back, first ping sent 2024-03-22T17:17:58.686812+0100 (oldest deadline 2024-03-22T17:18:18.686812+0100)

Mar 22 17:18:18 px06 ceph-osd[57345]: 2024-03-22T17:18:18.783+0100 7ff5efab56c0 -1 osd.32 69668 heartbeat_check: no reply from 10.0.0.31:6804 osd.44 ever on either front or back, first ping sent 2024-03-22T17:17:58.686812+0100 (oldest deadline 2024-03-22T17:18:18.686812+0100)

Mar 22 17:18:18 px06 ceph-osd[57345]: 2024-03-22T17:18:18.783+0100 7ff5efab56c0 -1 osd.32 69668 heartbeat_check: no reply from 10.0.0.22:6820 osd.48 ever on either front or back, first ping sent 2024-03-22T17:17:58.686812+0100 (oldest deadline 2024-03-22T17:18:18.686812+0100)

Mar 22 17:18:19 px06 sudo[59204]: pam_unix(sudo:session): session closed for user root

Mar 22 17:18:19 px06 ceph-osd[57345]: 2024-03-22T17:18:19.763+0100 7ff5efab56c0 -1 osd.32 69669 heartbeat_check: no reply from 10.0.0.17:6846 osd.8 ever on either front or back, first ping sent 2024-03-22T17:17:58.686812+0100 (oldest deadline 2024-03-22T17:18:18.686812+0100)

Hardware Configuration:

- Dell nodes with a mix of SSDs and HDDs, WALs on SSDs for HDD OSDs.

- 4x1G RJ45 links bonded in OVS (Intel I350)

- 2x25G fiber links on Broadcom BCM57414 (recent firmware update, also bonded in OVS)

- Both networks are communicating and VLANs are propagated correctly

- Proxmox Cluster config:

Code:

| Nodename | ID | Votes | Link 0    | Link 1        |
|----------|----|-------|-----------|---------------|
| px01     | 1  | 1     | 10.0.0.11 | 10.197.241.11 |
| px02     | 2  | 1     | 10.0.0.12 | 10.197.241.12 |
| px03     | 3  | 1     | 10.0.0.13 | 10.197.241.13 |
| px04     | 4  | 1     | 10.0.0.14 | 10.197.241.14 |
| px05     | 5  | 1     | 10.0.0.15 | 10.197.241.15 |
| px06     | 6  | 1     | 10.0.0.16 | 10.197.241.16 |
| px07     | 7  | 1     | 10.0.0.17 | 10.197.241.17 |
| px08     | 8  | 1     | 10.0.0.18 | 10.197.241.18 |
| px09     | 9  | 1     | 10.0.0.19 | 10.197.241.19 |

... etc.

- Ceph cluster config:

Code:

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

cluster_network = 10.0.0.11/24

fsid = 15247e0b-e91d-49a3-85a7-0e5c62da99e6

mon_allow_pool_delete = true

mon_host = 10.0.0.11 10.0.0.16 10.0.0.17 10.0.0.23 10.0.0.25 10.0.0.27 10.0.0.13

ms_bind_ipv4 = true

ms_bind_ipv6 = false

osd_pool_default_min_size = 2

osd_pool_default_size = 3

public_network = 10.0.0.11/24

- Before the switch:

Code:

auto lo

iface lo inet loopback

auto ens6f0np0

iface ens6f0np0 inet manual

auto ens6f1np1

iface ens6f1np1 inet manual

auto eno4

iface eno4 inet manual

auto eno3

iface eno3 inet manual

auto eno2

iface eno2 inet manual

auto eno1

iface eno1 inet manual

auto int241

iface int241 inet static

address 10.197.241.16/24

gateway 10.197.241.1

ovs_type OVSIntPort

ovs_bridge vmbr0

ovs_mtu 9000

ovs_options tag=241

auto int242

iface int242 inet static

address 10.0.0.16/24

ovs_type OVSIntPort

ovs_bridge vmbr0

ovs_mtu 9000

ovs_options tag=242

auto bond0

iface bond0 inet manual

ovs_bonds eno1 eno2 eno3 eno4

ovs_type OVSBond

ovs_bridge vmbr0

ovs_mtu 9000

ovs_options lacp=active bond_mode=balance-tcp

auto bond1

iface bond1 inet manual

ovs_bonds ens6f0np0 ens6f1np1

ovs_type OVSBond

ovs_bridge vmbr1

ovs_mtu 9000

ovs_options lacp=active bond_mode=balance-tcp

auto vmbr0

iface vmbr0 inet manual

ovs_type OVSBridge

ovs_ports bond0 int241 int242

ovs_mtu 9000

auto vmbr1

iface vmbr1 inet manual

ovs_type OVSBridge

ovs_ports bond1

ovs_mtu 9000

- After the switch:

Code:

auto lo

iface lo inet loopback

auto ens6f0np0

iface ens6f0np0 inet manual

auto ens6f1np1

iface ens6f1np1 inet manual

auto eno4

iface eno4 inet manual

auto eno3

iface eno3 inet manual

auto eno2

iface eno2 inet manual

auto eno1

iface eno1 inet manual

auto int241

iface int241 inet static

address 10.197.241.16/24

gateway 10.197.241.1

ovs_type OVSIntPort

ovs_bridge vmbr0

ovs_mtu 9000

ovs_options tag=241

auto int242

iface int242 inet static

address 10.0.0.16/24

ovs_type OVSIntPort

ovs_bridge vmbr1

ovs_mtu 9000

ovs_options tag=242

auto bond0

iface bond0 inet manual

ovs_bonds eno1 eno2 eno3 eno4

ovs_type OVSBond

ovs_bridge vmbr0

ovs_mtu 9000

ovs_options lacp=active bond_mode=balance-tcp

auto bond1

iface bond1 inet manual

ovs_bonds ens6f0np0 ens6f1np1

ovs_type OVSBond

ovs_bridge vmbr1

ovs_mtu 9000

ovs_options lacp=active bond_mode=balance-tcp

auto vmbr0

iface vmbr0 inet manual

ovs_type OVSBridge

ovs_ports bond0 int241

ovs_mtu 9000

auto vmbr1

iface vmbr1 inet manual

ovs_type OVSBridge

ovs_ports bond1 int242

ovs_mtu 9000

- Both the Ceph cluster and Ceph public networks are on int242; int241 is the front end for the Proxmox web UI.
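Since the two interface files are long and nearly identical, here is a small sketch that extracts the `ovs_bridge` of each `iface` stanza and reports which ports changed bridge. `bridge_map` and `moved_ports` are hypothetical helper names, and the `BEFORE`/`AFTER` strings are trimmed-down excerpts of the configs above, not the full files.

```python
def bridge_map(interfaces_text: str) -> dict[str, str]:
    """Map each iface name to its ovs_bridge in an ifupdown2-style config."""
    bridges: dict[str, str] = {}
    current = None
    for raw in interfaces_text.splitlines():
        words = raw.split()
        if not words:
            continue
        if words[0] == "iface" and len(words) > 1:
            current = words[1]  # start of a new stanza
        elif words[0] == "ovs_bridge" and current is not None and len(words) > 1:
            bridges[current] = words[1]
    return bridges


def moved_ports(before: str, after: str) -> dict[str, tuple[str, str]]:
    """Return {iface: (old_bridge, new_bridge)} for ports whose bridge changed."""
    old, new = bridge_map(before), bridge_map(after)
    return {
        name: (old[name], new[name])
        for name in old
        if name in new and old[name] != new[name]
    }


# Trimmed excerpts of the two configs shown above.
BEFORE = """
iface int241 inet static
    ovs_bridge vmbr0
iface int242 inet static
    ovs_bridge vmbr0
iface bond1 inet manual
    ovs_bridge vmbr1
"""

AFTER = """
iface int241 inet static
    ovs_bridge vmbr0
iface int242 inet static
    ovs_bridge vmbr1
iface bond1 inet manual
    ovs_bridge vmbr1
"""

if __name__ == "__main__":
    print(moved_ports(BEFORE, AFTER))  # {'int242': ('vmbr0', 'vmbr1')}
```

On the excerpts, the only port that moves is int242 (vmbr0 to vmbr1), which matches the intended change; the `ovs_ports` lines of the two bridges are not compared by this sketch.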

Things I tried:

Check for good communication between the swapped host and the other hosts:

- Checked for ping between swapped host and other hosts:

Code:

root@px06:~# ping 10.0.0.14

PING 10.0.0.14 (10.0.0.14) 56(84) bytes of data.

64 bytes from 10.0.0.14: icmp_seq=1 ttl=64 time=0.258 ms

64 bytes from 10.0.0.14: icmp_seq=2 ttl=64 time=0.188 ms

--- 10.0.0.14 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1021ms

rtt min/avg/max/mdev = 0.188/0.223/0.258/0.035 ms

- Captured packets on px06's int242 interface (Ceph public and cluster interface) after the switch:

... and the packet capture shows that the hosts (10.0.0.16 & 10.0.0.25 in that example) are communicating correctly on the Ceph services.

Code:

root@px15:~# netstat -tulpn | grep 6789

tcp 0 0 10.0.0.25:6789 0.0.0.0:* LISTEN 2140379/ceph-mon

root@px15:~# netstat -tulpn | grep 6881

tcp 0 0 10.0.0.25:6881 0.0.0.0:* LISTEN 3288007/ceph-mds

root@px15:~# netstat -tulpn | grep 6800

tcp 0 0 10.0.0.25:6800 0.0.0.0:* LISTEN 2097923/ceph-osd
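The netstat output above only shows that the daemons are listening on px15, and ping only exercises ICMP. As a complementary check, here is a minimal sketch that tries a plain TCP connect to those ports from the swapped host. `tcp_reachable` is a hypothetical helper; a successful connect only proves the TCP handshake completes over the new path, not that the Ceph messenger or cephx authentication works.

```python
import socket


def tcp_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable networks.
        return False
```

Run from px06, calling `tcp_reachable("10.0.0.25", port)` for each of 6789, 6881 and 6800 would cover the three listeners shown above. The same call against the peers named in the heartbeat_check lines (for example 10.0.0.17 on port 6846) would show whether those OSD ports are reachable over the fiber bridge.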