I wanted to start a short thread here because I think I may have found either a bug in the pveceph command or a mistake in its Proxmox documentation, or maybe I'm just misunderstanding something. Either way, I think it may help others.

I was going through the Ceph setup for a new server that I wanted to configure with an erasure-coded pool using k=2, m=1 and the CRUSH failure domain set to the OSD level. I hadn't done this in a while and had forgotten most of how I did it last time. I did remember that a few commands were needed to create an EC pool plus a replicated metadata pool, and that the pools then had to be added as storage with another command that specified both.

I initially found an online thread about using the erasure-code-profile option of the native Ceph tools to define a profile and then apply it with the native Ceph pool create commands. Having only a vague memory of the process, I tried this, and at first everything seemed to go smoothly. Unfortunately, I then ran into other issues, with the pool reporting that it didn't support RBD, so I decided to skim the docs again and blow up the pool.
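For anyone retracing the native route, here is a minimal sketch of the full sequence. The "didn't support RBD" error is consistent with two requirements described in the Ceph documentation. First, RBD can only write to an erasure-coded pool after `allow_ec_overwrites` is enabled on it. Second, EC pools cannot hold RBD's omap metadata, so a replicated pool is needed alongside the EC pool. The pool names `ec5-data` and `ec5-metadata`, the CRUSH rule `replicated-osd`, and the storage ID `ec5` are hypothetical. The OSD-level replicated rule is an assumption chosen to mirror `crush-failure-domain=osd`. The `--data-pool` option of `pvesm add rbd` reflects my understanding of the Proxmox RBD storage options and is not taken from this thread. By default the script only prints the commands. It runs them only when `APPLY=1` is set.

```shell
#!/bin/sh
# Sketch: native Ceph setup of an EC pool that RBD/Proxmox can use.
# All pool/rule/storage names are hypothetical examples (assumptions).
# Commands are only printed unless APPLY=1 is set in the environment.

PROFILE=raid5-profile      # EC profile name used in this thread
DATA_POOL=ec5-data         # hypothetical erasure-coded data pool
META_POOL=ec5-metadata     # hypothetical replicated metadata pool
META_RULE=replicated-osd   # hypothetical OSD-level replicated CRUSH rule
STORAGE_ID=ec5             # hypothetical Proxmox storage ID

# Print a command; execute it only when APPLY=1.
run() {
    printf '+ %s\n' "$*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

ec_native_plan() {
    # 1. EC profile: 2 data chunks + 1 coding chunk, spread across OSDs
    run ceph osd erasure-code-profile set "$PROFILE" k=2 m=1 crush-failure-domain=osd
    # 2. Erasure-coded data pool built from that profile
    run ceph osd pool create "$DATA_POOL" erasure "$PROFILE"
    # 3. RBD needs partial overwrites, which EC pools only allow once this flag is set
    run ceph osd pool set "$DATA_POOL" allow_ec_overwrites true
    run ceph osd pool application enable "$DATA_POOL" rbd
    # 4. Replicated pool for RBD image metadata (EC pools do not support omap)
    run ceph osd crush rule create-replicated "$META_RULE" default osd
    run ceph osd pool create "$META_POOL"
    run ceph osd pool set "$META_POOL" crush_rule "$META_RULE"
    run rbd pool init "$META_POOL"
    # 5. Proxmox storage: images are registered in META_POOL, data objects go to DATA_POOL
    run pvesm add rbd "$STORAGE_ID" --pool "$META_POOL" --data-pool "$DATA_POOL" --content images,rootdir
}

ec_native_plan
```

Keeping the metadata and data pools separate is what lets RBD use the EC pool at all. The images themselves live in the replicated pool, and only their data objects are redirected to the EC pool.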

I had previously seen this command in the Proxmox documentation:

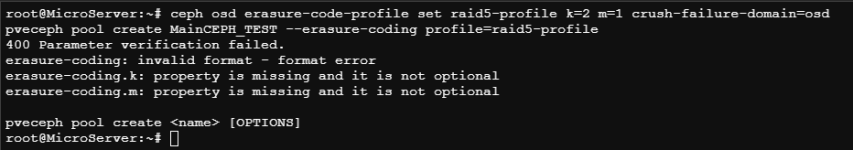

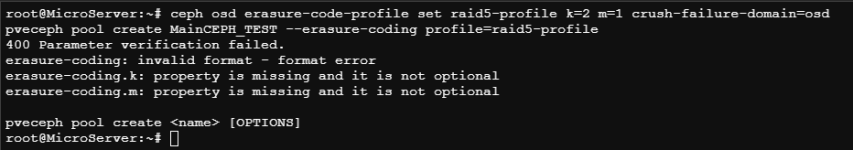

but was unable to get it to accept the profile I had created and instead just went with the working CEPH native commands(this was the pool I just had to blow up). Below are the commands I attempted to run before and again just now, and the error that I got.

I ultimately just reformatted the command using pveceph instead of trying to use a profile and ended up with the following.

If anyone can explain what I did wrong or let me know if this was actually a bug then I'd appreciate it.

pve-manager/8.3.0/c1689ccb1065a83b (running kernel: 6.8.12-4-pve)

ceph version 19.2.0 (3815e3391b18c593539df6fa952c9f45c37ee4d0) squid (stable)

I was going through the CEPH setup for a new server that I wanted to configure with an Erasure Coded pool with a k=2, m=1 config that had a crush-failure-domain set to the OSD level. I haven't done this in a bit, and forgot most of how I did it last time. I did remember there being a few commands that were needed in order to establish a EC pool and a replicated metadata pool, and that they needed to be added as storage using another command that specified this.

I initially located an online thread about using the erasure-code-profile option in the native CEPH tools in order to establish a profile, then apply it using the native CEPH create commands. Having only a vague memory I opted to try this and it all seemed to be going smoothly. Unfortunately I ran into some other issues there with the pool saying it didn't support RBD and I ultimately decided to skim the docs again and blow up the pool.

I had previously seen the command,

Code:

pveceph pool create <pool-name> --erasure-coding profile=<profile-name>

Code:

ceph osd erasure-code-profile set raid5-profile k=2 m=1 crush-failure-domain=osd

pveceph pool create MainCEPH_EC5-data --erasure-coding profile=raid5-profile
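Before pointing pveceph at the profile, it may be worth confirming that Ceph stored it with the intended values. The sketch below prints those checks, or runs them when `APPLY=1` is set. The last command is an untested assumption on my part and does not come from the docs or this post. If the `--erasure-coding` property string treats `k` and `m` as required even when `profile=` is supplied, then `profile=<profile-name>` on its own would be rejected, and supplying all three might get past the parser. Also, as far as I know, pveceph names the resulting pools `<pool-name>-data` and `<pool-name>-metadata`. Passing `MainCEPH_EC5-data` as the pool name would therefore presumably produce `MainCEPH_EC5-data-data`.

```shell
#!/bin/sh
# Sketch: sanity checks around the profile-based pveceph attempt.
# Commands are only printed unless APPLY=1 is set in the environment.

PROFILE=raid5-profile   # profile name from this thread
POOL=MainCEPH_EC5       # base name; pveceph is assumed to add -data/-metadata

# Print a command; execute it only when APPLY=1.
run() {
    printf '+ %s\n' "$*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

profile_checks() {
    # Confirm the profile exists and shows k=2, m=1, crush-failure-domain=osd
    run ceph osd erasure-code-profile ls
    run ceph osd erasure-code-profile get "$PROFILE"
    # ASSUMPTION (untested): k and m may be mandatory in the --erasure-coding
    # string even when profile= is given, so all three are passed here.
    run pveceph pool create "$POOL" --erasure-coding "k=2,m=1,profile=$PROFILE"
}

profile_checks
```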

In the end, I rewrote the pveceph command to pass the erasure-coding parameters directly instead of referencing a profile, and ended up with the following:

Code:

pveceph pool create MainCEPH_EC5 --pg_num 32 --erasure-coding k=2,m=1,failure-domain=osd

If anyone can explain what I did wrong, or confirm whether this is actually a bug, I'd appreciate it.

pve-manager/8.3.0/c1689ccb1065a83b (running kernel: 6.8.12-4-pve)

ceph version 19.2.0 (3815e3391b18c593539df6fa952c9f45c37ee4d0) squid (stable)
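For anyone landing here with the same setup, the result of the working `pveceph pool create MainCEPH_EC5 --pg_num 32 --erasure-coding k=2,m=1,failure-domain=osd` command can be inspected with a few read-only commands. This sketch assumes pveceph's usual naming, `MainCEPH_EC5-data` and `MainCEPH_EC5-metadata`. It does not assume a name for the EC profile that pveceph generated. Instead, it reads that name back from the data pool. `pvesm status` will only list a new storage entry if pveceph added one, which I believe it does by default for EC pools. The sketch only prints the commands unless `APPLY=1` is set.

```shell
#!/bin/sh
# Sketch: read-only checks after the working pveceph EC command.
# Commands are only printed unless APPLY=1 is set in the environment.

POOL=MainCEPH_EC5   # base pool name from this thread

# Print a command; execute it only when APPLY=1.
run() {
    printf '+ %s\n' "$*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

verify_ec_pools() {
    # Both pools should be listed: the EC data pool and the replicated metadata pool
    run ceph osd pool ls detail
    # The EC profile pveceph attached to the data pool (name chosen by pveceph)
    run ceph osd pool get "${POOL}-data" erasure_code_profile
    # Must report true for RBD to write to the EC pool
    run ceph osd pool get "${POOL}-data" allow_ec_overwrites
    # Storage entry Proxmox created for the pool pair, if any
    run pvesm status
}

verify_ec_pools
```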
