For context, I have a nine-node Proxmox cluster. We've just added five new nodes, each with 4 x 3TB NVMe drives. I've given them a separate CRUSH rule and a device class of `nvme` so they can be used separately from the original four nodes. Those are SSD and are now on their own new replicated rule (so that `ceph osd pool autoscale-status` works).

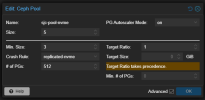

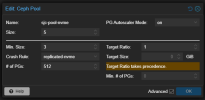

But I'm having some issues. Here is the pool detail:

What I'm expecting is that I 'should' be able to drop two nodes at once and everything keeps working. But it... doesn't.

I've spun up a simple VM to run fio on, and if I drop more than one host, the VM's disk gets corrupted and nothing works from that point on. The disk won't boot and it's a full reinstall. I can't even get dmesg from the VM anymore (it fails with a binary error and similar; it just can't run it). I'm struggling to work out why this would be the case.

Am I doing something fundamentally wrong? I've spent probably two weeks benchmarking and testing, and this feels like a deal breaker for the N+2 redundancy I'm expecting from Ceph.

Any pointers on what I could look at to see what's going on? I can't see any reason why this should happen with the 5/3 configuration, but it does.
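To spell out the arithmetic behind the N+2 expectation, here is a minimal back-of-envelope sketch. It assumes (this isn't confirmed above) that "5/3" means `size=5` and `min_size=3`, that the NVMe rule's failure domain is `host`, and that each placement group (PG) gets one replica on each of the five NVMe hosts. The function names are hypothetical, purely for illustration. It models only replica counts: a replicated PG roughly stays active while at least `min_size` replicas are up.

```python
"""Back-of-envelope check of the N+2 expectation for a replicated pool.

Assumptions (not confirmed by the post): "5/3" means size=5, min_size=3,
the CRUSH failure domain is `host`, and there are 5 hosts in the rule.
"""


def surviving_replicas(size: int, hosts_in_rule: int, hosts_lost: int) -> int:
    """Worst-case replicas left for one PG after `hosts_lost` hosts go down.

    With a host failure domain every replica sits on a distinct host, so in
    the worst case each lost host held one of this PG's replicas.
    """
    if size > hosts_in_rule:
        raise ValueError("a host failure domain cannot place more replicas than hosts")
    return max(size - hosts_lost, 0)


def pg_stays_active(size: int, min_size: int, hosts_in_rule: int, hosts_lost: int) -> bool:
    """Simplified model: a replicated PG serves I/O while >= min_size replicas are up."""
    return surviving_replicas(size, hosts_in_rule, hosts_lost) >= min_size


if __name__ == "__main__":
    for lost in range(4):
        ok = pg_stays_active(size=5, min_size=3, hosts_in_rule=5, hosts_lost=lost)
        print(f"{lost} host(s) down -> I/O continues: {ok}")
```

Under those assumptions, losing two hosts still leaves three replicas, which meets `min_size`, while a third failure would not. If the real cluster behaves worse than this, the cause lies outside this simple replica-count model, for example in the pool's actual settings.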
