Hi everyone,

I have been having a recurring problem on my Proxmox server lately.

For the past week, my Proxmox server has been showing a lot of IO delay, as it did tonight:

The same graph, but over several days:

When checking /var/log/syslog, the only alerts that could be a cause or a consequence of this IO delay come from rrdcached.

So far, the only way I have found to get past the problem is the following:

```shell
systemctl stop rrdcached.service
rm -rf /var/lib/rrdcached/db/pve2-storage/*
rm -rf /var/lib/rrdcached/db/pve2-vm/*
rm -rf /var/lib/rrdcached/db/pve2-node/*
rm -rf /var/lib/rrdcached/journal/
```
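For anyone who wants to repeat these steps, here is a minimal sketch that wraps them in one shell function. It assumes the stock Proxmox layout under /var/lib/rrdcached with the pve2-storage, pve2-vm and pve2-node directories. RRD_BASE and SYSTEMCTL are hypothetical variables added only so the function can be dry-run against a scratch directory, and recreating the journal directory and starting rrdcached again at the end are my own assumptions, not part of the steps above.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical knobs: the defaults match the commands above, but they can be
# overridden to dry-run the function against a scratch directory.
RRD_BASE="${RRD_BASE:-/var/lib/rrdcached}"
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

# reset_rrd: stop rrdcached, wipe the RRD databases and journal, start it again.
reset_rrd() {
  # Stop the daemon so it does not write to the files while they are deleted
  "$SYSTEMCTL" stop rrdcached.service

  # Remove the storage, VM and node RRD databases (all graph history is lost)
  local d
  for d in pve2-storage pve2-vm pve2-node; do
    rm -rf "${RRD_BASE:?}/db/${d}/"*
  done

  # Remove the journal, then recreate the empty directory
  # (assumption: harmless even if rrdcached would recreate it itself)
  rm -rf "${RRD_BASE:?}/journal/"
  mkdir -p "${RRD_BASE}/journal"

  # Assumption: not part of the original steps, but the daemon has to be
  # running again for the graphs to resume
  "$SYSTEMCTL" start rrdcached.service
}
```

Running `reset_rrd` as root deletes all stored graph history, so it is a workaround for the symptom rather than a fix for whatever is causing the IO delay.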

This had only happened twice before (10 months ago and 8 months ago), but in the last 8 days alone I have already had to apply this "fix" 5 times!

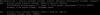

Here is what I get with the following command (only rrdcached errors):

```shell
zgrep --color=always "error\|fail\|crit" /var/log/syslog | ccze -A
```

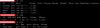
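To see how often this happens, a small sketch like the following could count, per hour, the rrdcached lines matching the same error|fail|crit pattern. It assumes the classic syslog timestamp format ("Mar 20 20:31:05 host prog[pid]: msg") and log files passed oldest first; count_rrd_errors is a hypothetical helper name, not a Proxmox tool.

```shell
#!/usr/bin/env bash

# count_rrd_errors FILE...
# Hypothetical helper: prints "<count> <Mon> <DD> <HH>:00" for every hour in
# which rrdcached logged a line matching error|fail|crit (same pattern as the
# zgrep command above).
# Assumes classic syslog timestamps and files passed oldest first, so lines
# from the same hour are adjacent and uniq -c can group them without sorting.
count_rrd_errors() {
  # zcat -f reads both plain logs and gzip-rotated ones (e.g. syslog.2.gz)
  zcat -f -- "$@" \
    | grep 'rrdcached' \
    | grep -E 'error|fail|crit' \
    | awk '{ split($3, t, ":"); print $1, $2, t[1] ":00" }' \
    | uniq -c
}
```

For example, `count_rrd_errors /var/log/syslog.1 /var/log/syslog` prints one line per hour together with the number of matching rrdcached messages, which makes it easier to line the errors up with the IO delay graph.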

And here is the full syslog for the period just before the rrdcached errors started (the 20th, between 20:30 and 20:39):

```shell
zgrep --color=always "20 20:3" /var/log/syslog | ccze -A
```

Every time this error happens, all my VMs slow down to the point of being unusable.

So I'm starting to worry that there is a real problem with my configuration.

Here is some information about the current configuration:

Standalone Proxmox server (so I doubt a quorum problem is involved)

VMs running on it:

- 1 Windows
- 6 Linux

pveversion:

pveperf:

iostat -x 2 5 (first report, i.e. statistics since boot):

iostat -x 2 5 (following reports):

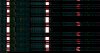

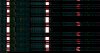

Two iotop snapshots taken 10 s apart: the "kworker" threads appear and disappear quickly, even when they reach 99.99%:
