Hi all,

I have been working for 5 days now on setting up a Fujitsu ETERNUS DX100 S5 storage system on Proxmox.

I use a QLogic QLE2692 card for a direct Fibre Channel connection.

If I pass this card through directly to a Windows VM, I can see and interact with the storage without any problems.

So I think it's a driver or connection problem on the Proxmox side.

The driver is the stock qla2xxx driver built into the default Debian kernel.

(https://driverdownloads.qlogic.com/...uct.aspx?ProductCategory=39&Product=1259&Os=5)

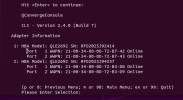

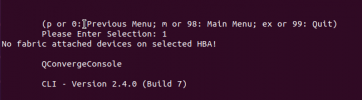

qaucli

I can now run the command-line tool qaucli, and I can see the storage there as well (see the "device list" pictures).

The only message that puzzles me is "no fabric attached device". What does this mean? When I try to set it with the tool, I can't set a fabric attached device.
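
In case it helps with diagnosing, here is a minimal sketch of how the port state and topology of each HBA port could be read on the host. It assumes the standard Linux FC transport sysfs layout under `/sys/class/fc_host`; the function name `read_fc_hosts` is only illustrative and not part of any tool. With a direct connection (no switch), I would expect `port_type` to report a loop or point-to-point topology rather than a fabric one, which may be all the "no fabric attached device" message refers to, but that is an assumption.

```python
from pathlib import Path


def read_fc_hosts(sysfs_root="/sys/class/fc_host"):
    """Return {host_name: {attribute: value}} for each FC host under sysfs_root.

    Attributes that cannot be read are reported as None.
    """
    attrs = ("port_name", "port_state", "port_type", "speed")
    hosts = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        # No FC transport class present (driver not loaded, or not Linux)
        return hosts
    for host_dir in sorted(root.iterdir()):
        info = {}
        for attr in attrs:
            try:
                info[attr] = (host_dir / attr).read_text().strip()
            except OSError:
                info[attr] = None
        hosts[host_dir.name] = info
    return hosts


if __name__ == "__main__":
    for name, info in read_fc_hosts().items():
        print(name, info)
```

If `port_state` is not `Online` for the port that is cabled to the storage, the problem would be at the link level rather than with LUN mapping.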

You are my last hope; I have searched all of Google and the Debian forum.

I think I have just overlooked something and only need one command to say: mount "this one".
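
The kind of "one command" I have in mind might be a SCSI rescan. The sketch below is an assumption on my part, not a confirmed fix: it writes the wildcard `- - -` (channel, target, LUN) to the `scan` file of every host under `/sys/class/scsi_host`, which is the usual Linux way to ask a host adapter to look for new devices. The function name `rescan_scsi_hosts` and the `dry_run` parameter are hypothetical, and a real run needs root.

```python
from pathlib import Path


def rescan_scsi_hosts(sysfs_root="/sys/class/scsi_host", dry_run=True):
    """Ask every SCSI host under sysfs_root to rescan all channels, targets and LUNs.

    Returns the list of 'scan' files that were written, or that would be
    written when dry_run is True.
    """
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    scan_files = [host_dir / "scan" for host_dir in sorted(root.iterdir())]
    if not dry_run:
        for scan_file in scan_files:
            # "- - -" is the wildcard for channel, target and LUN
            scan_file.write_text("- - -\n")
    return scan_files


if __name__ == "__main__":
    # Dry run by default: only list what would be rescanned
    for path in rescan_scsi_hosts():
        print(path)
```

After a real rescan, any LUNs the array presents to the host should show up as new block devices, for example in `lsblk`.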

I even tried to set up the storage in an Ubuntu VM, but I ran into the same problem there.

If you need more information, just send me a message.

I hope someone can give me the decisive hint.

Thanks for your answers.

(This is the (bad) English version of my post in the German Proxmox section.)

lspci

Code:

b3:00.0 Fibre Channel: QLogic Corp. ISP2722-based 16/32Gb Fibre Channel to PCIe Adapter (rev 01)
b3:00.1 Fibre Channel: QLogic Corp. ISP2722-based 16/32Gb Fibre Channel to PCIe Adapter (rev 01)

lspci -s b3:00.0 -vvv

Code:

b3:00.0 Fibre Channel: QLogic Corp. ISP2722-based 16/32Gb Fibre Channel to PCIe Adapter (rev 01)
    Subsystem: QLogic Corp. QLE2692 Dual Port 16Gb Fibre Channel to PCIe Adapter
    Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr+ Stepping- SERR+ FastB2B- DisINTx-
    Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-
    Latency: 0, Cache Line Size: 64 bytes
    Interrupt: pin A routed to IRQ 159
    NUMA node: 0
    Region 0: Memory at fba05000 (64-bit, prefetchable) [size=4K]
    Region 2: Memory at fba02000 (64-bit, prefetchable) [size=8K]
    Region 4: Memory at fb900000 (64-bit, prefetchable) [size=1M]
    Expansion ROM at fbe40000 [disabled] [size=256K]
    Capabilities: [44] Power Management version 3
        Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot-,D3cold-)
        Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-
    Capabilities: [4c] Express (v2) Endpoint, MSI 00
        DevCap: MaxPayload 2048 bytes, PhantFunc 0, Latency L0s <4us, L1 <1us
            ExtTag- AttnBtn- AttnInd- PwrInd- RBE+ FLReset- SlotPowerLimit 50.000W
        DevCtl: Report errors: Correctable+ Non-Fatal+ Fatal+ Unsupported+
            RlxdOrd+ ExtTag- PhantFunc- AuxPwr- NoSnoop+
            MaxPayload 256 bytes, MaxReadReq 4096 bytes
        DevSta: CorrErr+ UncorrErr- FatalErr- UnsuppReq+ AuxPwr- TransPend-
        LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L0s L1, Exit Latency L0s <512ns, L1 <2us
            ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp-
        LnkCtl: ASPM Disabled; RCB 64 bytes Disabled- CommClk+
            ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-
        LnkSta: Speed 8GT/s, Width x8, TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-
        DevCap2: Completion Timeout: Range B, TimeoutDis+, LTR-, OBFF Not Supported
        DevCtl2: Completion Timeout: 65ms to 210ms, TimeoutDis-, LTR-, OBFF Disabled
        LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-
            Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-
            Compliance De-emphasis: -6dB
        LnkSta2: Current De-emphasis Level: -3.5dB, EqualizationComplete+, EqualizationPhase1+
            EqualizationPhase2+, EqualizationPhase3+, LinkEqualizationRequest-
    Capabilities: [88] Vital Product Data
pcilib: sysfs_read_vpd: read failed: Input/output error
        Not readable
    Capabilities: [90] MSI-X: Enable+ Count=16 Masked-
        Vector table: BAR=2 offset=00000000
        PBA: BAR=2 offset=00001000
    Capabilities: [9c] Vendor Specific Information: Len=0c <?>
    Capabilities: [100 v1] Advanced Error Reporting
        UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-
        UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq+ ACSViol-
        UESvrt: DLP+ SDES+ TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-
        CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- NonFatalErr-
        CEMsk: RxErr+ BadTLP+ BadDLLP+ Rollover+ Timeout+ NonFatalErr+
        AERCap: First Error Pointer: 00, GenCap+ CGenEn- ChkCap+ ChkEn-
    Capabilities: [154 v1] Alternative Routing-ID Interpretation (ARI)
        ARICap: MFVC- ACS-, Next Function: 1
        ARICtl: MFVC- ACS-, Function Group: 0
    Capabilities: [1c0 v1] #19
    Capabilities: [1f4 v1] Vendor Specific Information: ID=0001 Rev=1 Len=014 <?>
    Kernel driver in use: qla2xxx
    Kernel modules: qla2xxx
